Set2Box Similarity Preserving Representation Learning for Sets Geon Lee

2025-05-03

0

0

2.06MB

12 页

10玖币

侵权投诉

Set2Box: Similarity Preserving Representation

Learning for Sets

Geon Lee

KAIST AI

geonlee0325@kaist.ac.kr

Chanyoung Park

KAIST ISysE & AI

cy.park@kaist.ac.kr

Kijung Shin

KAIST AI & EE

kijungs@kaist.ac.kr

Abstract—Sets have been used for modeling various types

of objects (e.g., a document as the set of keywords in it and

a customer as the set of the items that she has purchased).

Measuring similarity (e.g., Jaccard Index) between sets has been

a key building block of a wide range of applications, including,

plagiarism detection, recommendation, and graph compression.

However, as sets have grown in numbers and sizes, the compu-

tational cost and storage required for set similarity computation

have become substantial, and this has led to the development of

hashing and sketching based solutions.

In this work, we propose SET2BOX, a learning-based approach

for compressed representations of sets from which various simi-

larity measures can be estimated accurately in constant time. The

key idea is to represent sets as boxes to precisely capture overlaps

of sets. Additionally, based on the proposed box quantization

scheme, we design SET2BOX+, which yields more concise but

more accurate box representations of sets. Through extensive

experiments on 8real-world datasets, we show that, compared

to baseline approaches, SET2BOX+is (a) Accurate: achieving up

to 40.8×smaller estimation error while requiring 60% fewer bits

to encode sets, (b) Concise: yielding up to 96.8×more concise

representations with similar estimation error, and (c) Versatile:

enabling the estimation of four set-similarity measures from a

single representation of each set.

I. INTRODUCTION

Sets are ubiquitous, modeling various types of objects in

many domains, including (a) a document: modeled as the set

of keywords in it, (b) a customer: modeled as the set of the

items that she has purchased, (c) a social circle: modeled

as the set of its members, and (d) a question on online

Q/A platforms: modeled as the set of tags attached to the

question. Moreover, a number of set similarity measures (e.g.,

Jaccard Index and Dice Index), most of which are based on

the overlaps between sets, have been developed.

As a result of the omnipresence of sets, measuring their

similarity has been employed as a fundamental building block

of a wide range of applications, including the following:

◦Plagiarism Detection: Plagiarism is a critical problem in the

digital age, where a vast amount of resources is accessible. A

text is modeled as a “bag of words,” and texts whose set rep-

resentations are highly similar are suspected of plagiarism [1].

◦Gene Expression Mining: Mining gene expressions is

useful for understanding clinical conditions (e.g., tumor and

cancer). The functionality of a set of genes is estimated by

comparing the set with other sets with known functionality [2].

◦Recommendation: Recommendation is essential to support

users in finding relevant items. To this end, it is useful to

identify users with similar tastes (e.g., users who purchased a

similar set of items and users with similar activities) [3], [4].

◦Graph Compression: As large-scale graphs are om-

nipresent, compressing them into coarse-grained summary

graphs so that they fit in main memory is important. In

many graph compression algorithms, nodes with similar sets

of neighbors are merged into a supernode to yield a concise

summary graph while minimizing the information loss [5], [6].

◦Medical Image Analysis: CT or MRI provide exquisite

details of inner body (e.g., brain), and they are often described

as a collection of spatially localized anatomical features

termed “keypoints”. Sets of keypoints from different images

are compared to diagnose and investigate diseases [7]–[9].

As sets grow in numbers and sizes, computation of set

similarity requires substantial computational cost and storage.

For example, similarities between tens of millions of nodes,

which are represented as neighborhood sets of up to millions

of neighbors, were measured for graph compression [5].

Moreover, similarities between tens of thousands of movies,

which are represented as sets of up to hundreds of thousands

of users who have rated them, were measured for movie

recommendation [3].

In order to reduce the space and computation required

for set-similarity computation, a number of approaches based

on hashing and sketching [4], [10] have been developed.

While their simplicity and theoretical guarantees are tempting,

significant gains are expected if patterns in a given collection

of sets can be learned and exploited.

In this paper, we propose SET2BOX, a learning-based

approach for compressed representations of sets from which

various similarity measures can be estimated accurately in

constant time. The key idea of SET2BOX is to represent

sets as boxes to accurately capture the overlaps between

sets and thus their similarity based on them. Specifically,

by utilizing the volumes of the boxes to approximate the

sizes of the sets, SET2BOX derives representations that are:

(a) Concise: can represent sets of arbitrary sizes using the

same number of bits, (b) Accurate: can accurately model

overlaps between sets, and (c) Versatile: can be used to

estimate various set similarity measures in a constant time.

These properties are supported by the geometric nature of

boxes, which share primary characteristics of sets. In addition,

we propose SET2BOX+, which yields even more concise but

more accurate boxes based on the proposed box quantization

arXiv:2210.03282v1 [cs.SI] 7 Oct 2022

TABLE I: Frequently-used symbols.

Notation Definition

S={s1, ..., s|S|}set of sets

E={e1, ..., e|E|}set of entities

B = (c,f) a box with center cand offset f

V(B) volume of box B

T+and T−a set of positive & negative samples

Qc∈R|E|×dcenter embedding matrix of entities

Qf∈R|E|×d

+offset embedding matrix of entities

Dnumber of subspaces

Knumber of key boxes in each subspace

scheme. We summarize our contributions as follows:

•Accurate & Versatile Algorithm: We propose SET2BOX, a

set representation learning method that accurately preserves

similarity between sets in terms of four measures.

•Concise & Effective Algorithm: We devise SET2BOX+to

enhance SET2BOX through an end-to-end box quantization

scheme. It yields up to 40.8×more accurate similarity esti-

mation while requiring 60% fewer bits than its competitors.

•Extensive Experiments: Using 8 real-world datasets, we

validate the advantages of SET2BOX+over its competitors

and the effectiveness of each of its components.

For reproducibility, the code and data are available at https:

//github.com/geon0325/Set2Box.

In Section II, we review related work. In Section III, we

define the problem of similarity-preserving set embedding

and discuss intuitive approaches. In Section IV, we present

SET2BOX and SET2BOX+. In Section V, we provide ex-

perimental results. In Section VI, we analyze the considered

methods. Lastly, we offer conclusions in Section VII.

II. RELATED WORK

Here, we review previous studies related to our work.

Similarity-Preserving Embedding: Representation learning

for preserving similarities between instances has been studied

for graphs [11]–[14], images [15]–[17], and texts [18]. These

methods aim to yield high-quality embeddings by minimizing

the information loss of the original data. However, most of

them are designed to preserve the predetermined similarity

matrix, which are not extensible to new measures [13], [14].

In this paper, we focus on the problem of learning similarity-

preserving representations for sets, and we aim to learn a ver-

satile representation of sets, which various similarity measures

(e.g., Jaccard Index and Dice Index) can be estimated from.

Box Embedding: Boxes [19] are useful abstractions to ex-

press high-order information of the data. Thanks to their

powerful expressiveness, they have been used in diverse ap-

plications including knowledge bases [20]–[25], word em-

bedding [26], image embedding [27], and recommender sys-

tems [28], [29]. For instance, Query2Box [24] uses boxes to

embed queries with conjunctions (∧) or logical disjunctions

(∨). Zhang et al. [28] represent users as boxes to accurately

model the users’ preferences to the items. However, in this

work, we embed sets as boxes to accurately preserve their

structural relationships and also similarities between them. In

an algorithmic aspect, methods for improving the optimization

of learning boxes have been presented, and examples include

smoothing hard edges using Gaussian convolutions [30] and

improving the parameter identifiability of boxes using Gumbel

random variables [31].

Set Embedding: The problem of embedding sets has attracted

much attention, with unique requirements of permutation

invariance and size flexibility. For example, DeepSets [32]

uses simple symmetric functions over input features, and

Set2Set [33] is based on a LSTM-based pooling function. Set

Transformer [34] uses an attention-based pooling function to

aggregate information of the entities. Despite their promising

results in some predictive tasks, they suffer from several

limitations. First, they require attribute information of entities,

which in fact largely affects the quality of the set embeddings.

In addition, set representations are trained specifically for

downstream tasks, and thus they may lose explicit similarity

information of sets, which we aim to preserve in this paper.

In another aspect, sets can be represented as compact binary

vectors by hashing or sketching [4], [10], without requiring

attribute information. Such binary vectors are used by Locality

Sensitive Hashing (LSH) and its variants [35]–[37] for a rapid

search of similar sets based on a predefined similarity measure

(e.g., Jaccard Index). Refer to Section III for further discussion

of set embedding methods.

Differentiable Product Quantization: Product quantization

[38], [39] is an effective strategy for vector compression.

Recently, deep learning methods for learning discrete codes

in an end-to-end manner have been proposed [40], [41], and

they have been applied in knowledge graphs [42] and image

retrieval [43]–[45]. In this paper, we propose a novel box

quantization method for compressing boxes while preserving

their original geometric properties.

III. PRELIMINARIES

In this section, we introduce notations and define the prob-

lem. Then, we review some intuitive methods for the problem.

Notations: Consider a set S={s1,· · · , s|S|}of sets and a set

E={e1,· · · , e|E|}of entities. Each set s∈ S is a non-empty

subset of Eand its size (i.e., cardinality) is denoted by |s|. A

representation of the set sis denoted by zsand its encoding

cost (the number of bits to encode zs) in bits is denoted by

Cost(zs). Refer to Table I for frequently-used notations.

Problem Definition: The problem of learning similarity-

preserving set representations, which we focus in this work,

is formulated as:

Problem 1 (Similarity-Preserving Set Embedding).

•Given: (1) a set Sof sets and (2) a budget b

•Find: a latent representation zsof each set s∈ S

•to Minimize: the difference between (1) the similarity

between sand s0, and (2) the similarity between zsand

zs0for all s6=s0∈ S

•Subject to: the total encoding cost Cost({zs:s∈ S})≤b.

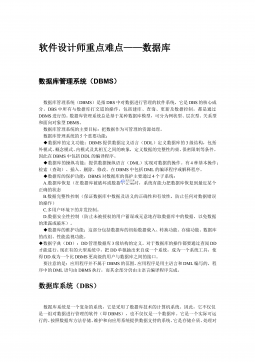

(a) Random Hashing

MSE = 0.0884

Cost = 77.312 KB

(b) Vector Embedding

MSE = 0.0495

Cost = 77.312 KB

(c) SET2BOX+

MSE = 0.0125

Cost = 15.695 KB

Fig. 1: Compared to intuitive methods, SET2BOX+preserves

the Overlap Coefficient between sets in the MovieLens 1M

dataset more accurately while requiring smaller encoding cost.

Rows and columns represent sets, and each cell represents the

estimation error of pairwise set similarity. The indices of the

sets are sorted by the sizes of the sets.

In this paper, we consider four set-similarity measures and

use the mean squared error (MSE)1to measure the differences,

while our proposed methods are not specialized to the choices.

Desirable Properties: We expect set embeddings for Prob-

lem 1 to have the following desirable properties:

•Accuracy: How can we accurately preserve similarities

between sets? Similarities approximated using learned rep-

resentations should be close to ground-truth similarities.

•Conciseness: How can we obtain compact representations

that give a good trade-off between accuracy and encoding

cost? It is desirable to use less amount of memory to store

embeddings while keeping them informative.

•Generalizability: Due to the size flexibility of sets, there

are infinitely many number of combinations of entities, and

thus retraining the entire model for new sets is intractable. It

is desirable for a model to be generalizable to unseen sets.

•Versatility: While there have been various definitions of set

similarities, the choice of the similarity metric plays a key

role in practical analyses and applications. This motivates

us to learn versatile representations of sets that can be used

to approximate diverse similarity measures.

•Speed: Using the obtained embeddings, set similarities

should be rapidly estimated, regardless of their cardinalities.

Intuitive Methods: Keeping the above desirable properties in

mind, we discuss simple and intuitive set-embedding methods

for similarity preservation.

•Random Hashing [4]: Each set sis encoded as a binary

vector zs∈ {0,1}dby mapping each entity into one of

the ddifferent values using a hash function h(·) : E →

{1,· · · , d}. Specifically, the representation zsis derived by:

zs[i] = (1if ∃e∈ss.t. h(e) = i

0otherwise.

The size of the set sis estimated from the L1 norm (or

the number of nonzero elements) of zs, i.e., |s| ≈ kzsk1.

1Ps6=s0∈S |sim(s, s0)−c

sim(zs,zs0)|2sim(·,·)and c

sim(·,·)are similar-

ity between sets and that between latent representations, respectively.

In addition, sizes of the intersection and the union of sets s

and s0are estimated from:

|s∩s0| ≈ kzsAND zs0k1and |s∪s0|≈kzsOR zs0k1,

respectively, where AND and OR are dimension-wise oper-

ations. Based on these approximations, any set similarities

(e.g., Jaccard Index) can be estimated.

•Vector Embedding: Another popular approach is to repre-

sent sets as vectors and compute the inner products between

them to estimate a predefined set similarity. More precisely,

given two sets sand s0and their vector representations zs

and zs0, it aims to approximate predefined sim(s, s0)by the

inner product of zsand zs0, i.e., hzs,zs0i ≈ sim(s, s0).

These methods, however, suffer from several limitations. In

random hashing, the maximum size of a set that a binary vector

can accurately represent is d, and thus sets whose sizes are

larger than dinevitably suffer from information loss. This

is empirically verified in Figure 1a; while estimations are

accurately made in small sets, the error increases as the sizes

of the sets are larger. The vector embedding method avoids

such a problem but shows weakness in its versatility. That is,

vectors are derived to preserve a predefined similarity (e.g.,

Jaccard Index), and thus they are not reusable to estimate other

similarity measures (e.g., Dice Index). To address these issues,

in this work, we propose SET2BOX and SET2BOX+, novel

end-to-end algorithms for similarity preserving set embedding.

As shown in Figure 1, SET2BOX+accurately preserves simi-

larities between sets compared to random hashing and vector

embedding methods, while requiring fewer bits to encode sets.

IV. PROPOSED METHOD

In this section, we present our proposed method

for similarity-preserving set embedding. We first present

SET2BOX, a novel algorithm for learning similarity-preserving

set representations using boxes (Sec. IV-A). Then we propose

SET2BOX+, an advanced version of SET2BOX, which derives

better conciseness and accuracy (Sec. IV-B).

A. SET2BOX: Preliminary Version

How can we derive set embeddings that accurately preserve

similarity in terms of various metrics? Towards this goal,

we first present SET2BOX, a preliminary set representation

method that effectively learns the set itself and the structural

relations with other sets.

Concepts: Abox is a d-dimensional hyper-rectangle whose

representation consists of its center and offset [19]. The center

describes the location of the box in the latent space and the

offset is the length of each edge of the box. Formally, given a

box B = (c,f) whose center c∈Rdand offset f∈Rd

+are in

the same latent space, the box is defined as a bounded region:

B≡ {p∈Rd: c −fpc+f},

where pis any point within the box. We let m∈Rdand

M∈Rdbe the vectors representing the minimum and the

maximum at each dimension, respectively, i.e., m=c−f

摘要:

展开>>

收起<<

Set2Box:SimilarityPreservingRepresentationLearningforSetsGeonLeeKAISTAIgeonlee0325@kaist.ac.krChanyoungParkKAISTISysE&AIcy.park@kaist.ac.krKijungShinKAISTAI&EEkijungs@kaist.ac.krAbstractSetshavebeenusedformodelingvarioustypesofobjects(e.g.,adocumentasthesetofkeywordsinitandacustomerasthesetoftheite...

声明:本站为文档C2C交易模式,即用户上传的文档直接被用户下载,本站只是中间服务平台,本站所有文档下载所得的收益归上传人(含作者)所有。玖贝云文库仅提供信息存储空间,仅对用户上传内容的表现方式做保护处理,对上载内容本身不做任何修改或编辑。若文档所含内容侵犯了您的版权或隐私,请立即通知玖贝云文库,我们立即给予删除!

相关推荐

-

.net笔试题选择题集VIP免费

2024-11-14 29

2024-11-14 29 -

产品需求文档 - 适合敏捷迭代开发的PRD文档应该怎么写VIP免费

2024-11-23 5

2024-11-23 5 -

产品需求文档 - 面向产品需求的验证管理VIP免费

2024-11-23 4

2024-11-23 4 -

产品需求文档 - 没有标准,只有沟通VIP免费

2024-11-23 4

2024-11-23 4 -

产品需求文档 - 产品需求应该怎么写VIP免费

2024-11-23 5

2024-11-23 5 -

产品需求文档 - 产品需求文档 PRD模板VIP免费

2024-11-23 33

2024-11-23 33 -

产品需求文档 - 产品需求核心组件分析VIP免费

2024-11-23 45

2024-11-23 45 -

2024版.新高考版.高考总复习.数学.5·3A版1_1集合VIP免费

2024-11-23 29

2024-11-23 29 -

2024版.新高考版.高考总复习.数学.5·3A版1_1集合(分层集训)VIP免费

2024-11-23 16

2024-11-23 16 -

产品需求文档 - 产品技能树之需求分析(一)VIP免费

2024-11-23 9

2024-11-23 9

分类:图书资源

价格:10玖币

属性:12 页

大小:2.06MB

格式:PDF

时间:2025-05-03

渝公网安备50010702506394

渝公网安备50010702506394