WAVEFIT: AN ITERATIVE AND NON-AUTOREGRESSIVE NEURAL VOCODER BASED ON FIXED-POINT ITERATION

Yuma Koizumi¹, Kohei Yatabe², Heiga Zen¹, Michiel Bacchiani¹

¹Google Research, Japan  ²Tokyo University of Agriculture and Technology, Japan

ABSTRACT

Denoising diffusion probabilistic models (DDPMs) and generative adversarial networks (GANs) are popular generative models for neural vocoders. DDPMs and GANs can be characterized by the iterative denoising framework and adversarial training, respectively. This study proposes a fast and high-quality neural vocoder called WaveFit, which integrates the essence of GANs into a DDPM-like iterative framework based on fixed-point iteration. WaveFit iteratively denoises an input signal and trains a deep neural network (DNN) to minimize an adversarial loss calculated from the intermediate outputs at all iterations. Subjective (side-by-side) listening tests showed no statistically significant differences in naturalness between human natural speech and speech synthesized by WaveFit with five iterations. Furthermore, WaveFit inference was more than 240 times faster than WaveRNN inference. Audio demos are available at google.github.io/df-conformer/wavefit/.

Index Terms: Neural vocoder, fixed-point iteration, generative adversarial networks, denoising diffusion probabilistic models.

1. INTRODUCTION

Neural vocoders [1–4] are artificial neural networks that generate a speech waveform given acoustic features. They are indispensable building blocks of recent speech generation applications. For example, they are used as the backbone module in text-to-speech (TTS) [5–10], voice conversion [11, 12], speech-to-speech translation (S2ST) [13–15], speech enhancement (SE) [16–19], speech restoration [20, 21], and speech coding [22–25]. Autoregressive (AR) models first revolutionized the quality of speech generation [1, 26–28]. However, because they require a large number of sequential operations for generation, parallelizing the computation is not trivial; thus, their processing time is sometimes far longer than the duration of the output signals.

To speed up the inference, non-AR models have gained a lot

of attention thanks to their parallelization-friendly model architec-

tures. Early successful studies of non-AR models are those based

on normalizing flows [3, 4, 29] which convert an input noise to a

speech using stacked invertible deep neural networks (DNNs) [30].

In the last few years, the approach using generative adversarial net-

works (GANs) [31] is the most successful non-AR strategy [32–41]

where they are trained to generate speech waveforms indistinguish-

able from human natural speech by discriminator networks. The lat-

est member of the generative models for neural vocoders is the de-

noising diffusion probabilistic model (DDPM) [42–49], which con-

verts a random noise into a speech waveform by the iterative sam-

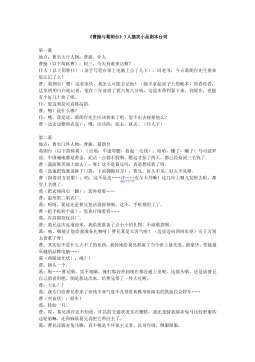

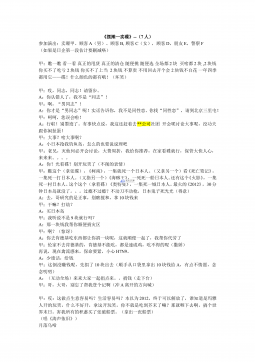

pling process as illustrated in Fig. 1 (a). With hundreds of iterations,

DDPMs can generate speech waveforms comparable to those of AR

models [42, 43].

Noise generation

GAN

Loss

Output

<latexit sha1_base64="nWeGbGNLJAMTdElJLWuIGd8Gu/I=">AAAClXichVHLSsNQFBzjq9ZX1YWCm2JRXJVTERVBKCriTqu2FR+UJF41mBdJWqihP+BacCEKCi7ET3Dpxh9w4SeISwU3LjxJA6KinpDcuXNnzs1wFFvXXI/osUFqbGpuaY21xds7Oru6Ez29BdcqO6rIq5ZuOeuK7ApdM0Xe0zxdrNuOkA1FF0XlYC44L1aE42qWueZVbbFtyHumtqupssfUxpZi+NVayadaKZGiNIWV/AkyEUghqmUrcYst7MCCijIMCJjwGOuQ4fKziQwINnPb8JlzGGnhuUANcfaWWSVYITN7wN893m1GrMn7oKcbulW+RefXYWcSw/RA1/RC93RDT/T+ay8/7BH8S5VXpe4Vdqn7aGD17V+XwauH/U/XHw6F1f/rgmwedjEVZtI4ox0yQVq17q8cnrysTq8M+yN0Sc+c84Ie6Y6TmpVX9SonVk4R50Flvo/lJyiMpTMTacqNp7Kz0chiGMQQRnkuk8hiEcvI870mjnGGc6lfmpHmpYW6VGqIPH34UtLSB6kFla0=</latexit>

y0

<latexit sha1_base64="feNpNBS1NA6fRrK97NgwcBF5KBw=">AAACl3ichVHNLsRQGD3qf/wNNsRGTIiNyVcRxIaQiKXBIDEyaeui0b+0dyYZzbyAB2Bh4SexEI9gaeMFLDyCWJLYWPjaaSIIvqa95557znd78umeZQaS6LFOqW9obGpuaU21tXd0dqW7e9YDt+QbIm+4lutv6logLNMReWlKS2x6vtBs3RIb+sFCdL5RFn5gus6arHhi29b2HHPXNDTJVKGg22GlWgzlmFotpjOUpbgGfwI1ARkkteymb1HADlwYKMGGgAPJ2IKGgJ8tqCB4zG0jZM5nZMbnAlWk2FtilWCFxuwBf/d4t5WwDu+jnkHsNvgWi1+fnYMYpge6phe6pxt6ovdfe4Vxj+hfKrzqNa/wil1Hfatv/7psXiX2P11/OHRW/6+LsknsYjrOZHJGL2aitEbNXz48eVmdWRkOR+iSnjnnBT3SHSd1yq/GVU6snCLFg1K/j+UnWB/PqpNZyk1k5uaTkbVgAEMY5blMYQ5LWEae7/VwjDOcK/3KrLKoLNWkSl3i6cWXUnIfWkyWYw==</latexit>

yt1

<latexit sha1_base64="OspnX+yHSbX7qkurs5eErJTXzw8=">AAACk3ichVE9S8NQFD3G7/pVFUFwEUvFqdyKqOhS1MFF0Go/oEpJ4qsG80XyWqjFP+Do4qCLgoP4Exxd/AMO/gRxVHBx8CYNiIp6Q96777xzbnI4mmsaviR6bFFa29o7Oru6Yz29ff0D8cGhvO9UPV3kdMd0vKKm+sI0bJGThjRF0fWEammmKGgHy8F9oSY833DsLVl3xY6l7tlGxdBVyVBxW7Ma9aOyLMcTlKKwxn826ahJIKp1J36LbezCgY4qLAjYkNybUOHzU0IaBJexHTQY87gzwnuBI8RYW2WWYIbK6AGve3wqRajN52CmH6p1/orJr8fKcSTpga7phe7php7o/ddZjXBG8C913rWmVrjlgePRzbd/VRbvEvufqj8UGrP/5wXeJCqYDz0Z7NENkcCt3tTXDk9fNheyycYkXdIz+7ygR7pjp3btVb/aENkzxDio9PdYfjb56VR6NkUbM4nMUhRZF8YwgSnOZQ4ZrGIduTCPE5zhXBlRFpUlZaVJVVoizTC+lLL2AdJ9lOU=</latexit>

yt

<latexit sha1_base64="IoBXzpB/i/H3onkl9rKzdMt4ZYs=">AAACk3ichVE9S8NQFD3Gr1q/qiIILmJRnMqtiIoupTq4CK21WmilJPFVQ/NFkhZq8Q84ujjoouAg/gRHF/+Agz9BHCu4OHiTBkRFvSHv3XfeOTc5HMXWNdcjeuqQOru6e3ojfdH+gcGh4djI6I5r1RxV5FVLt5yCIrtC10yR9zRPFwXbEbKh6GJXqa7597t14biaZW57DVvsGfKBqVU0VfYYKpQUo9k4Lm+XY3FKUFBTP5tk2MQRVsaK3aGEfVhQUYMBARMe9zpkuPwUkQTBZmwPTcYc7rTgXuAYUdbWmCWYITNa5fWAT8UQNfnsz3QDtcpf0fl1WDmFGXqkG2rRA93SM73/OqsZzPD/pcG70tYKuzx8MpF7+1dl8O7h8FP1h0Jh9v8835uHCpYDTxp7tAPEd6u29fWjs1ZuZWumOUtX9MI+L+mJ7tmpWX9Vr7Ni6xxRDir5PZafzc58IrmYoOxCPJUOI4tgEtOY41yWkMIGMsgHeZziHBfSuLQqpaX1NlXqCDVj+FLS5geNfZTF</latexit>

yT

<latexit sha1_base64="vkOcqP3l5iv4PNLUqQWZXpBdbVs=">AAAClXichVHLSsNQFJzGV62vqgsFN2JRXJVTERVBKCriTqu2ig9KEq8azIskLWroD7gWXIiCggvxE1y68Qdc+AnisoIbF56kAVFRT0ju3Lkz52Y4iq1rrkf0FJPq6hsam+LNiZbWtvaOZGdXwbVKjiryqqVbzpoiu0LXTJH3NE8Xa7YjZEPRxaqyPxOcr5aF42qWueId2mLLkHdNbUdTZY+p9U3F8A8qRZ8qxWSK0hRW/0+QiUAKUS1ayTtsYhsWVJRgQMCEx1iHDJefDWRAsJnbgs+cw0gLzwUqSLC3xCrBCpnZff7u8m4jYk3eBz3d0K3yLTq/Djv7MUiPdENVeqBbeqb3X3v5YY/gXw55VWpeYRc7jnuX3/51Gbx62Pt0/eFQWP2/LsjmYQcTYSaNM9ohE6RVa/7y0Wl1eXJp0B+iK3rhnJf0RPec1Cy/qtc5sXSGBA8q830sP0FhJJ0ZS1NuNJWdjkYWRx8GMMxzGUcW81hEnu81cYJzXEg90pQ0K83VpFIs8nTjS0kLH6bYlaw=</latexit>

x0

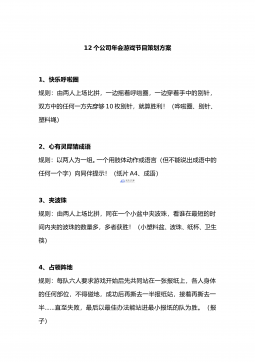

(a) DDPM-based models (b) GAN-based models (c) WaveFit

<latexit sha1_base64="+802x5u8mV5scpnnoIFSdVyQLts=">AAAClnichVHLSsNQFBzjq9ZX1Y3gRiyKq3IqouJCRPGxrNWqUEWSeNsG8yK5LdTiD7gXF4Ki4EL8BJdu/AEX/QRxqeDGhSdpQFTUE5I7d+7MSSZHc03Dl0T1JqW5pbWtPdYR7+zq7ulN9PVv+k7Z00VOd0zH29ZUX5iGLXLSkKbYdj2hWpoptrSDxeB8qyI833DsDVl1xa6lFm2jYOiqZCq/Y6mypKtmbfloL5GkFIU1/BOkI5BEVBkncYcd7MOBjjIsCNiQjE2o8PnKIw2Cy9wuasx5jIzwXOAIcfaWWSVYoTJ7wM8i7/IRa/M+6OmHbp3fYvLtsXMYo/RIN/RCD3RLT/T+a69a2CP4liqvWsMr3L3e48H1t39dFq8SpU/XHw6N1f/rgmwSBcyEmQzO6IZMkFZv+CuHpy/rs9nR2hhd0TPnvKQ63XNSu/KqX6+J7BniPKj097H8BJsTqfRUitYmk/ML0chiGMIIxnku05jHKjLIhX/2BOe4UAaVOWVJWWlIlabIM4AvpWQ+AICdlgg=</latexit>

F

GAN

Loss

Output

<latexit sha1_base64="nWeGbGNLJAMTdElJLWuIGd8Gu/I=">AAAClXichVHLSsNQFBzjq9ZX1YWCm2JRXJVTERVBKCriTqu2FR+UJF41mBdJWqihP+BacCEKCi7ET3Dpxh9w4SeISwU3LjxJA6KinpDcuXNnzs1wFFvXXI/osUFqbGpuaY21xds7Oru6Ez29BdcqO6rIq5ZuOeuK7ApdM0Xe0zxdrNuOkA1FF0XlYC44L1aE42qWueZVbbFtyHumtqupssfUxpZi+NVayadaKZGiNIWV/AkyEUghqmUrcYst7MCCijIMCJjwGOuQ4fKziQwINnPb8JlzGGnhuUANcfaWWSVYITN7wN893m1GrMn7oKcbulW+RefXYWcSw/RA1/RC93RDT/T+ay8/7BH8S5VXpe4Vdqn7aGD17V+XwauH/U/XHw6F1f/rgmwedjEVZtI4ox0yQVq17q8cnrysTq8M+yN0Sc+c84Ie6Y6TmpVX9SonVk4R50Flvo/lJyiMpTMTacqNp7Kz0chiGMQQRnkuk8hiEcvI870mjnGGc6lfmpHmpYW6VGqIPH34UtLSB6kFla0=</latexit>

y0

<latexit sha1_base64="vkOcqP3l5iv4PNLUqQWZXpBdbVs=">AAAClXichVHLSsNQFJzGV62vqgsFN2JRXJVTERVBKCriTqu2ig9KEq8azIskLWroD7gWXIiCggvxE1y68Qdc+AnisoIbF56kAVFRT0ju3Lkz52Y4iq1rrkf0FJPq6hsam+LNiZbWtvaOZGdXwbVKjiryqqVbzpoiu0LXTJH3NE8Xa7YjZEPRxaqyPxOcr5aF42qWueId2mLLkHdNbUdTZY+p9U3F8A8qRZ8qxWSK0hRW/0+QiUAKUS1ayTtsYhsWVJRgQMCEx1iHDJefDWRAsJnbgs+cw0gLzwUqSLC3xCrBCpnZff7u8m4jYk3eBz3d0K3yLTq/Djv7MUiPdENVeqBbeqb3X3v5YY/gXw55VWpeYRc7jnuX3/51Gbx62Pt0/eFQWP2/LsjmYQcTYSaNM9ohE6RVa/7y0Wl1eXJp0B+iK3rhnJf0RPec1Cy/qtc5sXSGBA8q830sP0FhJJ0ZS1NuNJWdjkYWRx8GMMxzGUcW81hEnu81cYJzXEg90pQ0K83VpFIs8nTjS0kLH6bYlaw=</latexit>

x0

Noise generation

MMSE

Output

<latexit sha1_base64="nWeGbGNLJAMTdElJLWuIGd8Gu/I=">AAAClXichVHLSsNQFBzjq9ZX1YWCm2JRXJVTERVBKCriTqu2FR+UJF41mBdJWqihP+BacCEKCi7ET3Dpxh9w4SeISwU3LjxJA6KinpDcuXNnzs1wFFvXXI/osUFqbGpuaY21xds7Oru6Ez29BdcqO6rIq5ZuOeuK7ApdM0Xe0zxdrNuOkA1FF0XlYC44L1aE42qWueZVbbFtyHumtqupssfUxpZi+NVayadaKZGiNIWV/AkyEUghqmUrcYst7MCCijIMCJjwGOuQ4fKziQwINnPb8JlzGGnhuUANcfaWWSVYITN7wN893m1GrMn7oKcbulW+RefXYWcSw/RA1/RC93RDT/T+ay8/7BH8S5VXpe4Vdqn7aGD17V+XwauH/U/XHw6F1f/rgmwedjEVZtI4ox0yQVq17q8cnrysTq8M+yN0Sc+c84Ie6Y6TmpVX9SonVk4R50Flvo/lJyiMpTMTacqNp7Kz0chiGMQQRnkuk8hiEcvI870mjnGGc6lfmpHmpYW6VGqIPH34UtLSB6kFla0=</latexit>

y0

<latexit sha1_base64="feNpNBS1NA6fRrK97NgwcBF5KBw=">AAACl3ichVHNLsRQGD3qf/wNNsRGTIiNyVcRxIaQiKXBIDEyaeui0b+0dyYZzbyAB2Bh4SexEI9gaeMFLDyCWJLYWPjaaSIIvqa95557znd78umeZQaS6LFOqW9obGpuaU21tXd0dqW7e9YDt+QbIm+4lutv6logLNMReWlKS2x6vtBs3RIb+sFCdL5RFn5gus6arHhi29b2HHPXNDTJVKGg22GlWgzlmFotpjOUpbgGfwI1ARkkteymb1HADlwYKMGGgAPJ2IKGgJ8tqCB4zG0jZM5nZMbnAlWk2FtilWCFxuwBf/d4t5WwDu+jnkHsNvgWi1+fnYMYpge6phe6pxt6ovdfe4Vxj+hfKrzqNa/wil1Hfatv/7psXiX2P11/OHRW/6+LsknsYjrOZHJGL2aitEbNXz48eVmdWRkOR+iSnjnnBT3SHSd1yq/GVU6snCLFg1K/j+UnWB/PqpNZyk1k5uaTkbVgAEMY5blMYQ5LWEae7/VwjDOcK/3KrLKoLNWkSl3i6cWXUnIfWkyWYw==</latexit>

yt1

<latexit sha1_base64="OspnX+yHSbX7qkurs5eErJTXzw8=">AAACk3ichVE9S8NQFD3G7/pVFUFwEUvFqdyKqOhS1MFF0Go/oEpJ4qsG80XyWqjFP+Do4qCLgoP4Exxd/AMO/gRxVHBx8CYNiIp6Q96777xzbnI4mmsaviR6bFFa29o7Oru6Yz29ff0D8cGhvO9UPV3kdMd0vKKm+sI0bJGThjRF0fWEammmKGgHy8F9oSY833DsLVl3xY6l7tlGxdBVyVBxW7Ma9aOyLMcTlKKwxn826ahJIKp1J36LbezCgY4qLAjYkNybUOHzU0IaBJexHTQY87gzwnuBI8RYW2WWYIbK6AGve3wqRajN52CmH6p1/orJr8fKcSTpga7phe7php7o/ddZjXBG8C913rWmVrjlgePRzbd/VRbvEvufqj8UGrP/5wXeJCqYDz0Z7NENkcCt3tTXDk9fNheyycYkXdIz+7ygR7pjp3btVb/aENkzxDio9PdYfjb56VR6NkUbM4nMUhRZF8YwgSnOZQ4ZrGIduTCPE5zhXBlRFpUlZaVJVVoizTC+lLL2AdJ9lOU=</latexit>

yt

<latexit sha1_base64="IoBXzpB/i/H3onkl9rKzdMt4ZYs=">AAACk3ichVE9S8NQFD3Gr1q/qiIILmJRnMqtiIoupTq4CK21WmilJPFVQ/NFkhZq8Q84ujjoouAg/gRHF/+Agz9BHCu4OHiTBkRFvSHv3XfeOTc5HMXWNdcjeuqQOru6e3ojfdH+gcGh4djI6I5r1RxV5FVLt5yCIrtC10yR9zRPFwXbEbKh6GJXqa7597t14biaZW57DVvsGfKBqVU0VfYYKpQUo9k4Lm+XY3FKUFBTP5tk2MQRVsaK3aGEfVhQUYMBARMe9zpkuPwUkQTBZmwPTcYc7rTgXuAYUdbWmCWYITNa5fWAT8UQNfnsz3QDtcpf0fl1WDmFGXqkG2rRA93SM73/OqsZzPD/pcG70tYKuzx8MpF7+1dl8O7h8FP1h0Jh9v8835uHCpYDTxp7tAPEd6u29fWjs1ZuZWumOUtX9MI+L+mJ7tmpWX9Vr7Ni6xxRDir5PZafzc58IrmYoOxCPJUOI4tgEtOY41yWkMIGMsgHeZziHBfSuLQqpaX1NlXqCDVj+FLS5geNfZTF</latexit>

yT

Log-mel spectrogram

<latexit sha1_base64="Zv18Jv5MgC4Mxrz/CC1v3efY/dE=">AAACkXichVE9S8NQFD2N3/WjVRfBpVgUp3Ir4tdUdBFcbGtroYok8amx+SJJC7X4B5zcRJ0UHMSf4OjiH3DoTxDHCi4O3qQBUVFvSN55551zXw5XsXXN9YiaEamjs6u7p7cv2j8wOBSLD48UXavqqKKgWrrllBTZFbpmioKneboo2Y6QDUUXm0plxT/frAnH1Sxzw6vbYtuQ901tT1Nlj6nilmI01OOdeJJSFFTiJ0iHIImw1q34PbawCwsqqjAgYMJjrEOGy08ZaRBs5rbRYM5hpAXnAseIsrfKKsEKmdkKf/d5Vw5Zk/d+Tzdwq3yLzq/DzgQm6YluqUWPdEfP9P5rr0bQw/+XOq9K2yvsndjJWP7tX5fBq4eDT9cfDoXV/+v8bB72sBBk0jijHTB+WrXtrx2dtfJLucnGFF3TC+e8oiY9cFKz9qreZEXuElEeVPr7WH6C4kwqPZei7GwysxyOrBfjmMA0z2UeGaxiHQW+9xCnOMeFNCotShkp1EqR0DOKLyWtfQCLJJPo</latexit>

c

Log-mel spectrogram

<latexit sha1_base64="Zv18Jv5MgC4Mxrz/CC1v3efY/dE=">AAACkXichVE9S8NQFD2N3/WjVRfBpVgUp3Ir4tdUdBFcbGtroYok8amx+SJJC7X4B5zcRJ0UHMSf4OjiH3DoTxDHCi4O3qQBUVFvSN55551zXw5XsXXN9YiaEamjs6u7p7cv2j8wOBSLD48UXavqqKKgWrrllBTZFbpmioKneboo2Y6QDUUXm0plxT/frAnH1Sxzw6vbYtuQ901tT1Nlj6nilmI01OOdeJJSFFTiJ0iHIImw1q34PbawCwsqqjAgYMJjrEOGy08ZaRBs5rbRYM5hpAXnAseIsrfKKsEKmdkKf/d5Vw5Zk/d+Tzdwq3yLzq/DzgQm6YluqUWPdEfP9P5rr0bQw/+XOq9K2yvsndjJWP7tX5fBq4eDT9cfDoXV/+v8bB72sBBk0jijHTB+WrXtrx2dtfJLucnGFF3TC+e8oiY9cFKz9qreZEXuElEeVPr7WH6C4kwqPZei7GwysxyOrBfjmMA0z2UeGaxiHQW+9xCnOMeFNCotShkp1EqR0DOKLyWtfQCLJJPo</latexit>

c

Log-mel spectrogram

<latexit sha1_base64="Zv18Jv5MgC4Mxrz/CC1v3efY/dE=">AAACkXichVE9S8NQFD2N3/WjVRfBpVgUp3Ir4tdUdBFcbGtroYok8amx+SJJC7X4B5zcRJ0UHMSf4OjiH3DoTxDHCi4O3qQBUVFvSN55551zXw5XsXXN9YiaEamjs6u7p7cv2j8wOBSLD48UXavqqKKgWrrllBTZFbpmioKneboo2Y6QDUUXm0plxT/frAnH1Sxzw6vbYtuQ901tT1Nlj6nilmI01OOdeJJSFFTiJ0iHIImw1q34PbawCwsqqjAgYMJjrEOGy08ZaRBs5rbRYM5hpAXnAseIsrfKKsEKmdkKf/d5Vw5Zk/d+Tzdwq3yLzq/DzgQm6YluqUWPdEfP9P5rr0bQw/+XOq9K2yvsndjJWP7tX5fBq4eDT9cfDoXV/+v8bB72sBBk0jijHTB+WrXtrx2dtfJLucnGFF3TC+e8oiY9cFKz9qreZEXuElEeVPr7WH6C4kwqPZei7GwysxyOrBfjmMA0z2UeGaxiHQW+9xCnOMeFNCotShkp1EqR0DOKLyWtfQCLJJPo</latexit>

c

<latexit sha1_base64="OLYUr7p9HaNR3EPcARLNgI3OThY=">AAAC6XichVHLThRRED00PnB8MODGxMRMnGBg4aTGGCGsCBCVhZGHAyQMmXQ3F+hMv9J9ZxLszI4VO1YaXalxoS71D9z4Ay7wD4xLTNy48PQjGiRKdbrr1Ll1qqtuWaHrxFrkoM/oP3X6zNmBc6XzFy5eGiwPDS/HQSeyVcMO3CBatcxYuY6vGtrRrloNI2V6lqtWrPZMer7SVVHsBP4jvROqdc/c8p1NxzY1qVb5WtMz9bZtusm93mhzbqNys/Kbudsba5WrUpPMKsdBvQBVFDYflL+giQ0EsNGBBwUfmtiFiZjPGuoQhOTWkZCLiJzsXKGHErUdZilmmGTb/G4xWitYn3FaM87UNv/i8o2orGBEPssbOZRP8k6+ys9/1kqyGmkvO/RWrlVha3DvytKPE1Uevcb2H9V/FBazT85LZ9PYxEQ2k8MZw4xJp7Vzfffxk8OlycWR5Ia8lG+c84UcyEdO6ne/268X1OJzVk/rz1KV33VE9KDo9SErKjJplN5Wwsw57qhHlPsS11z/e6nHwfKtWv1OTRZuV6emi4UP4CquY5RbHccU7mMeDXawi7d4jw9G29g3nhrP8lSjr9BcxhEzXv0CdaKqJA==</latexit>

G(Id F)

<latexit sha1_base64="OLYUr7p9HaNR3EPcARLNgI3OThY=">AAAC6XichVHLThRRED00PnB8MODGxMRMnGBg4aTGGCGsCBCVhZGHAyQMmXQ3F+hMv9J9ZxLszI4VO1YaXalxoS71D9z4Ay7wD4xLTNy48PQjGiRKdbrr1Ll1qqtuWaHrxFrkoM/oP3X6zNmBc6XzFy5eGiwPDS/HQSeyVcMO3CBatcxYuY6vGtrRrloNI2V6lqtWrPZMer7SVVHsBP4jvROqdc/c8p1NxzY1qVb5WtMz9bZtusm93mhzbqNys/Kbudsba5WrUpPMKsdBvQBVFDYflL+giQ0EsNGBBwUfmtiFiZjPGuoQhOTWkZCLiJzsXKGHErUdZilmmGTb/G4xWitYn3FaM87UNv/i8o2orGBEPssbOZRP8k6+ys9/1kqyGmkvO/RWrlVha3DvytKPE1Uevcb2H9V/FBazT85LZ9PYxEQ2k8MZw4xJp7Vzfffxk8OlycWR5Ia8lG+c84UcyEdO6ne/268X1OJzVk/rz1KV33VE9KDo9SErKjJplN5Wwsw57qhHlPsS11z/e6nHwfKtWv1OTRZuV6emi4UP4CquY5RbHccU7mMeDXawi7d4jw9G29g3nhrP8lSjr9BcxhEzXv0CdaKqJA==</latexit>

G(Id F)

<latexit sha1_base64="OLYUr7p9HaNR3EPcARLNgI3OThY=">AAAC6XichVHLThRRED00PnB8MODGxMRMnGBg4aTGGCGsCBCVhZGHAyQMmXQ3F+hMv9J9ZxLszI4VO1YaXalxoS71D9z4Ay7wD4xLTNy48PQjGiRKdbrr1Ll1qqtuWaHrxFrkoM/oP3X6zNmBc6XzFy5eGiwPDS/HQSeyVcMO3CBatcxYuY6vGtrRrloNI2V6lqtWrPZMer7SVVHsBP4jvROqdc/c8p1NxzY1qVb5WtMz9bZtusm93mhzbqNys/Kbudsba5WrUpPMKsdBvQBVFDYflL+giQ0EsNGBBwUfmtiFiZjPGuoQhOTWkZCLiJzsXKGHErUdZilmmGTb/G4xWitYn3FaM87UNv/i8o2orGBEPssbOZRP8k6+ys9/1kqyGmkvO/RWrlVha3DvytKPE1Uevcb2H9V/FBazT85LZ9PYxEQ2k8MZw4xJp7Vzfffxk8OlycWR5Ia8lG+c84UcyEdO6ne/268X1OJzVk/rz1KV33VE9KDo9SErKjJplN5Wwsw57qhHlPsS11z/e6nHwfKtWv1OTRZuV6emi4UP4CquY5RbHccU7mMeDXawi7d4jw9G29g3nhrP8lSjr9BcxhEzXv0CdaKqJA==</latexit>

G(Id F)

<latexit sha1_base64="Kb+9m5lRalDo8sTB/SjNIFpjXOw=">AAAC8HichVHLahRRED1pX3F8ZNSN4GZwGImgQ7WIiqugLnQh5uEkgUwYujs3ySX9ovvOwNj0D+gHKLiQCC7UlVtduvEHXMQ/EJcR3Ljw9ANEg6aa7jp1bp3qqltu7OvUiOxMWAcOHjp8ZPJo49jxEyenmqdOL6bRMPFUz4v8KFl2nVT5OlQ9o42vluNEOYHrqyV363ZxvjRSSaqj8KEZx2o1cDZCva49x5AaNDvxdN8NsnE+yMxlO2/1A73WqhlzqURefnHQbEtXSmvtBXYN2qhtNmp+QR9riOBhiAAKIQyxDwcpnxXYEMTkVpGRS4h0ea6Qo0HtkFmKGQ7ZLX43GK3UbMi4qJmWao9/8fkmVLbQkc/yWnblk7yVr/Lzn7WyskbRy5jerbQqHkw9PrvwY19VQG+w+Vv1H4XL7P3zitkM1nGjnElzxrhkimm9Sj969HR34eZ8J7sgL+Ub59yWHfnIScPRd+/VnJp/zupF/TtUVXedEN2ve33AiopMERW3lTHzHneUE1W+wTXbfy91L1i80rWvdWXuanvmVr3wSZzDeUxzq9cxg7uYRY8dPME7vMcHK7GeWS+s7SrVmqg1Z/CHWW9+Ad7OrVc=</latexit>

p(yt1|yt,c)

<latexit sha1_base64="Kb+9m5lRalDo8sTB/SjNIFpjXOw=">AAAC8HichVHLahRRED1pX3F8ZNSN4GZwGImgQ7WIiqugLnQh5uEkgUwYujs3ySX9ovvOwNj0D+gHKLiQCC7UlVtduvEHXMQ/EJcR3Ljw9ANEg6aa7jp1bp3qqltu7OvUiOxMWAcOHjp8ZPJo49jxEyenmqdOL6bRMPFUz4v8KFl2nVT5OlQ9o42vluNEOYHrqyV363ZxvjRSSaqj8KEZx2o1cDZCva49x5AaNDvxdN8NsnE+yMxlO2/1A73WqhlzqURefnHQbEtXSmvtBXYN2qhtNmp+QR9riOBhiAAKIQyxDwcpnxXYEMTkVpGRS4h0ea6Qo0HtkFmKGQ7ZLX43GK3UbMi4qJmWao9/8fkmVLbQkc/yWnblk7yVr/Lzn7WyskbRy5jerbQqHkw9PrvwY19VQG+w+Vv1H4XL7P3zitkM1nGjnElzxrhkimm9Sj969HR34eZ8J7sgL+Ub59yWHfnIScPRd+/VnJp/zupF/TtUVXedEN2ve33AiopMERW3lTHzHneUE1W+wTXbfy91L1i80rWvdWXuanvmVr3wSZzDeUxzq9cxg7uYRY8dPME7vMcHK7GeWS+s7SrVmqg1Z/CHWW9+Ad7OrVc=</latexit>

p(yt1|yt,c)

<latexit sha1_base64="Kb+9m5lRalDo8sTB/SjNIFpjXOw=">AAAC8HichVHLahRRED1pX3F8ZNSN4GZwGImgQ7WIiqugLnQh5uEkgUwYujs3ySX9ovvOwNj0D+gHKLiQCC7UlVtduvEHXMQ/EJcR3Ljw9ANEg6aa7jp1bp3qqltu7OvUiOxMWAcOHjp8ZPJo49jxEyenmqdOL6bRMPFUz4v8KFl2nVT5OlQ9o42vluNEOYHrqyV363ZxvjRSSaqj8KEZx2o1cDZCva49x5AaNDvxdN8NsnE+yMxlO2/1A73WqhlzqURefnHQbEtXSmvtBXYN2qhtNmp+QR9riOBhiAAKIQyxDwcpnxXYEMTkVpGRS4h0ea6Qo0HtkFmKGQ7ZLX43GK3UbMi4qJmWao9/8fkmVLbQkc/yWnblk7yVr/Lzn7WyskbRy5jerbQqHkw9PrvwY19VQG+w+Vv1H4XL7P3zitkM1nGjnElzxrhkimm9Sj969HR34eZ8J7sgL+Ub59yWHfnIScPRd+/VnJp/zupF/TtUVXedEN2ve33AiopMERW3lTHzHneUE1W+wTXbfy91L1i80rWvdWXuanvmVr3wSZzDeUxzq9cxg7uYRY8dPME7vMcHK7GeWS+s7SrVmqg1Z/CHWW9+Ad7OrVc=</latexit>

p(yt1|yt,c)

Fig. 1. Overview of (a) DDPM, (b) GAN-based model, and (c) proposed WaveFit. (a) DDPM is an iterative-style model, where sampling from the posterior is realized by adding noise to the denoised intermediate signals. (b) GAN-based models predict $\mathbf{y}_0$ by a non-iterative DNN $\mathcal{F}$, which is trained to minimize an adversarial loss calculated from $\mathbf{y}_0$ and the target speech $\mathbf{x}_0$. (c) The proposed WaveFit is an iterative-style model that does not add noise at each iteration, and $\mathcal{F}$ is trained to minimize an adversarial loss calculated from all intermediate signals $\mathbf{y}_{T-1}, \dots, \mathbf{y}_0$, where $\mathrm{Id}$ and $\mathcal{G}$ denote the identity operator and a gain adjustment operator, respectively.

Since a DDPM-based neural vocoder iteratively refines a speech waveform, there is a trade-off between its sound quality and computational cost [42]; i.e., tens of iterations are required to achieve a high-fidelity speech waveform. To reduce the number of iterations while maintaining quality, existing studies of DDPMs have investigated the inference noise schedule [44], the use of an adaptive prior [45, 46], the network architecture [47, 48], and/or the training strategy [49]. However, generating a speech waveform with quality comparable to human natural speech in a few iterations is still challenging.

Recent studies demonstrated that the essence of DDPMs and GANs can coexist [50, 51]. Denoising diffusion GANs [50] use a generator to predict a clean sample from a diffused one, and a discriminator to differentiate the diffused samples from the clean or predicted ones. This strategy was applied to TTS, specifically to predict a log-mel spectrogram given an input text [51]. As DDPMs and GANs can be combined in several different ways, there may be a new combination that achieves high-quality synthesis with a small number of iterations.

This study proposes WaveFit, an iterative-style non-AR neural vocoder trained using a GAN-based loss, as illustrated in Fig. 1 (c). It is inspired by the theory of fixed-point iteration [52]. The proposed model iteratively applies a DNN as a denoising mapping that removes noise components from an input signal so that the output becomes closer to the target speech. We use a loss that combines a GAN-based loss [34] and a short-time Fourier transform (STFT)-based loss [35], because this combination is insensitive to imperceptible phase differences. By combining the losses for all iterations, the intermediate output signals are encouraged to approach the target speech as the iterations proceed. Subjective listening tests showed that WaveFit can generate speech waveforms whose quality is better than that of conventional DDPM models. The experiments also showed that the audio quality of speech synthesized by WaveFit with five iterations is comparable to that of WaveRNN [27] and human natural speech.

arXiv:2210.01029v1 [eess.AS] 3 Oct 2022
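The iteration described above can be illustrated with a toy loop. This is a minimal sketch under loudly stated assumptions, not the authors' implementation: `oracle_denoiser` is a hypothetical stand-in for the trained DNN F that cheats by looking at the target (a real F infers the noise from y_t and c), `gain_adjust` is a hypothetical version of the gain operator G from Fig. 1 that rescales a signal to a reference power (taken here from the target rather than from c), and a mean-squared error replaces the GAN and STFT losses. It shows the update y_{t−1} = G(y_t − F(y_t)) and one loss term recorded per intermediate output.

```python
import math


def oracle_denoiser(y, target, strength=0.5):
    """Hypothetical stand-in for the DNN F: returns an estimate of the noise
    component of y. It peeks at the target, which only makes sense in a toy;
    a trained F would infer the noise from y and the conditioning c."""
    return [strength * (yi - xi) for yi, xi in zip(y, target)]


def gain_adjust(y, power_ref):
    """Hypothetical stand-in for the gain operator G: rescale y so that its
    mean power equals power_ref (left unchanged if y is all zeros)."""
    power = sum(v * v for v in y) / len(y)
    if power == 0.0:
        return list(y)
    scale = math.sqrt(power_ref / power)
    return [scale * v for v in y]


def mse(a, b):
    """Placeholder per-iteration loss (the paper combines GAN and STFT losses)."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)


def wavefit_style_iterations(y_T, target, num_iters):
    """Apply y_{t-1} = G(y_t - F(y_t)) num_iters times and record one loss
    for each intermediate output y_{T-1}, ..., y_0."""
    power_ref = sum(v * v for v in target) / len(target)  # assumption: from target
    y = list(y_T)
    losses = []
    for _ in range(num_iters):
        noise_estimate = oracle_denoiser(y, target)
        y = gain_adjust([yi - ni for yi, ni in zip(y, noise_estimate)], power_ref)
        losses.append(mse(y, target))
    # During training, the total loss would be the sum of all entries in `losses`.
    return y, losses


if __name__ == "__main__":
    x0 = [1.0, -1.0, 1.0, -1.0]
    y_T = [3.0, 1.0, -1.0, -3.0]
    y0, losses = wavefit_style_iterations(y_T, x0, num_iters=3)
    print([round(v, 4) for v in losses])
```

Because every intermediate output contributes a loss term, the earlier iterations are also pushed toward the target, which is the behavior the paragraph attributes to combining the losses for all iterations.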

2. NON-AUTOREGRESSIVE NEURAL VOCODERS

A neural vocoder generates a speech waveform $\mathbf{y}_0 \in \mathbb{R}^D$ given a log-mel spectrogram $\mathbf{c} = (\mathbf{c}_1, \dots, \mathbf{c}_K) \in \mathbb{R}^{FK}$, where $\mathbf{c}_k \in \mathbb{R}^F$ is an $F$-point log-mel spectrum at the $k$-th time frame and $K$ is the number of time frames. The goal is to develop a neural vocoder that generates $\mathbf{y}_0$ indistinguishable from the target speech $\mathbf{x}_0 \in \mathbb{R}^D$ with less computation. This section briefly reviews two types of neural vocoders: DDPM-based and GAN-based ones.

2.1. DDPM-based neural vocoder

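A DDPM-based neural vocoder is a latent variable model of $\mathbf{x}_0$ as $q(\mathbf{x}_0 \mid \mathbf{c})$ based on a $T$-step Markov chain of $\mathbf{x}_t \in \mathbb{R}^D$ with learned Gaussian transitions, starting from $q(\mathbf{x}_T) = \mathcal{N}(\mathbf{0}, \mathbf{I})$, defined as

$$q(\mathbf{x}_0 \mid \mathbf{c}) = \int_{\mathbb{R}^{DT}} q(\mathbf{x}_T) \prod_{t=1}^{T} q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{c}) \, d\mathbf{x}_1 \cdots d\mathbf{x}_T. \tag{1}$$

By modeling $q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{c})$, sampling $\mathbf{y}_0 \sim q(\mathbf{x}_0 \mid \mathbf{c})$ can be realized as a recursive sampling of $\mathbf{y}_{t-1}$ from $q(\mathbf{y}_{t-1} \mid \mathbf{y}_t, \mathbf{c})$.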

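In a DDPM-based neural vocoder, $\mathbf{x}_t$ is generated by the diffusion process that gradually adds Gaussian noise to the waveform according to a noise schedule $\{\beta_1, \dots, \beta_T\}$ given by $p(\mathbf{x}_t \mid \mathbf{x}_{t-1}) = \mathcal{N}\left(\sqrt{1-\beta_t}\,\mathbf{x}_{t-1}, \beta_t \mathbf{I}\right)$. This formulation enables us to sample $\mathbf{x}_t$ at an arbitrary timestep $t$ in closed form as $\mathbf{x}_t = \sqrt{\bar{\alpha}_t}\,\mathbf{x}_0 + \sqrt{1-\bar{\alpha}_t}\,\boldsymbol{\epsilon}$, where $\alpha_t = 1 - \beta_t$, $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$, and $\boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$. As proposed by Ho et al. [53], DDPM-based neural vocoders use a DNN $\mathcal{F}$ with parameters $\theta$ to predict $\boldsymbol{\epsilon}$ from $\mathbf{x}_t$ as $\hat{\boldsymbol{\epsilon}} = \mathcal{F}_\theta(\mathbf{x}_t, \mathbf{c}, \beta_t)$. The DNN $\mathcal{F}$ can be trained by maximizing the evidence lower bound (ELBO), though most DDPM-based neural vocoders use a simplified loss function that omits the loss weights corresponding to iteration $t$:

$$\mathcal{L}^{\mathrm{WG}} = \left\| \boldsymbol{\epsilon} - \mathcal{F}_\theta(\mathbf{x}_t, \mathbf{c}, \beta_t) \right\|_2^2, \tag{2}$$

where $\|\cdot\|_p$ denotes the $\ell_p$ norm. Then, if $\beta_t$ is small enough, $q(\mathbf{x}_{t-1} \mid \mathbf{x}_t, \mathbf{c})$ can be given by $\mathcal{N}(\boldsymbol{\mu}_t, \gamma_t \mathbf{I})$, and the recursive sampling from $q(\mathbf{y}_{t-1} \mid \mathbf{y}_t, \mathbf{c})$ can be realized by iterating the following formula for $t = T, \dots, 1$:

$$\mathbf{y}_{t-1} = \frac{1}{\sqrt{\alpha_t}}\left( \mathbf{y}_t - \frac{\beta_t}{\sqrt{1-\bar{\alpha}_t}}\, \mathcal{F}_\theta(\mathbf{y}_t, \mathbf{c}, \beta_t) \right) + \gamma_t \boldsymbol{\epsilon}, \tag{3}$$

where $\gamma_t = \frac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t}\beta_t$, $\mathbf{y}_T \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$, and $\gamma_1 = 0$.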
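To make Eqs. (2) and (3) concrete, here is a minimal pure-Python sketch of a noise schedule, the closed-form forward sampling of x_t, the simplified loss, and one reverse step. The linear schedule and its endpoints (`beta_start`, `beta_end`), the function names, and the `predict_noise` callable standing in for F_θ are illustrative assumptions. One interpretive choice is flagged: because the text states that the reverse transition is N(μ_t, γ_t I), the sketch treats γ_t as a variance and scales the injected noise by √γ_t (the usual DDPM convention) rather than by γ_t as Eq. (3) is written.

```python
import math
import random
from typing import Callable, List, Tuple

Signal = List[float]


def linear_beta_schedule(T: int, beta_start: float = 1e-4, beta_end: float = 0.05) -> List[float]:
    """Illustrative linear schedule beta_1..beta_T (the endpoints are assumptions)."""
    if T == 1:
        return [beta_start]
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]


def alpha_bars(betas: List[float]) -> List[float]:
    """bar_alpha_t = prod_{s=1}^{t} (1 - beta_s), returned for t = 1..T."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def diffuse(x0: Signal, t: int, betas: List[float], rng: random.Random) -> Tuple[Signal, Signal]:
    """Closed form x_t = sqrt(abar_t) x0 + sqrt(1 - abar_t) eps, with t 1-indexed."""
    abar_t = alpha_bars(betas)[t - 1]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(abar_t) * x + math.sqrt(1.0 - abar_t) * e for x, e in zip(x0, eps)]
    return x_t, eps


def simplified_loss(eps: Signal, eps_hat: Signal) -> float:
    """Eq. (2): squared L2 distance between the true and predicted noise."""
    return sum((e - h) ** 2 for e, h in zip(eps, eps_hat))


def reverse_step(y_t: Signal, t: int, betas: List[float], eps_hat: Signal,
                 rng: random.Random) -> Signal:
    """One step of Eq. (3); eps_hat plays the role of F_theta(y_t, c, beta_t)."""
    abars = alpha_bars(betas)
    beta_t, abar_t = betas[t - 1], abars[t - 1]
    abar_prev = abars[t - 2] if t > 1 else 1.0  # abar_0 = 1, hence gamma_1 = 0
    gamma_t = (1.0 - abar_prev) / (1.0 - abar_t) * beta_t
    coef = beta_t / math.sqrt(1.0 - abar_t)
    mean = [(y - coef * e) / math.sqrt(1.0 - beta_t) for y, e in zip(y_t, eps_hat)]
    std = math.sqrt(gamma_t)  # gamma_t read as a variance (see the lead-in)
    return [m + std * rng.gauss(0.0, 1.0) for m in mean]


def sample(T: int, D: int, predict_noise: Callable[[Signal, int], Signal], seed: int = 0) -> Signal:
    """Run t = T, ..., 1 starting from y_T ~ N(0, I); predict_noise is hypothetical."""
    rng = random.Random(seed)
    betas = linear_beta_schedule(T)
    y = [rng.gauss(0.0, 1.0) for _ in range(D)]
    for t in range(T, 0, -1):
        y = reverse_step(y, t, betas, predict_noise(y, t), rng)
    return y
```

A useful sanity check of the algebra: at t = 1 with a perfect noise prediction, the reverse step removes exactly the noise that `diffuse` added, since β_1/√(1 − ᾱ_1) = √β_1 and γ_1 = 0.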

The first DDPM-based neural vocoders [42, 43] required over 200 iterations to match AR neural vocoders [26, 27] in naturalness measured by mean opinion score (MOS). To reduce the number of iterations while maintaining the quality, existing studies have investigated the use of noise prior distributions [45, 46] and/or better inference noise schedules [44].

2.1.1. Prior adaptation from conditioning log-mel spectrogram

To reduce the number of iterations in inference, PriorGrad [45] and SpecGrad [46] introduced an adaptive prior $\mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma})$, where $\boldsymbol{\Sigma}$ is computed from $\mathbf{c}$. The use of an adaptive prior decreases the lower bound of the ELBO and accelerates both training and inference [45].

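SpecGrad [46] uses the fact that $\boldsymbol{\Sigma}$ is positive semi-definite and can be decomposed as $\boldsymbol{\Sigma} = \mathbf{L}\mathbf{L}^{\top}$, where $\mathbf{L} \in \mathbb{R}^{D \times D}$ and $^{\top}$ denotes the transpose. Then, sampling from $\mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma})$ can be written as $\boldsymbol{\epsilon} = \mathbf{L}\tilde{\boldsymbol{\epsilon}}$ using $\tilde{\boldsymbol{\epsilon}} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$, and Eq. (2) with an adaptive prior becomes

$$\mathcal{L}^{\mathrm{SG}} = \left\| \mathbf{L}^{-1}\left( \boldsymbol{\epsilon} - \mathcal{F}_\theta(\mathbf{x}_t, \mathbf{c}, \beta_t) \right) \right\|_2^2. \tag{4}$$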
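As a sketch of how the whitening in Eq. (4) behaves, the code below assumes a diagonal Σ, so that L is simply the element-wise square root of its diagonal. This is a deliberate simplification: SpecGrad's L is the T-F filter G⁺MG described in the following paragraph, not a diagonal matrix, and the function names are hypothetical. What it shows is that L⁻¹ rescales the prediction error, so an error in a high-variance component costs less than an equally sized error in a low-variance one.

```python
import math
import random
from typing import List


def sample_adaptive_prior(sigma_diag: List[float], rng: random.Random) -> List[float]:
    """eps = L eps_tilde with L = diag(sqrt(sigma_diag)) and eps_tilde ~ N(0, I)."""
    return [math.sqrt(s) * rng.gauss(0.0, 1.0) for s in sigma_diag]


def adaptive_prior_loss(eps: List[float], eps_hat: List[float],
                        sigma_diag: List[float]) -> float:
    """Eq. (4) for a diagonal L: || L^{-1} (eps - eps_hat) ||_2^2."""
    return sum(((e - h) / math.sqrt(s)) ** 2
               for e, h, s in zip(eps, eps_hat, sigma_diag))


if __name__ == "__main__":
    sigma = [4.0, 0.25]  # one high-variance and one low-variance component
    # The same unit error is cheap where the prior variance is large...
    print(adaptive_prior_loss([1.0, 0.0], [0.0, 0.0], sigma))
    # ...and expensive where it is small.
    print(adaptive_prior_loss([0.0, 1.0], [0.0, 0.0], sigma))
```

With all diagonal entries equal to 1, the loss reduces to the plain squared error of Eq. (2).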

SpecGrad [46] defines $\mathbf{L} = \mathbf{G}^{+}\mathbf{M}\mathbf{G}$ and approximates $\mathbf{L}^{-1} \approx \mathbf{G}^{+}\mathbf{M}^{-1}\mathbf{G}$. Here, the $NK \times D$ matrix $\mathbf{G}$ represents the STFT, $\mathbf{M} = \mathrm{diag}[(m_{1,1}, \dots, m_{N,K})] \in \mathbb{C}^{NK \times NK}$ is the diagonal matrix representing the filter coefficients for each $(n, k)$-th time-frequency (T-F) bin, and $\mathbf{G}^{+}$ is the matrix representation of the inverse STFT (iSTFT) using a dual window. This means that $\mathbf{L}$ and $\mathbf{L}^{-1}$ are implemented as time-varying filters and their approximate inverse filters in the T-F domain, respectively. The T-F domain filter $\mathbf{M}$ is obtained from the spectral envelope calculated from $\mathbf{c}$, with a minimum-phase response. The spectral envelope is obtained by applying a 24th-order lifter to the power spectrogram calculated from $\mathbf{c}$.
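The liftering step can be sketched as follows, assuming that a single frame's power spectrum (full-length and conjugate-symmetric, with N bins) has already been derived from c; how SpecGrad maps the log-mel spectrogram back to a power spectrogram, and its minimum-phase filter design, are not reproduced here. The sketch computes the real cepstrum of the log power spectrum, keeps quefrencies up to the lifter order (24 by default, matching the text) on both sides, and transforms back. The helper names are hypothetical, and a naive O(N²) DFT is used only to stay within the standard library; a practical implementation would use an FFT.

```python
import cmath
import math
from typing import List, Sequence


def dft(x: Sequence[complex]) -> List[complex]:
    """Naive forward DFT: X[k] = sum_m x[m] exp(-2j pi k m / N)."""
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
            for k in range(n)]


def idft(X: Sequence[complex]) -> List[complex]:
    """Naive inverse DFT: x[m] = (1/N) sum_k X[k] exp(2j pi k m / N)."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * m / n) for k in range(n)) / n
            for m in range(n)]


def lifter_envelope(power_spectrum: Sequence[float], order: int = 24,
                    floor: float = 1e-10) -> List[float]:
    """Spectral envelope by cepstral liftering of one full-length power spectrum.

    Quefrencies q <= order and q >= N - order (the mirrored half) are kept;
    everything in between, i.e. fine harmonic structure, is set to zero.
    """
    n = len(power_spectrum)
    log_power = [math.log(max(p, floor)) for p in power_spectrum]
    cepstrum = idft(log_power)
    liftered = [c if (q <= order or q >= n - order) else 0.0
                for q, c in enumerate(cepstrum)]
    smoothed_log = dft(liftered)
    # The input is real and symmetric, so the imaginary parts are rounding noise.
    return [math.exp(v.real) for v in smoothed_log]
```

For example, a 64-bin spectrum whose log is a slow cosine tilt plus a ripple that alternates sign every bin keeps the tilt and loses the ripple, because the ripple sits at quefrency 32, above the lifter order of 24.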

2.1.2. InferGrad

In conventional DDPM-based models, the DNNs are trained as Gaussian denoisers using a simplified loss function such as Eq. (2), so there is no guarantee that the generated speech becomes close to the target speech. To solve this problem, InferGrad [49] synthesizes $\mathbf{y}_0$ from a random signal via Eq. (3) in every training step, then additionally minimizes an infer loss $\mathcal{L}^{\mathrm{IF}}$ that represents the gap between the generated speech $\mathbf{y}_0$ and the target speech $\mathbf{x}_0$. The loss function for InferGrad is given as

$$\mathcal{L}^{\mathrm{IG}} = \mathcal{L}^{\mathrm{WG}} + \lambda_{\mathrm{IF}} \mathcal{L}^{\mathrm{IF}}, \tag{5}$$

where $\lambda_{\mathrm{IF}} > 0$ is a tunable weight for the infer loss.
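Eq. (5) itself is a weighted sum; the costly part is that y_0 must be produced by running the full reverse process inside each training step. The sketch below makes that dependency explicit by taking the sampler as a callable. The mean absolute error used for the infer loss is a hypothetical placeholder (this section does not specify InferGrad's actual infer loss), and all names are illustrative.

```python
from typing import Callable, List

Signal = List[float]


def mean_absolute_error(a: Signal, b: Signal) -> float:
    """Hypothetical stand-in for the infer loss L^IF; InferGrad's choice may differ."""
    return sum(abs(ai - bi) for ai, bi in zip(a, b)) / len(a)


def infergrad_objective(l_wg: float, sampler: Callable[[], Signal], x0: Signal,
                        lambda_if: float,
                        infer_loss: Callable[[Signal, Signal], float] = mean_absolute_error) -> float:
    """Eq. (5): L^IG = L^WG + lambda_IF * L^IF(y0, x0).

    `sampler` is assumed to run the whole reverse chain of Eq. (3) from a random
    signal and return y0, which is what makes each training step expensive.
    """
    if lambda_if <= 0:
        raise ValueError("lambda_IF must be positive")
    y0 = sampler()
    return l_wg + lambda_if * infer_loss(y0, x0)
```

In a real training loop, gradients of the infer term would have to flow through every call to the denoiser inside `sampler`, which this framework-free sketch does not model.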

2.2. GAN-based neural vocoder

Another popular approach for non-AR neural vocoders is to adopt adversarial training: a neural vocoder is trained to generate a speech waveform that discriminators cannot distinguish from the target speech, and the discriminators are trained to differentiate between target and generated speech. In GAN-based models, a non-AR DNN $\mathcal{F}: \mathbb{R}^{FK} \to \mathbb{R}^D$ directly outputs $\mathbf{y}_0$ from $\mathbf{c}$ as $\mathbf{y}_0 = \mathcal{F}_\theta(\mathbf{c})$.

One main research topic for GAN-based models is the design of loss functions. Recent models often use multiple discriminators at multiple resolutions [34]. One of the pioneering works using multiple discriminators is MelGAN [34], which proposed the multi-scale discriminator (MSD). In addition, MelGAN uses a feature matching loss that minimizes the mean absolute error (MAE) between the discriminator feature maps of target and generated speech. The loss functions of the generator $\mathcal{L}^{\mathrm{GAN}}_{\mathrm{Gen}}$ and the discriminator $\mathcal{L}^{\mathrm{GAN}}_{\mathrm{Dis}}$ of the GAN-based neural vocoder are given as follows:

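$$\mathcal{L}^{\mathrm{GAN}}_{\mathrm{Gen}} = \frac{1}{R_{\mathrm{GAN}}} \sum_{r=1}^{R_{\mathrm{GAN}}} \left( -\mathcal{D}_r(\mathbf{y}_0) + \lambda_{\mathrm{FM}} \mathcal{L}^{\mathrm{FM}}_r(\mathbf{x}_0, \mathbf{y}_0) \right), \tag{6}$$

$$\mathcal{L}^{\mathrm{GAN}}_{\mathrm{Dis}} = \frac{1}{R_{\mathrm{GAN}}} \sum_{r=1}^{R_{\mathrm{GAN}}} \left( \max\left(0, 1 - \mathcal{D}_r(\mathbf{x}_0)\right) + \max\left(0, 1 + \mathcal{D}_r(\mathbf{y}_0)\right) \right), \tag{7}$$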
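A minimal sketch of Eqs. (6) and (7), assuming each discriminator D_r returns a single scalar score (in practice scores are usually averaged over output frames) and that the feature matching term L^FM_r averages the MAE over the layers of D_r; that per-layer weighting is an assumption, and the function names are hypothetical. Discriminator outputs and feature maps are passed in as plain numbers and lists, so no deep learning framework is needed.

```python
from typing import List, Sequence


def mae(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute error between two equally sized feature maps."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def feature_matching_loss(feats_real: List[List[float]],
                          feats_fake: List[List[float]]) -> float:
    """L^FM_r: MAE between D_r's feature maps for x0 and y0, averaged over layers."""
    return sum(mae(fr, ff) for fr, ff in zip(feats_real, feats_fake)) / len(feats_real)


def generator_loss(d_fake: List[float], fm_losses: List[float], lambda_fm: float) -> float:
    """Eq. (6): d_fake[r] = D_r(y0) and fm_losses[r] = L^FM_r(x0, y0)."""
    R = len(d_fake)
    return sum(-d + lambda_fm * fm for d, fm in zip(d_fake, fm_losses)) / R


def discriminator_loss(d_real: List[float], d_fake: List[float]) -> float:
    """Eq. (7): hinge loss averaged over the R discriminators."""
    R = len(d_real)
    return sum(max(0.0, 1.0 - dr) + max(0.0, 1.0 + df)
               for dr, df in zip(d_real, d_fake)) / R
```

For example, `discriminator_loss([1.5, 0.2], [-2.0, 0.5])` evaluates to approximately 1.15: the first discriminator already separates real and generated speech beyond the hinge margin and contributes nothing, while the second contributes 0.8 + 1.5.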
