Unsupervised Few-shot Learning via Deep Laplacian Eigenmaps

Kuilin Chen, University of Toronto, kuilin.chen@mail.utoronto.ca
Chi-Guhn Lee, University of Toronto, cglee@mie.utoronto.ca

Abstract

Learning a new task from a handful of examples remains an open challenge in machine learning. Despite the recent progress in few-shot learning, most method...

相关推荐

-

2018年全国硕士研究生招生考试考研英语一真题VIP免费

2024-12-02 3

2024-12-02 3 -

2017年全国硕士研究生招生考试考研英语一真题VIP免费

2024-12-02 3

2024-12-02 3 -

2016年全国硕士研究生招生考试考研英语一真题VIP免费

2024-12-02 3

2024-12-02 3 -

2015年全国硕士研究生招生考试考研英语一真题VIP免费

2024-12-02 3

2024-12-02 3 -

2014年全国硕士研究生招生考试考研英语一真题VIP免费

2024-12-02 3

2024-12-02 3 -

2013年全国硕士研究生招生考试考研英语一真题VIP免费

2024-12-02 6

2024-12-02 6 -

2012年全国硕士研究生招生考试考研英语一真题VIP免费

2024-12-02 4

2024-12-02 4 -

2010年全国硕士研究生招生考试考研英语一真题VIP免费

2024-12-02 16

2024-12-02 16 -

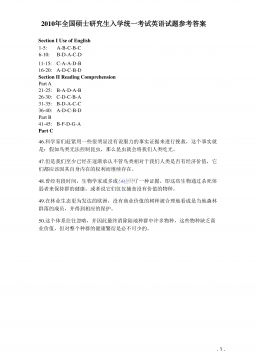

2010-2020全国硕士研究生入学统一考试英语 考研英语一答案VIP免费

2024-12-02 7

2024-12-02 7 -

2011年全国硕士研究生招生考试考研英语一真题VIP免费

2024-12-02 10

2024-12-02 10

