SPABERT: A Pretrained Language Model from Geographic Data for Geo-Entity Representation

Zekun Li1, Jina Kim1, Yao-Yi Chiang1, Muhao Chen2

1Department of Computer Science and Engineering, University of Minnesota, Twin Cities

2Department of Computer Science, University of Southern California

{li002666,kim01479,yaoyi}@umn.edu, muhaoche@usc.edu

Abstract

Named geographic entities (geo-entities for short) are the building blocks of many geographic datasets. Characterizing geo-entities is integral to various application domains, such as geo-intelligence and map comprehension, while a key challenge is to capture the spatially varying context of an entity. We hypothesize that we shall know the characteristics of a geo-entity by its surrounding entities, similar to knowing word meanings by their linguistic context. Accordingly, we propose a novel spatial language model, SPABERT, which provides a general-purpose geo-entity representation based on neighboring entities in geospatial data. SPABERT extends BERT to capture linearized spatial context, while incorporating a spatial coordinate embedding mechanism to preserve spatial relations of entities in the 2-dimensional space. SPABERT is pretrained with masked language modeling and masked entity prediction tasks to learn spatial dependencies. We apply SPABERT to two downstream tasks: geo-entity typing and geo-entity linking. Compared with the existing language models that do not use spatial context, SPABERT shows significant performance improvement on both tasks. We also analyze the entity representation from SPABERT in various settings and the effect of spatial coordinate embedding.

1 Introduction

Interpreting human behaviors requires considering human activities and their surrounding environment. Looking at a stopping location, [Speedway, 📍],¹ from a person’s trajectory, we might assume that this person needs to use the location’s amenities if Speedway implies a gas station, and 📍 is near a highway exit. We might predict a meetup at [Speedway, 📍] if the trajectory travels through many other locations, 📍, 📍, ..., of the same name, Speedway, to arrive at [Speedway, 📍] in the middle of farmlands. As humans, we are able to make such inferences using the name of a geographic entity (geo-entity) and other entities in a spatial neighborhood. Specifically, we contextualize a geo-entity by a reasonable surrounding neighborhood learned from experience and, from the neighborhood, relate other relevant geo-entities based on their name and spatial relations (e.g., distance) to the geo-entity. This way, even if two gas stations have the same name (e.g., Speedway) and entity type (e.g., ‘gas station’), we can still reason about their spatially varying semantics and use the semantics for prediction.

¹ A geographic entity name Speedway and its location 📍 (e.g., latitude and longitude). Best viewed in color.

Capturing these spatially varying location semantics can help recognize and resolve geospatial concepts (e.g., toponym detection, typing, and linking) and ground geo-entities in documents, scanned historical maps, and a variety of knowledge bases, such as Wikidata, OpenStreetMap, and GeoNames. Also, the location semantics can support effective use of spatial textual information (geo-entity names) in many spatial computing tasks, including moving behavior detection from visiting locations of trajectories (Yue et al., 2021, 2019), point of interest recommendations (Yin et al., 2017; Zhao et al., 2022), air quality (Lin et al., 2017, 2018, 2020; Jiang et al., 2019) and traffic prediction (Yuan and Li, 2021; Gao et al., 2019) using location context.

Recently, the research community has seen a

rapid advancement in pretrained language mod-

els (PLMs) (Devlin et al.,2019;Liu et al.,2019;

Lewis et al.,2020;Sanh et al.,2019), which sup-

ports strong contextualized language representa-

tion abilities (Lan et al.,2020) and serves as the

backbones of various NLP systems (Rothe et al.,

2020;Yang et al.,2019). The extensions of these

PLMs help NL tasks in different data domains (e.g.,

biomedicine (Lee et al.,2020;Phan et al.,2021),

software engineering (Tabassum et al.,2020), fi-

arXiv:2210.12213v1 [cs.CL] 21 Oct 2022

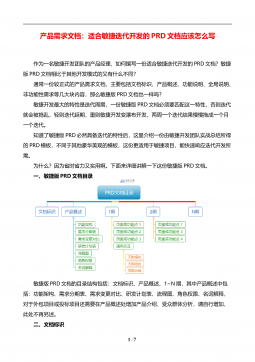

Figure 1: Overview for generating the pivot entity representation, Ep( : pivot entity; : neighboring geo-entities).

SPABERT sorts and concatenate neighboring geo-entities by their distance to pivot in ascending order to form

a pseudo-sentence. [CLS] is prepended at the beginning. [SEP] separates entities. SPABERT generates token

representations and aggregates representations of pivot tokens to produce Ep.

nance (Liu et al.,2021)) and modalities (e.g., tables

(Herzig et al.,2020;Yin et al.,2020;Wang et al.,

2022) and images (Li et al.,2020a;Su et al.,2019)).

However, it is challenging to adopt existing PLMs

or their extensions to capture geo-entities’ spatially

varying semantics. First, geo-entities exist in the

physical world. Their spatial relations (i.e., dis-

tance and orientation) do not have a fixed structure

(e.g., within a table or along a row of a table) that

can help contextualization. Second, existing lan-

guage models (LMs) pretrained on general domain

corpora (Devlin et al.,2019;Liu et al.,2019) re-

quire fine-tuning for domain adaptation to handle

names of geo-entities.

To tackle these challenges, we present SPABERT, an LM that captures the spatially varying semantics of geo-entity names using large geographic datasets for entity representation. Built upon BERT (Devlin et al., 2019), SPABERT generates the contextualized representation for a geo-entity of interest (referred to as the pivot), based on its geographically nearby geo-entities. Specifically, SPABERT linearizes the 2-dimensional spatial context by forming pseudo sentences that consist of names of the pivot and neighboring geo-entities, ordered by their spatial distance to the pivot. SPABERT also encodes the spatial relations between the pivot and neighboring geo-entities with a continuous spatial coordinate embedding. The spatial coordinate embedding models horizontal and vertical distance relations separately and, in turn, can capture the orientation relations. These techniques make the inputs compatible with the BERT-family structures.

In addition, the backbone LM, BERT, in SPABERT is pretrained with general-purpose corpora and would not work well directly on geo-entity names because of the domain shift. We thus train SPABERT using pseudo sentences generated from large geographic datasets derived from OpenStreetMap (OSM).² This pretraining process conducts Masked Language Modeling (MLM) and Masked Entity Prediction (MEP), which randomly mask subtokens and full geo-entity names in the pseudo sentences, respectively. MLM and MEP enable SPABERT to learn from pseudo sentences for generating spatially varying contextualized representations for geo-entities.
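The difference between the two masking schemes can be illustrated with a minimal Python sketch. It assumes a pseudo sentence has already been split into per-entity lists of subtokens. The function names, the `[MASK]` placeholder handling, and the masking probability are illustrative assumptions, not details stated in this section.

```python
import random

MASK = "[MASK]"  # assumed placeholder token


def mlm_mask(entities, prob, rng):
    """MLM-style masking: each subtoken is masked independently."""
    return [[MASK if rng.random() < prob else tok for tok in ent]
            for ent in entities]


def mep_mask(entities, prob, rng):
    """MEP-style masking: all subtokens of a selected entity are masked together."""
    return [[MASK] * len(ent) if rng.random() < prob else list(ent)
            for ent in entities]


# Hypothetical pre-tokenized pseudo sentence (special tokens omitted).
entities = [["university", "of", "minnesota"], ["minneapolis"], ["bell", "museum"]]
rng = random.Random(0)
mlm_example = mlm_mask(entities, 0.15, rng)  # 0.15 is an assumed rate
mep_example = mep_mask(entities, 0.15, rng)
```

In this sketch, MLM may hide part of a name, while MEP hides a whole name at once, so the model must rely on the surrounding entities to recover it.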

SPABERT provides a general-purpose representation for geo-entities based on their spatial context. Similar to linguistic context, spatial context refers to the surrounding environment of a geo-entity. For example, three entities named Speedway at different locations, [Speedway, 📍], [Speedway, 📍], and [Speedway, 📍], would have different representations since the surrounding environments of the three locations could vary. We evaluate SPABERT on two tasks: 1) geo-entity typing and 2) geo-entity linking to external knowledge bases. Our analysis includes the performance comparison of SPABERT in various settings due to characteristics of geographic data sources (e.g., entity omission).

To summarize, this work has the following contributions. We propose an approach to linearize the 2-dimensional spatial context, encode the geo-entity spatial relations, and use an LM to produce spatially varying feature representations of geo-entities. We show that SPABERT is a general-purpose encoder by supporting geo-entity typing and geo-entity linking, which are key to effectively integrating a large variety of geographic data sources, grounding geo-entities, and supporting a broad range of spatial computing applications. The experiments demonstrate that SPABERT is effective for both tasks and outperforms the SOTA LMs.

² OpenStreetMap: https://www.openstreetmap.org/

2 SPABERT

SPABERT is an LM built upon a pretrained BERT and further trained to produce contextualized geo-entity representations given large geographic datasets. Fig. 1 shows the outline of SPABERT, with the details below. This section first presents the preliminaries (§2.1) and then describes the overall approach for learning geo-entities’ contextualized representations (§2.2), the pretraining strategies (§2.3), and inference procedures (§2.4).

2.1 Preliminary

We assume that given a geographic dataset (e.g., OSM) with many geo-entities, $S = \{g_1, g_2, \ldots, g_l\}$, each geo-entity $g_i$ (e.g., [Speedway, 📍]) has two attributes: name $g^{name}$ (Speedway) and location $g^{loc}$ (📍). The location attribute, $g^{loc}$, is a tuple $g^{loc} = (g^{loc_x}, g^{loc_y}, \ldots)$ that identifies the location in a coordinate system (e.g., x and y image pixel coordinates or latitude and longitude geo-coordinates with altitudes). Without loss of generality, here we assume a 2-dimensional space. SPABERT aims to generate a contextualized representation for each geo-entity $g_i$ in $S$ given its spatial context. We denote a geo-entity that we seek to contextualize as the pivot entity, $g_p$, or $p$ for short. The spatial context of $p$ is $SC(p) = \{g_{n_1}, \ldots, g_{n_k}\}$ where $\mathrm{distance}(p, g_{n_k}) < T$. $T$ is a spatial distance parameter defining a local area. We call $g_{n_1}, \ldots, g_{n_k}$ $p$’s neighboring geo-entities and denote them as $n_1, \ldots, n_k$ when there is no confusion.
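As a minimal sketch of this definition, the spatial context of a pivot can be computed by filtering a dataset with the threshold T. The dictionary layout, the function name `spatial_context`, the sample entities, and the use of planar Euclidean distance are assumptions made for illustration; the text above does not fix a particular distance function.

```python
import math


def spatial_context(pivot, entities, T):
    """Return the entities whose distance to the pivot is below T."""
    px, py = pivot["loc"]
    return [
        g for g in entities
        if g is not pivot
        and math.hypot(g["loc"][0] - px, g["loc"][1] - py) < T
    ]


# Hypothetical entities with planar (x, y) coordinates.
pivot = {"name": "Speedway", "loc": (0.0, 0.0)}
dataset = [
    pivot,
    {"name": "Highway Exit", "loc": (1.0, 1.0)},
    {"name": "Farm", "loc": (30.0, 40.0)},
]
neighbors = spatial_context(pivot, dataset, T=5.0)
```

With geographic coordinates, a geodesic distance would likely be a better fit than the planar distance assumed here.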

2.2 Contextualizing Geo-entities

Linearizing Neighboring Geo-entity Names

For a pivot, $p$, SPABERT first linearizes its neighboring geo-entity names to form a BERT-compatible input sequence, called a pseudo sentence. The corresponding pseudo sentence for the example in Fig. 1 is constructed as:

[CLS] University of Minnesota [SEP] Minneapolis [SEP] St. Anthony Park [SEP] Bloom Island Park [SEP] Bell Museum [SEP]

The pseudo sentence starts with the pivot name followed by the names of the pivot’s neighboring geo-entities, ordered by their spatial distance to the pivot in ascending order. The idea is that nearby geo-entities are more related (for contextualization) than distant geo-entities. SPABERT also tokenizes the pseudo sentences using the original BERT tokenizer with the special token [SEP] to separate entity names. The subtokens of a neighbor $n_k$ are denoted as $T^{n_k}_j$, as in Fig. 2.
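The linearization step can be sketched in a few lines of Python. The function name `build_pseudo_sentence`, the dictionary layout, and the coordinates are hypothetical; only the entity names and their order come from the Fig. 1 example. Subword tokenization with the BERT tokenizer is left out of this sketch.

```python
import math


def build_pseudo_sentence(pivot, neighbors):
    """Concatenate the pivot name and neighbor names, nearest neighbor first."""
    px, py = pivot["loc"]
    ordered = sorted(
        neighbors,
        key=lambda g: math.hypot(g["loc"][0] - px, g["loc"][1] - py),
    )
    names = [pivot["name"]] + [g["name"] for g in ordered]
    return "[CLS] " + " [SEP] ".join(names) + " [SEP]"


# Coordinates are made up for illustration; only the names come from Fig. 1.
pivot = {"name": "University of Minnesota", "loc": (0.0, 0.0)}
neighbors = [
    {"name": "Bell Museum", "loc": (4.0, 0.0)},
    {"name": "Minneapolis", "loc": (0.0, 1.0)},
    {"name": "Bloom Island Park", "loc": (-3.0, 0.0)},
    {"name": "St. Anthony Park", "loc": (2.0, 0.0)},
]
sentence = build_pseudo_sentence(pivot, neighbors)
```

Sorting by distance discards direction, which is why the spatial coordinate embedding is needed to retain orientation.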

Encoding Spatial Relations

SPABERT adopts two types of position embeddings in addition to the token embeddings in the pseudo sentences (Fig. 2). The sequence position embedding represents the token order, same as the original position embedding in BERT and other Transformer LMs. Also, SPABERT incorporates a spatial coordinate embedding mechanism, which seeks to represent the spatial relations between the pivot and its neighboring geo-entities. SPABERT’s spatial coordinate embeddings encode each location dimension separately to capture the relative distance and orientation between geo-entities. Specifically, for the 2-dimensional space, SPABERT generates normalized distances $dist^{n_k}_x$ and $dist^{n_k}_y$ for each neighboring geo-entity using the following equations:

$$dist^{n_k}_x = (g^{loc_x}_{n_k} - g^{loc_x}_p)/Z$$

$$dist^{n_k}_y = (g^{loc_y}_{n_k} - g^{loc_y}_p)/Z$$

where $Z$ is a normalization factor, and $(g^{loc_x}_p, g^{loc_y}_p)$, $(g^{loc_x}_{n_k}, g^{loc_y}_{n_k})$ are the locations of pivot $p$ and neighboring entity $n_k$. Note that the tokens belonging to the same geo-entity name have the same $dist^{n_k}_x$ and $dist^{n_k}_y$. Also, SPABERT uses $D_{SEP}$, a constant numerical value larger than $\max(dist^{n_k}_x, dist^{n_k}_y)$ for all neighboring entities, to differentiate special tokens from entity name tokens.
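A minimal Python sketch of these normalized distances follows. The function names, the sample coordinates, and the values chosen for Z and the special-token constant are hypothetical. The sketch also assumes the pivot's own tokens receive offset 0, which is what the equations yield when applied to the pivot itself.

```python
def normalized_offsets(pivot_loc, entity_loc, Z):
    """Signed x and y offsets from the pivot, divided by the normalization factor Z."""
    return ((entity_loc[0] - pivot_loc[0]) / Z,
            (entity_loc[1] - pivot_loc[1]) / Z)


def per_token_offsets(pivot_loc, entity_locs, tokens_per_entity, Z, d_sep):
    """Build per-token dist_x and dist_y lists for '[CLS] e1 [SEP] e2 [SEP] ...'.

    Special tokens receive the constant d_sep; all subtokens of one entity
    share that entity's offsets.
    """
    xs, ys = [d_sep], [d_sep]  # [CLS]
    for loc, n_tokens in zip(entity_locs, tokens_per_entity):
        dx, dy = normalized_offsets(pivot_loc, loc, Z)
        xs += [dx] * n_tokens + [d_sep]  # entity subtokens, then [SEP]
        ys += [dy] * n_tokens + [d_sep]
    return xs, ys


# Hypothetical values: the pivot comes first, so its own offsets are 0.
xs, ys = per_token_offsets(
    pivot_loc=(10.0, 20.0),
    entity_locs=[(10.0, 20.0), (11.0, 19.0)],
    tokens_per_entity=[3, 1],
    Z=2.0,
    d_sep=20.0,
)
```

Because the offsets are signed, the pair (dist_x, dist_y) carries orientation as well as distance: a neighbor to the east and south of the pivot gets a positive x offset and a negative y offset in this coordinate convention.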

SPABERT encodes $dist^{n_k}_x$ and $dist^{n_k}_y$ using a continuous spatial coordinate embedding layer with real-valued distances as input to preserve the continuity of the output embeddings. Let $S_{n_k}$ be the spatial coordinate embedding of the neighbor entity $n_k$, $M$ be the embedding’s dimension, and $S_{n_k} \in \mathbb{R}^M$. We define $S_{n_k}$ as:

$$S^{(m)}_{n_k} = \begin{cases} \sin(dist^{n_k}/10000^{2j/M}), & m = 2j \\ \cos(dist^{n_k}/10000^{2j/M}), & m = 2j+1 \end{cases}$$

where $S^{(m)}_{n_k}$ is the $m$-th component of $S_{n_k}$. $dist^{n_k}$ represents $dist^{n_k}_x$ and $dist^{n_k}_y$ for the spatial coordinate embeddings along the horizontal and vertical directions, respectively.
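The sinusoidal definition above translates directly into code. The following is a minimal pure-Python sketch; the function name `spatial_coordinate_embedding` and the sample values are illustrative, and a real model would presumably compute this with tensor operations over a whole batch.

```python
import math


def spatial_coordinate_embedding(dist, M):
    """Map a real-valued normalized distance to an M-dimensional embedding.

    Component m = 2j uses sin and component m = 2j + 1 uses cos, both with
    the argument dist / 10000^(2j / M).
    """
    emb = []
    for m in range(M):
        j = m // 2
        angle = dist / (10000 ** (2 * j / M))
        emb.append(math.sin(angle) if m % 2 == 0 else math.cos(angle))
    return emb


# One embedding per axis, computed separately from dist_x and dist_y.
emb_x = spatial_coordinate_embedding(0.5, 8)
emb_y = spatial_coordinate_embedding(-0.5, 8)
```

Since the input is a real number rather than an integer index, nearby distances map to nearby embeddings, which is the continuity property the text refers to.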

The token embedding, sequence position embedding, and spatial coordinate embedding are summed and then fed into the encoder. Similar to BERT, the SPABERT encoder calculates an output embedding for each token. Then SPABERT averages the
