
highlight the different approaches and objectives of FL and

meta learning. While FL focuses on collaborative learning with

devices having different data distributions but performing the

same task, meta learning targets the adaptation of models

to multiple tasks with their corresponding datasets, often

emphasizing fast adaptation and generalization capabilities,

especially by initialization-based algorithms [40]. The goal

of FedMeta is to collaboratively meta-train a learning model

using data from different tasks distributed among devices.

The server maintains the initialized model and updates it by

collecting testing loss from a mini batch of devices. The

transmitted information in the learning process consists of the

model parameter initialization (from a server to devices) and

testing loss (from devices to the server), and no data is required

to be delivered to the server. Compared to FL, FedMeta has

the following advantages:

• FedMeta reduces the required communication cost through faster convergence and increases learning accuracy. It is also highly adaptable and can be employed for arbitrary tasks across multiple devices, which allows efficient and accurate learning in various scenarios.

• FedMeta enables model sharing and local model training without substantially increasing the model size. As a result, it does not consume a large amount of memory, and the resulting global model can be personalized for each device. This allows efficient use of resources and customization of the global model to the specific requirements of each device.
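The FedMeta exchange described above (the server sends a model initialization, a mini batch of devices adapts it locally and reports test-set information, and no raw data leaves a device) can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the source: the scalar model `y_hat = w * x`, the synthetic per-device tasks, the single inner gradient step, the learning rates, and the names `make_device`, `device_update`, and `fedmeta_train`. The text says devices upload testing loss; so that the server can apply a gradient step, this sketch also uploads the gradient of that loss evaluated at the adapted model, which is a first-order approximation rather than a full meta-gradient.

```python
import random


def mse(w, data):
    """Mean squared error of the scalar model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def mse_grad(w, data):
    """Gradient of the mean squared error with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in data) / len(data)


def make_device(slope, rng, n=10):
    """Hypothetical device holding a private task y = slope * x.

    Returns (support, query): the data used for local adaptation and
    the held-out data used to compute the test loss.
    """
    xs = [rng.uniform(-1.0, 1.0) for _ in range(2 * n)]
    data = [(x, slope * x) for x in xs]
    return data[:n], data[n:]


def device_update(w_init, support, query, inner_lr):
    """Runs on the device; only the two returned scalars are uploaded."""
    # Local adaptation: one gradient step from the shared initialization.
    w_adapted = w_init - inner_lr * mse_grad(w_init, support)
    # Test loss of the adapted model, plus its gradient (first-order assumption).
    return mse(w_adapted, query), mse_grad(w_adapted, query)


def fedmeta_train(devices, rounds=200, batch_size=3,
                  inner_lr=0.1, outer_lr=0.1, seed=0):
    """Server loop: maintain the initialization and update it each round."""
    rng = random.Random(seed)
    w = 0.0  # model parameter initialization kept by the server
    history = []
    for _ in range(rounds):
        batch = rng.sample(devices, batch_size)  # mini batch of devices
        reports = [device_update(w, s, q, inner_lr) for s, q in batch]
        avg_loss = sum(loss for loss, _ in reports) / batch_size
        avg_grad = sum(grad for _, grad in reports) / batch_size
        w -= outer_lr * avg_grad  # server-side update of the initialization
        history.append(avg_loss)
    return w, history


if __name__ == "__main__":
    rng = random.Random(1)
    devices = [make_device(s, rng) for s in (2.0, 2.5, 3.0, 3.5, 4.0)]
    w, history = fedmeta_train(devices, rounds=300)
    print("learned initialization:", w)
    print("first and last reported test loss:", history[0], history[-1])
```

In this toy setting each device can personalize the shared initialization by rerunning the single local step in `device_update` on its own support data, which mirrors the personalization advantage listed above without enlarging the model.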

A. Related Works

This section provides a brief review of relevant surveys and

tutorials on FL and meta-learning. Additionally, it highlights

the novel contributions of this paper.

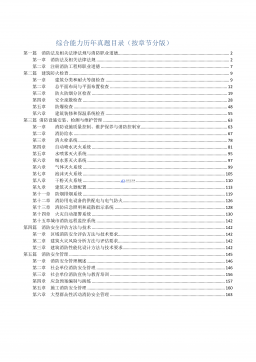

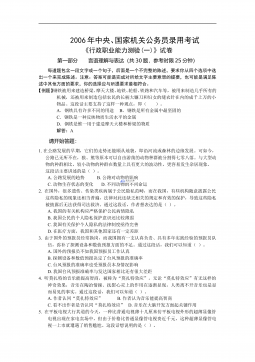

1) FL: In the past 5 years, numerous surveys and tutorials

have been published on FL methodologies [41]–[52] and their

applications over wireless networks [53]–[65]. To differentiate

our tutorial from these existing surveys and tutorials, we have

classified them into different categories based on their primary

focus in Table I. We then compare and summarize the content

of these surveys and tutorials, aligning them with the structure

of our own tutorial, as presented in Tables II and III. Table I

highlights that surveys and tutorials for FL methodologies pri-

marily focus on either fundamental definitions, architectures,

challenges, future directions, and applications of FL [41], [43],

[44], or a specific subfield of FL, such as emerging trends of

FL (FL in the intersection with other learning paradigms) [45],

communication efficiency of FL (challenges and constraints

caused by limited bandwidth and computation ability) [46],

fairness-aware FL (client selection, optimization, contribution

evaluation, incentive distribution, and performance metrics)

[47], federated reinforcement learning (FRL) (definitions, evo-

lution, and advantages of horizontal FRL and vertical FRL)

[48], FL for natural language processing (NLP) (algorithm

challenges, system challenges as well as privacy issues) [49],

incentive schemes for FL (Stackelberg game, auction, contract

theory, Shapley value, reinforcement learning, and blockchain)

[50], [51], unlabeled data mining in FL (potential research

directions, application scenarios, and challenges) [42], and

FL over next-generation Ethernet Passive Optical Networks

[52]. On the other hand, FL surveys and tutorials for wire-

less networks mainly focus on either fundamental theories,

key techniques, challenges, future directions, and applications

[54]–[57], [64], or a specific subfield of FL applied in wireless

networks, including FL in IoT (data sharing, offloading and

caching, attack detection, localization, mobile crowdsensing,

and privacy) [58], [61], [62], communication-efficient FL un-

der various challenges incurred by communication, computing,

energy, and data privacy issues [53], [63], FL for mobile edge

computing (MEC) (communication cost, resource allocation,

data privacy, data security, and implementation) [59], collab-

orative FL (definitions, advantages, drawbacks, usage condi-

tions, and performance metrics) [60], and asynchronous FL

(device heterogeneity, data heterogeneity, privacy and security,

and applications) [65]. To the best of the authors’ knowledge,

this is the first paper considering FL methodologies in different

research areas, including model aggregation, gradient descent,

communication efficiency, fairness, Bayesian learning, and

clustering, and how FL algorithms evolve from the canonical

one, namely, federated averaging (FedAvg), in detail. Also,

we present a qualitative comparison considering advantages

and disadvantages among different FL algorithms in the same

research area.

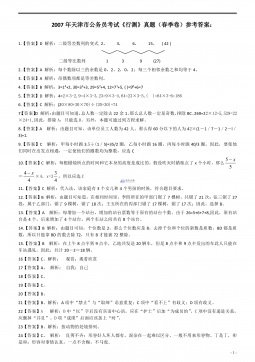

2) Meta Learning: There have been several surveys and

tutorials focusing on meta learning methodologies over the

past 20 years [66]–[72]. However, to the best of the authors’

knowledge, there are no existing surveys and tutorials on meta

learning over wireless networks. To distinguish the scope of

our tutorial from existing literature, we classify the available

meta learning surveys and tutorials into different categories

based on their focus, as presented in Table I. Furthermore, we

compare and summarize the content of these existing surveys

and tutorials in Table IV, aligning them with the structure

and content of our own tutorial. Table I illustrates that the

existing meta learning surveys and tutorials focus either on

the general advancement, including definitions, models, chal-

lenges, research directions, and applications, of meta learning

[66], [67], [70], [71], or on the detailed applications

of meta learning in a specific field, including

algorithm selection (transfer learning, few-shot learning, and

beyond supervised learning) for data mining [68], NLP (es-

pecially few-shot applications, including definitions, research

directions, and some common datasets) [69], and multi-modal

meta learning in terms of the methodologies (few-shot learning

and zero-shot learning) and applications [72]. To the best

of the authors’ knowledge, no existing surveys or tutorials

have covered the application of FedMeta in wireless networks,

making our tutorial a unique contribution to this area of

research.

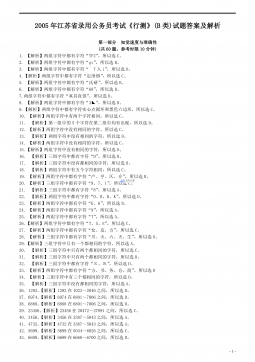

Tables II, III, and IV provide a comparative analysis of

existing surveys and tutorials in relation to our tutorial. It

is observed that the existing literature only covers a limited

number of subtopics related to our tutorial and offers only

brief descriptions of the corresponding learning algorithms. In

contrast, our tutorial goes beyond these limitations by provid-
