Enabling ISP-less Low-Power Computer Vision

Gourav Datta, Zeyu Liu, Zihan Yin, Linyu Sun, Akhilesh R. Jaiswal, Peter A. Beerel

University of Southern California, Los Angeles, USA

{gdatta, liuzeyu, zihanyin, linyusun, akhilesh, pabeerel}@usc.edu

Abstract

Current computer vision (CV) systems use an image signal processing (ISP) unit to convert the high-resolution raw images captured by image sensors to visually pleasing RGB images. Typically, CV models are trained on these RGB images and have yielded state-of-the-art (SOTA) performance on a wide range of complex vision tasks, such as object detection. In addition, in order to deploy these models on resource-constrained low-power devices, recent works have proposed in-sensor and in-pixel computing approaches that try to partly or fully bypass the ISP and yield significant bandwidth reduction between the image sensor and the CV processing unit by downsampling the activation maps in the initial convolutional neural network (CNN) layers. However, direct inference on the raw images degrades the test accuracy due to the difference in covariance of the raw images captured by the image sensors compared to the ISP-processed images used for training. Moreover, it is difficult to train deep CV models on raw images, because most (if not all) large-scale open-source datasets consist of RGB images. To mitigate this concern, we propose to invert the ISP pipeline, which can convert the RGB images of any dataset to their raw counterparts and enable model training on raw images. We release the raw version of the COCO dataset, a large-scale benchmark for generic high-level vision tasks. For ISP-less CV systems, training on these raw images results in a ∼7.1% increase in test accuracy on the visual wake words (VWW) dataset compared to relying on training with traditional ISP-processed RGB datasets. To further improve the accuracy of ISP-less CV models and to increase the energy and bandwidth benefits obtained by in-sensor/in-pixel computing, we propose an energy-efficient form of analog in-pixel demosaicing that may be coupled with in-pixel CNN computations. When evaluated on raw images captured by real sensors from the PASCALRAW dataset, our approach results in an 8.1% increase in mAP. Lastly, we demonstrate a further 20.5% increase in mAP by using a novel application of few-shot learning with thirty shots for each of the three classes of the novel PASCALRAW dataset.
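To make the idea of inverting the ISP more concrete, the following Python sketch maps an 8-bit sRGB image to a single-channel Bayer mosaic. It is a simplified, hand-crafted inversion under stated assumptions, not the pipeline proposed in this work: it assumes an RGGB layout, undoes only the standard sRGB transfer curve and a per-channel white balance, and uses hypothetical white-balance gains (`wb_gains`). The names `srgb_to_linear`, `mosaic_rggb`, and `invert_isp` are illustrative only.

```python
import numpy as np


def srgb_to_linear(rgb: np.ndarray) -> np.ndarray:
    """Invert the standard sRGB transfer curve for values in [0, 1]."""
    return np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)


def mosaic_rggb(rgb: np.ndarray) -> np.ndarray:
    """Sample an H x W x 3 image onto a single-channel RGGB Bayer pattern."""
    h, w, _ = rgb.shape
    raw = np.empty((h, w), dtype=rgb.dtype)
    raw[0::2, 0::2] = rgb[0::2, 0::2, 0]  # R at even rows, even columns
    raw[0::2, 1::2] = rgb[0::2, 1::2, 1]  # G at even rows, odd columns
    raw[1::2, 0::2] = rgb[1::2, 0::2, 1]  # G at odd rows, even columns
    raw[1::2, 1::2] = rgb[1::2, 1::2, 2]  # B at odd rows, odd columns
    return raw


def invert_isp(rgb_uint8: np.ndarray, wb_gains=(2.0, 1.0, 1.5)) -> np.ndarray:
    """Simplified inverse ISP (assumption): de-gamma, undo white balance, mosaic.

    wb_gains are hypothetical per-channel (R, G, B) gains that a forward ISP
    would have multiplied in; here each channel is divided by its gain.
    """
    rgb = rgb_uint8.astype(np.float64) / 255.0
    linear = srgb_to_linear(rgb)
    linear = linear / np.asarray(wb_gains, dtype=np.float64)
    return np.clip(mosaic_rggb(linear), 0.0, 1.0)


# Usage: a pure-white 4 x 4 image becomes a 4 x 4 single-channel mosaic.
raw = invert_isp(np.full((4, 4, 3), 255, dtype=np.uint8))
print(raw.shape)
```

A complete inversion would also have to undo stages such as color correction and tone mapping, and may additionally model sensor noise; this sketch omits those steps.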

1. Introduction

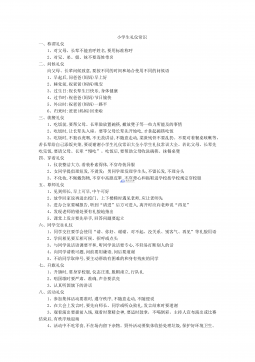

Modern high-resolution cameras generate huge amounts of visual data in the form of raw Bayer color filter array (CFA) images, also known as mosaic patterns (see Fig. 1), which must be processed for downstream CV tasks [43, 1]. An ISP unit, consisting of several pipelined processing stages, is typically used before the CV processing to convert the raw mosaiced images to their RGB counterparts [20, 42, 26, 29]. The ISP step that converts these single-channel CFA images to three-channel RGB images is called demosaicing. Historically, the ISP has proven extremely effective for computational photography applications, where the goal is to generate images that are aesthetically pleasing to the human eye [29, 8]. However, is the ISP needed for high-level CV applications, such as face detection by smart security cameras, where the sensor data is unlikely to be viewed by any human? Existing works [42, 20, 26] show that most ISP steps can be discarded with only a small drop in test accuracy on large-scale image recognition tasks. Removing the ISP can potentially enable existing in-sensor [31, 10, 2] and in-pixel [5, 27, 12, 13, 14] computing paradigms to perform CV computations, such as CNNs, partly in the sensor, and thereby reduce the bandwidth and energy incurred in transferring data between the sensor and the CV system. Moreover, most low-power cameras with a resolution of a few MPixels do not have an on-board ISP [3], so the ISP must be implemented off-chip, which increases the energy consumption of the total CV system.
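As an illustration of the demosaicing step described above, the sketch below converts a single-channel RGGB mosaic into a three-channel image by collapsing each 2x2 Bayer cell into one RGB pixel and averaging its two green samples. This binning scheme is an assumption chosen for brevity; it is not the analog in-pixel demosaicing proposed in this work, and `demosaic_bin2x2` is a hypothetical name.

```python
import numpy as np


def demosaic_bin2x2(raw: np.ndarray) -> np.ndarray:
    """Collapse each 2x2 RGGB cell of an H x W raw frame into one RGB pixel.

    Returns an array of shape (H // 2, W // 2, 3); the two green samples in
    each cell are averaged. Assumes an RGGB layout (R at the top-left).
    """
    h, w = raw.shape
    if h % 2 or w % 2:
        raise ValueError("raw frame must have even height and width")
    raw = raw.astype(np.float64)  # avoid integer overflow when summing greens
    r = raw[0::2, 0::2]
    g = (raw[0::2, 1::2] + raw[1::2, 0::2]) / 2.0
    b = raw[1::2, 1::2]
    return np.stack([r, g, b], axis=-1)


# Usage: one 2x2 Bayer cell -> a single RGB pixel with values (10, 25, 40).
cell = np.array([[10, 20], [30, 40]], dtype=np.uint8)
print(demosaic_bin2x2(cell).shape)
```

Binning halves the spatial resolution but needs no interpolation, whereas interpolating demosaicers (e.g., bilinear) preserve full resolution at the cost of more computation.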

Although ISP removal can facilitate model deployment on resource-constrained edge devices, one key challenge is that most large-scale datasets used to train CV models are ISP-processed. Since there is a large covariance shift between raw and RGB images (see Fig. 1, which shows histograms of the pixel intensity distributions of RGB and raw images), models trained on ISP-processed RGB images exhibit a significant drop in accuracy when inference is performed directly on raw images, i.e., with the ISP removed. One recent work has leveraged trainable flow-based invertible neural networks [44] to convert raw to RGB images and vice versa using open-source ISP datasets. These networks have recently yielded SOTA test


arXiv:2210.05451v1 [cs.CV] 11 Oct 2022
