son’s face is occluded, it is possible to send alert messages

to the user’s device when an emergency occurs. Connect-

ing a pedestrian’s vision data to a wireless identifier such as

a smartphone ID necessitates an opt-in requirement for the

messaging application.

Existing approaches associating information across

modalities use information such as camera and laser ranging

[26, 23], or clothing color and motion patterns [28]. Among

multimodal methods for vision and wireless, [4] fuses cam-

era and received signal strength (RSS) data, and [29, 9] fuse

camera and WiFi channel state information (CSI). These

prior vision and wireless methods have limitations such as

requiring multiple WiFi access points (APs). Most similar

to our work are Vi-Fi [17] and ViTag [5], which use the

vision modality (camera and depth data) along with the

WiFi modality (FTM and inertial measurement unit, IMU),

to perform cross-modal association using a single AP. By

comparison, our work uses fewer features: only depth data

and FTM information create the multimodal association,

while camera data and off-the-shelf bounding-box detections

are used solely to extract depth. Moreover, unlike Vi-Fi and

ViTag, we use no hand-labeled data.

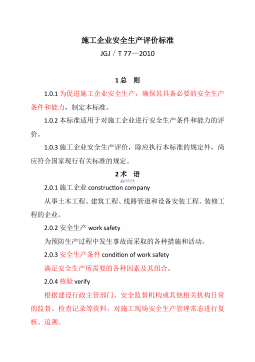

In this paper, we present ViFiCon, a self-supervised

contrastive learning model that associates camera and

wireless modalities without hand-labeled or ground-truth

data. Hand-labeling datasets (providing ground-truth

associations between vision and wireless) is expensive and

time-consuming, so we instead create multimodal

associations without any hand-labeled data.

We leverage bounding boxes for person detection using an

off-the-shelf object detection model [1] to obtain combina-

tions of pedestrian depths from the vision modality, with-

out knowledge of which pedestrians are contributing FTM

data. We then construct positive and negative pairings of

these depth combinations from the vision domain and the

known FTM distances from the wireless domain based on

passively collected timestamp information, as inspired by

the time contrastive audio and video self-supervised syn-

chronization task proposed in [14]. To bring the two modal-

ities into a joint representation, we create a novel band im-

age representation that maps the signals into a sequence

of gray-scale bands. Not only can we represent a single

signal for each modality, but we can also combine several of

these signals in a single image to learn both a scene-wide

temporal synchronization of the data and a downstream

signal-to-signal association between the depth and FTM

data. Because the vision domain uses unlabeled bounding

boxes, the fixed-size FTM image representation determines

how many depth signals should be considered in a single

image representation. That is, the number of smartphones is

known and constrains the number of relevant bounding box

depth signals. We then train a Siamese convolutional neural

network model on the scene-wide synchronization task to

embed the positive and negative pairings into a joint latent

space with a Euclidean distance-based contrastive loss.

Once trained on the pseudo-labeled data, the model can be

applied to the downstream individual association task

without further training. Figure 1 illustrates the motivation

for ViFiCon.
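
The timestamp-based pairing and the Euclidean distance-based contrastive loss described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: the margin-based form of the loss, the margin value, and the names `make_pairs` and `contrastive_loss` are assumptions, and the embeddings are plain Python lists standing in for the outputs of the two network branches.

```python
import math


def euclidean_distance(a, b):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(emb_vision, emb_ftm, is_positive, margin=1.0):
    """Margin-based contrastive loss for one pair (assumed form).

    Positive pairs are pulled together (loss = d^2); negative pairs are
    pushed apart until they are at least `margin` away.
    """
    d = euclidean_distance(emb_vision, emb_ftm)
    if is_positive:
        return d ** 2
    return max(0.0, margin - d) ** 2


def make_pairs(vision_windows, ftm_windows):
    """Build pseudo-labeled pairs from timestamps alone.

    Both arguments are dicts mapping a timestamp to a window of data
    (a hypothetical structure). Windows sharing a timestamp form a
    positive pair; all other combinations are negatives.
    """
    pairs = []
    for t_vision, vision in vision_windows.items():
        for t_ftm, ftm in ftm_windows.items():
            pairs.append((vision, ftm, t_vision == t_ftm))
    return pairs
```

No hand-labeled association enters this sketch: the only supervision signal is whether two windows were recorded at the same time.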

Summary of Contributions We summarize our contribu-

tions as follows:

• We generate a novel representation of signal data,

which represents a group of signals from two modalities as

a set of gray-scale bands.

• We devise a self-supervised learning framework to

learn a multimodal latent space representation of signal data

without the use of hand-labelling.

• We demonstrate the strength of the convolutional neu-

ral network in generalizing a global scene view of signal

data in a pretext synchronization task to a one-to-one indi-

vidual association downstream task without further training,

yielding an 84.77% Identity Precision on a one-to-one asso-

ciation with a 10-frame temporal window view.
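
As a rough illustration of the band representation named in the first contribution, the sketch below renders each signal as a horizontal band of gray levels, with one column per frame. The function names, the 20 m normalization range, the band height, and the row and column layout are all assumptions made for illustration and are not taken from the paper.

```python
def signal_to_band(signal, max_range, band_height):
    """Turn one 1-D distance signal into `band_height` identical rows.

    Each value is clipped to [0, max_range] and scaled to a gray level
    in 0..255 (assumed normalization).
    """
    row = []
    for value in signal:
        clipped = min(max(value, 0.0), max_range)
        row.append(round(255 * clipped / max_range))
    return [list(row) for _ in range(band_height)]


def band_image(signals, max_range=20.0, band_height=4):
    """Stack one gray-scale band per signal into a single 2-D image.

    The result is a list of rows; columns index frames in the temporal
    window, and each consecutive group of `band_height` rows is one signal.
    """
    image = []
    for signal in signals:
        image.extend(signal_to_band(signal, max_range, band_height))
    return image
```

For a 10-frame window holding two FTM signals, `band_image` returns an image with `2 * band_height` rows and 10 columns; a depth image built from the same number of bounding-box signals has the same shape, so the two can be fed to matching network branches.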

2. Background and Related Work

Multimodal Association Multimodal association attempts

to enhance a deep learning model’s performance by intro-

ducing a more robust context of a scene through shared

knowledge between domains. Traditionally, these modal-

ities are fused together by mapping the data from the two

into a shared latent space representation, where vector rep-

resentations of the data can be directly compared with one

another. Multimodal association has been applied to audio

and video domains, for example to decide whether an input

video of an instrument corresponds to given audio samples

[14] or to match a video of lip movements to mel-spectrogram

audio representations [7]. Other works use multimodal deep

learning to fuse audio and text for sentiment recognition

[12], and video and wireless sensors for human activity

recognition [29] or tracking [21, 22].

We specifically build on work associating vision-domain

information (such as RGB or depth data) with wireless-domain

sensor data such as WiFi Fine Time Measurements (FTM) or

inertial measurement unit (IMU) data. Because WiFi signals

can pass through obstructions such as walls [3], we can use

the WiFi modality along with the vision domain to re-identify

or track individuals behind obstructions, which is not

possible with vision alone. RGB-W [4] leverages

captured video data and received signal strength (RSS)

data from cell phones’ WiFi or Bluetooth in the scene to

associate detected bounding boxes with cell phone MAC

addresses. Despite working indoors, an improvement over
