object, probably because it is very small or highly transparent. Using hand features, our model implicitly focuses on these challenging objects.

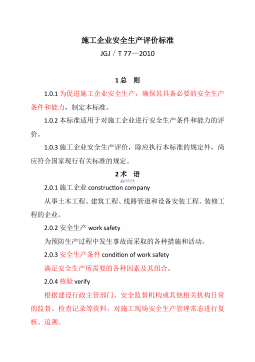

Our proposed Holistic Interaction Transformer (HIT) network uses fine-grained context, including person pose, hands, and objects, to construct a bi-modal interaction structure. Each modality comprises three main components: person interaction, object interaction, and hand interaction. Each of these components learns valuable local action patterns. We then use an Attentive Fusion Mechanism to combine the different modalities before learning temporal information from neighboring frames, which helps us better detect the actions occurring in the current frame. We perform experiments on the J-HMDB [13], UCF101-24 [35], MultiSports [18] and AVA [10] datasets. Our method achieves state-of-the-art performance on the first three while being competitive with the SOTA methods on AVA.
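To make the fusion step described above more concrete, the following is a minimal sketch of attention-weighted fusion of two modality features. It is an illustration under stated assumptions, not the fusion mechanism used in HIT: the function name `attentive_fuse`, the scoring vector `score_weights` (standing in for learned parameters), and the toy feature values are all hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attentive_fuse(rgb_feat, pose_feat, score_weights):
    """Fuse two same-length feature vectors with per-modality attention.

    Assumption: each modality is scored by a dot product with a
    hypothetical learned vector `score_weights`; the softmax over the
    two scores decides how much each modality contributes.
    """
    scores = [
        sum(w * x for w, x in zip(score_weights, feat))
        for feat in (rgb_feat, pose_feat)
    ]
    alpha = softmax(scores)
    fused = [alpha[0] * r + alpha[1] * p for r, p in zip(rgb_feat, pose_feat)]
    return fused, alpha


# Toy features (illustrative values only).
rgb = [1.0, 0.0, 2.0]
pose = [0.0, 1.0, 0.0]
weights = [0.5, 0.5, 0.5]
fused, alpha = attentive_fuse(rgb, pose, weights)
```

In this toy case the RGB vector receives the larger score, so it dominates the fused feature. The weights act as a soft selection between modalities rather than a hard choice.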

The main contributions of this paper can be summarized as follows:

• We propose a novel framework that combines RGB, pose and hand features for action detection.

• We introduce a bi-modal Holistic Interaction Transformer (HIT) network that combines different kinds of interactions in an intuitive and meaningful way.

• We propose an Attentive Fusion Module (AFM) that works as a selective filter to keep the most informative features from each modality and an Intra-Modality Aggregator (IMA) for learning useful action representations within the modalities.

• Our method achieves state-of-the-art performance on three of the most challenging spatio-temporal action detection datasets.

2. Related Work

2.1. Video Classification

Video classification consists of recognizing the activity happening in a video clip. Usually, the clip spans a few seconds and has a single label. Most recent approaches to this task use 3D CNNs [1, 5, 6, 41] since they can process the whole video clip as input, as opposed to considering it as a sequence of frames [30, 39]. Due to the scarcity of labeled video datasets, many researchers rely on models pre-trained on ImageNet [1, 42, 48] and use them as backbones to extract video features. Two-stream networks [5, 6] are another widely used approach to video classification thanks to their ability to process only a fraction of the input frames, striking a good balance between accuracy and complexity.

2.2. Spatio-Temporal Action Detection

In recent years, more attention has been given to spatio-temporal action detection [5, 7, 17, 28, 40]. As the name (spatio-temporal) suggests, instead of classifying the whole video into one class, we need to detect the actions in space, i.e., the actions of everyone in the current frame, and in time, since each frame might contain a different set of actions. Most recent works on spatio-temporal action detection use a 3D CNN backbone [27, 43] to extract video features and then crop the person features from the video features using either ROI pooling [8] or ROI align [12]. Such methods discard all the other potentially useful information contained in the video.
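The cropping step described above can be sketched as follows. This is a simplified, single-channel, 2D max-pooling version of ROI pooling written for illustration only. The function name `roi_max_pool`, the box convention (integer feature-map cells, exclusive upper bounds), and the toy feature map are assumptions. Real pipelines operate on multi-channel spatio-temporal features, and ROI align replaces the integer binning used here with bilinear sampling.

```python
def roi_max_pool(feature_map, box, out_size=2):
    """Max-pool the region `box` of a 2D feature map into an
    out_size x out_size grid.

    feature_map: list of rows (single channel, for simplicity).
    box: (x1, y1, x2, y2) in feature-map cells, x2 and y2 exclusive.
    Assumes the box is at least one cell wide and one cell high.
    """
    x1, y1, x2, y2 = box
    h, w = y2 - y1, x2 - x1
    pooled = []
    for i in range(out_size):
        # Integer bin boundaries along the height; each bin covers
        # at least one cell even when the box is smaller than the grid.
        ys = y1 + (i * h) // out_size
        ye = y1 + max(((i + 1) * h) // out_size, (i * h) // out_size + 1)
        row = []
        for j in range(out_size):
            xs = x1 + (j * w) // out_size
            xe = x1 + max(((j + 1) * w) // out_size, (j * w) // out_size + 1)
            row.append(
                max(feature_map[y][x]
                    for y in range(ys, ye)
                    for x in range(xs, xe))
            )
        pooled.append(row)
    return pooled


# Toy 4x4 feature map and a hypothetical person box covering its
# lower-right 3x3 region.
fmap = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
person_feat = roi_max_pool(fmap, (1, 1, 4, 4), out_size=2)
```

Everything outside the box (here, the first row and first column) never reaches `person_feat`, which is the loss of context that the paragraph above points out.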

2.3. Interaction Modeling

What if the spatio-temporal action detection task really is an interaction modeling task? In fact, most of our everyday actions are interactions with our environment (e.g., other persons, objects, ourselves) and interactions between our actions (for instance, it is very likely that “open the door” is followed by “close the door”). The interaction modeling idea has spurred a wave of research on how to effectively model interactions for video understanding [28, 40, 43].

Most research in this area uses the attention mechanism. [25, 52] propose the Temporal Relation Network (TRN), which learns temporal dependencies between frames or, in other words, the interactions between entities from adjacent frames. Other methods model not just temporal but also spatial interactions between different entities from the same frame [26, 40, 43, 49, 53]. Nevertheless, the choice of entities for which to model the interactions differs by model. Rather than using only human features, [28, 46] choose to use the background information to model interactions between the person in the frame and the context. They still crop the persons’ features but do not discard the remaining background features. Such an approach provides rich information about the person’s surroundings. However, while the context says a lot, it might introduce noise.

Attempting to be more selective about the features to use, [26, 40] first pass the video frames through an object detector, crop both the object and person features, and then model their interactions. This extra layer of interaction provides better representations than models that consider human interactions alone and helps with classes related to objects such as “work on a computer”. However, they still fall short when the objects are too small to be detected or not in the current frame.
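As a rough illustration of person-object interaction modeling with attention, the sketch below lets a person feature attend over the detected object features using scaled dot-product attention. It is a generic example, not the formulation of any cited method: the function name `person_object_attention`, the residual update, and the omission of learned query/key/value projections are all simplifying assumptions.

```python
import math


def person_object_attention(person, objects):
    """Enhance a person feature with attention over object features.

    person: list of floats (query).
    objects: list of same-length feature vectors (keys and values).
    Learned projections are omitted for brevity (assumption).
    Returns the enhanced person feature and the attention weights.
    """
    if not objects:
        # No detected objects: nothing to attend to, so the person
        # feature is returned unchanged.
        return list(person), []

    d = len(person)
    scores = [
        sum(p * o for p, o in zip(person, obj)) / math.sqrt(d)
        for obj in objects
    ]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]

    # Weighted sum of object features, added back to the person feature.
    context = [
        sum(w * obj[k] for w, obj in zip(weights, objects))
        for k in range(d)
    ]
    enhanced = [p + c for p, c in zip(person, context)]
    return enhanced, weights


# Toy features (illustrative values only).
person = [1.0, 0.0]
objects = [[1.0, 0.0], [0.0, 1.0]]
enhanced, weights = person_object_attention(person, objects)
unchanged, no_weights = person_object_attention(person, [])
```

The empty-list branch mirrors the limitation noted above: when the detector misses an object, this kind of interaction adds no information to the person feature.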

2.4. Multi-modal Action Detection

Most recent action detection frameworks use only RGB features. The few exceptions, such as [10, 34, 36, 38] and [29], use optical flow to capture motion. [38] employs an
