for data-scarce issues due to the small number of human-annotated corpora and privacy concerns.

In this work, we fine-tune PLMs from the general domain (BERT), the biomedical domain (BioBERT), and the clinical domain (ClinicalBERT), examining, via adaptation and fine-tuning, how well they perform on a clinical information extraction task, namely the extraction of drugs and drug-related attributes from the n2c2-2018 shared task data. We then compare their results with those of a lightweight Transformer model trained from scratch, and further investigate the impact of an additional CRF layer on the deployed models.
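Comparing how well the models perform requires an entity-level score. The following is a minimal sketch of micro-averaged, strict-match precision, recall and F1 computed from BIO tag sequences. The BIO scheme, the strict (exact type and boundary) matching criterion, and the entity type names `Drug` and `Strength` are illustrative assumptions here, not necessarily the exact evaluation protocol of this work or of the n2c2-2018 organizers.

```python
from typing import List, Set, Tuple

# (entity type, start token index, end token index exclusive)
Span = Tuple[str, int, int]


def bio_to_spans(tags: List[str]) -> Set[Span]:
    """Collect (type, start, end) entity spans from a BIO tag sequence."""
    spans: Set[Span] = set()
    etype, start = None, None
    for i, tag in enumerate(tags + ["O"]):  # sentinel "O" closes a trailing entity
        # Close the open entity on "O", on a new "B-", or on an "I-" of another type.
        if etype is not None and (tag == "O" or tag.startswith("B-") or tag[2:] != etype):
            spans.add((etype, start, i))
            etype, start = None, None
        # An "I-" tag with no open entity is treated as the start of a new entity.
        if tag.startswith("B-") or (tag.startswith("I-") and etype is None):
            etype, start = tag[2:], i
    return spans


def entity_prf(gold: List[List[str]], pred: List[List[str]]) -> Tuple[float, float, float]:
    """Micro-averaged strict-match precision, recall and F1 over all sentences."""
    tp = n_pred = n_gold = 0
    for g_tags, p_tags in zip(gold, pred):
        g, p = bio_to_spans(g_tags), bio_to_spans(p_tags)
        tp += len(g & p)  # a hit needs identical type, start and end
        n_pred += len(p)
        n_gold += len(g)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical example: the predicted Strength span is cut one token short.
gold = [["B-Drug", "I-Drug", "O", "B-Strength", "I-Strength"]]
pred = [["B-Drug", "I-Drug", "O", "B-Strength", "O"]]
print(entity_prf(gold, pred))  # (0.5, 0.5, 0.5)
```

Under strict matching a boundary error counts as both a false positive and a false negative, which is why the truncated Strength span halves both precision and recall in this toy case.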

Section 2 gives more details on related work, Section 3 introduces the methodologies for our investigation, Section 4 describes our data preprocessing and experimental setups, Section 5 presents the evaluation results and ablation studies, Section 6 further discusses data-constrained training in light of the n2c2-2018 shared tasks; finally, Section 7 concludes this paper and outlines ideas for future work. Readers can refer to the Appendix for more details on the experimental analysis and relevant findings.

2 Related Work

The integration of pre-trained language models into applications within the biomedical and clinical domains has emerged as a prominent trend in recent years. A significant contribution to this field is BioBERT (Lee et al., 2019), which was among the first to explore the advantages of training a BERT-based model on domain-specific data, i.e., biomedical text. BioBERT demonstrated that training BERT on PubMed abstracts and PubMed Central (PMC) full-text articles resulted in superior performance on Named Entity Recognition (NER) and Relation Extraction (RE) tasks within the biomedical domain.

However, since BioBERT was first pre-trained on general-domain data such as Wikipedia and BooksCorpus (Zhu et al., 2015) and then continually trained on biomedical data, PubMedBERT (Gu et al., 2021) examined the advantages of training a model from scratch solely on biomedical data, employing the same PubMed data as BioBERT to avoid the influence of mixed domains. This choice was motivated by the observation that word distributions differ across domains, so a vocabulary built from general-domain text represents biomedical terms differently (often as several fragmented subwords) than an in-domain vocabulary does. Furthermore, PubMedBERT introduced a new benchmark named BLURB, which covers more tasks than those used to evaluate BioBERT and includes entity types such as disease, drug, gene, organ, and cell.
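The vocabulary point can be illustrated with BERT-style WordPiece tokenization, which splits each word by greedy longest-match-first lookup in a fixed vocabulary. The sketch below assumes two tiny, hypothetical vocabularies (`general_vocab`, `biomed_vocab`) standing in for a general-domain and an in-domain vocabulary; they are not the actual BERT, BioBERT or PubMedBERT vocabularies.

```python
from typing import List, Set


def wordpiece(word: str, vocab: Set[str], unk: str = "[UNK]") -> List[str]:
    """Greedy longest-match-first WordPiece tokenization of a single word."""
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, shrinking until it is in the vocabulary.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # word-internal pieces carry the "##" prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # as in BERT, the whole word maps to [UNK] if any part fails
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabularies for illustration only.
general_vocab = {"war", "##far", "##in"}
biomed_vocab = {"warfarin"}

print(wordpiece("warfarin", general_vocab))  # ['war', '##far', '##in']
print(wordpiece("warfarin", biomed_vocab))   # ['warfarin']
```

With the general-domain toy vocabulary the drug name is broken into pieces that carry unrelated everyday meanings, whereas the in-domain vocabulary keeps it as a single unit.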

PubMedBERT and BioBERT both focused on biomedical knowledge, leaving closely related domains such as the clinical one for future exploration. Alsentzer et al. (2019) demonstrated that ClinicalBERT, trained on generic clinical text and discharge summaries, exhibited superior performance on medical language inference, on clinical NER (i2b2-2010 and 2012), and on de-identification tasks (i2b2-2006 and 2014). Similarly, Huang et al. (2019) found that their ClinicalBERT, a separately developed model of the same name trained on clinical notes, achieved improved predictive performance for hospital readmission after fine-tuning on this specific task.

In our work on the clinical domain, we use the n2c2-2018 shared task corpus, which provides electronic health records (EHRs) as semi-structured letters (with a header specifying drug names, patient names, doses, relations, etc., and a body describing the diagnoses and treatment in free text). We aim to examine how fine-tuned PLMs perform against domain-specific Transformers trained from scratch on biomedical and clinical text mining.
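Before any of these models can be trained for NER, character-level entity annotations on the letters have to be turned into per-token labels. The sketch below assumes brat-style standoff annotations given as hypothetical `(type, start_char, end_char)` tuples with exclusive end offsets, a simple regex tokenizer, and the BIO scheme; the example sentence and entity type names are invented for illustration, and the actual preprocessing used in this work may differ.

```python
import re
from typing import List, Tuple

# Hypothetical annotation tuple: (entity type, start char, end char exclusive).
Annotation = Tuple[str, int, int]


def tokenize_with_offsets(text: str) -> List[Tuple[str, int, int]]:
    """Split into word and punctuation tokens while keeping character offsets."""
    return [(m.group(), m.start(), m.end()) for m in re.finditer(r"\w+|[^\w\s]", text)]


def char_spans_to_bio(text: str, anns: List[Annotation]) -> List[Tuple[str, str]]:
    """Give each token fully inside an annotated span a B-/I- label, else "O"."""
    tokens = tokenize_with_offsets(text)
    labels = ["O"] * len(tokens)
    # Spans are applied left to right; an overlapping later span overwrites earlier labels.
    for etype, a_start, a_end in sorted(anns, key=lambda a: a[1]):
        inside = [i for i, (_, t_start, t_end) in enumerate(tokens)
                  if t_start >= a_start and t_end <= a_end]
        for k, i in enumerate(inside):
            labels[i] = ("B-" if k == 0 else "I-") + etype
    return [(tok, lab) for (tok, _, _), lab in zip(tokens, labels)]


# Invented example sentence with hypothetical annotations.
text = "Aspirin 81 mg PO daily"
anns = [("Drug", 0, 7), ("Strength", 8, 13), ("Route", 14, 16), ("Frequency", 17, 22)]
for token, label in char_spans_to_bio(text, anns):
    print(token, label)
```

Tokens that only partially overlap an annotation stay "O" in this sketch, and nested or overlapping annotations are flattened by overwriting; both are simplifying design choices rather than requirements of the data.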

Regarding the use of Transformer models for text mining, Wu et al. (2021) implemented the Transformer structure with an adaptation layer for information and communication technology (ICT) entity extraction. Al-Qurishi and Souissi (2021) proposed adding a CRF layer on top of the BERT model to carry out Arabic NER on mixed-domain data, such as news and magazines. Yan et al. (2019) demonstrated that an Encoder-only Transformer could improve on previous results for traditional NER tasks in comparison to BiLSTMs. Other related works include Zhang and Wang (2019), Gan et al. (2021), Zheng et al. (2021), and Wang and Su (2022), which applied Transformers and CRFs to spoken language understanding, Chinese NER, power-meter NER, and forest disease texts.
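A CRF layer on top of an encoder scores whole tag sequences by adding learned tag-to-tag transition scores to the encoder's per-token emission scores, and decoding picks the best sequence with the Viterbi algorithm. The sketch below shows only Viterbi decoding for a linear-chain CRF; the emission, transition and start scores are hand-set, hypothetical numbers (in practice they are learned), end-of-sequence transitions and training via the forward algorithm are omitted, and this is not the implementation used in the works cited above.

```python
from typing import List, Sequence


def viterbi_decode(emissions: Sequence[Sequence[float]],
                   transitions: Sequence[Sequence[float]],
                   start: Sequence[float]) -> List[int]:
    """Return the highest-scoring tag sequence for a linear-chain CRF.

    emissions[t][j]   : score of tag j at position t (e.g. from the encoder)
    transitions[i][j] : score of moving from tag i to tag j
    start[j]          : score of starting the sequence with tag j
    """
    n_tags = len(start)
    # score[j] = best total score of any path ending in tag j at the current position
    score = [start[j] + emissions[0][j] for j in range(n_tags)]
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score, pointers = [], []
        for j in range(n_tags):
            best_i = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            pointers.append(best_i)
        score = new_score
        backpointers.append(pointers)
    # Trace the best path back from the highest-scoring final tag.
    best = max(range(n_tags), key=lambda j: score[j])
    path = [best]
    for pointers in reversed(backpointers):
        best = pointers[best]
        path.append(best)
    return path[::-1]


# Hypothetical tags: 0 = "O", 1 = "B-Drug", 2 = "I-Drug".
emissions = [[2.0, 1.0, 0.0],   # position 0 favours "O"
             [0.0, 1.0, 1.5]]   # position 1 alone would favour "I-Drug"
transitions = [[0.0, 0.0, -1e4],  # "O" -> "I-Drug" is made (almost) impossible
               [0.0, 0.0, 0.0],
               [0.0, 0.0, 0.0]]
start = [0.0, 0.0, -1e4]          # a sequence should not start with "I-Drug"

print(viterbi_decode(emissions, transitions, start))  # [0, 1]
```

Taking the per-token argmax of the emissions would give `[0, 2]`, i.e. "O" followed by "I-Drug", which is not a valid BIO sequence; the transition scores let the CRF layer rule out such label sequences, which is the usual motivation for adding it.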

3 Methodology and Experimental Designs

Figure 1 displays the design of our investigation, which includes the PLMs BERT (Devlin et al., 2019), BioBERT (Lee et al., 2019), and ClinicalBERT (Alsentzer et al., 2019), in addition to an Encoder-only Transformer (Vaswani et al., 2017) that implements the "distilbert-base-cased" structure and is trained from scratch.

The first step is to adapt these models to Named Entity Recognition by adding an Adaptation (or

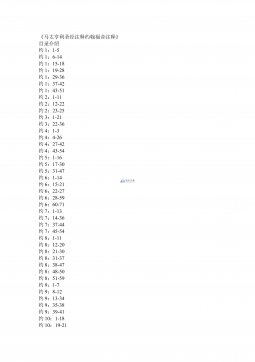
