Generating Synthetic Data with Locally Estimated Distributions for Disclosure Control

Ali Furkan Kalay

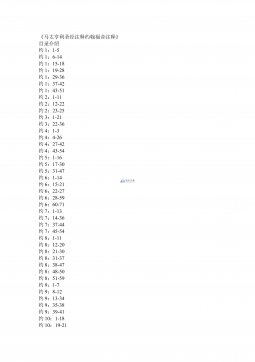

February 18, 2025

Abstract

Sensitive datasets are often underutilized in research and industry due to privacy concerns, limiting the potential of valuable data-driven insights. Synthetic data generation presents a promising solution to add...

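Related Recommendations

- Electrical Part of Power Plants (4th ed.) 07: Power Distribution Installations (2024-12-10)
- Electrical Part of Power Plants (4th ed.) 04-04: Methods for Limiting Short-Circuit Current (2024-12-10)
- Specification for the Transfer of State-Owned Land Use Rights by Agreement (2024-12-26)
- Bible Studies: Lamentations (2024-12-26)
- Morgan: The Gospel of Luke (2024-12-26)
- Matthew Henry's Bible Commentary: Commentary on the Gospel of John (2024-12-26)
- Gaoda: Interpretation of the Internal Control Standards for Administrative and Public Institutions 3.0 (99 pages) (2025-08-09)
- Explanation of the Reporting Indicators for the 2024 Internal Control Reports of Administrative and Public Institutions (2025-08-09)
- TBT3238-2010 Technical Specifications for EMU Traction Motors (2025-08-18)
- List and Examples of Supporting Materials for Internal Control Reports (Reference) (2025-11-20)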


Related Content

- TBT3241-2010 Mobile Rail Welding Vehicle (PDF, 2025-08-18)
- TBT3240-2010 High-Pressure Fuel Pipes for Diesel Engines of Diesel Locomotives (PDF, 2025-08-18)
- TBT3239-2010 Atlas for Metallographic Examination of Widmanstätten Structure in Microalloyed Railway Steel (PDF, 2025-08-18)
- TBT3238-2010 Technical Specifications for EMU Traction Motors (PDF, 2025-08-18)
- List and Examples of Supporting Materials for Internal Control Reports (Reference) (DOC, 2025-11-20)

Chongqing Public Security Network Registration No. 50010702506394
