Hierarchical reinforcement learning for in-hand robotic manipulation using Davenport chained rotations

Francisco Roldan Sanchez

Dublin City University

Insight Centre for Data Analytics

Dublin, Ireland

francisco.sanchez@insight-centre.org

Qiang Wang

University College Dublin

Dublin, Ireland

qiang.wang@ucdconnect.ie

David Cordova Bulens

University College Dublin

Dublin, Ireland

david.cordovabulens@ucd.ie

Kevin McGuinness

Dublin City University

Insight Centre for Data Analytics

Dublin, Ireland

kevin.mcguinness@insight-centre.org

Stephen J. Redmond

University College Dublin

Insight Centre for Data Analytics

Dublin, Ireland

stephen.redmond@ucd.ie

Noel E. O’Connor

Dublin City University

Insight Centre for Data Analytics

Dublin, Ireland

noel.oconnor@insight-centre.org

Abstract—End-to-end reinforcement learning techniques are among the most successful methods for robotic manipulation tasks. However, the training time required to find a good policy capable of solving complex tasks is prohibitively large. Therefore, depending on the computing resources available, it might not be feasible to use such techniques. Using domain knowledge to decompose manipulation tasks into primitive skills, performed in sequence, could reduce the overall complexity of the learning problem and hence the amount of training required to achieve dexterity. In this paper, we propose the use of Davenport chained rotations to decompose complex 3D rotation goals into a concatenation of simpler rotation skills drawn from a smaller set. State-of-the-art reinforcement-learning-based methods can then be trained using less overall simulated experience. We compare this learning approach with the popular Hindsight Experience Replay (HER) method, trained in an end-to-end fashion using the same amount of experience in a simulated robotic hand environment. Despite a general decrease in the performance of the primitive skills when they are executed in sequence, we find that decomposing arbitrary 3D rotations into elementary rotations is beneficial when computing resources are limited: it increases success rates by approximately 10% on the most complex 3D rotations relative to the HER-based approach trained end-to-end, and by between 20% and 40% on the simplest rotations.
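As a rough illustration of the decomposition idea, the sketch below splits a goal rotation into three single-axis rotations using the intrinsic z-y'-x'' (Tait-Bryan) convention, which is the special case of Davenport chained rotations with mutually orthogonal axes. The axis order, the assumption that the goal is expressed relative to the object's current orientation, and the helper names (`decompose_zyx`, `subgoal_sequence`) are illustrative assumptions, not the authors' implementation.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]  # 3x3 rotation matrix, row-major


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain 3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rot_x(angle: float) -> Matrix:
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(angle: float) -> Matrix:
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(angle: float) -> Matrix:
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def decompose_zyx(r: Matrix, eps: float = 1e-9) -> Tuple[float, float, float]:
    """Angles (psi, theta, phi) with r == rot_z(psi) @ rot_y(theta) @ rot_x(phi).

    theta is returned in [-pi/2, pi/2]. Near theta = +/-pi/2 (gimbal lock)
    only psi - phi (or psi + phi) is determined, so phi is fixed to 0.
    """
    cos_theta = math.hypot(r[0][0], r[1][0])  # equals |cos(theta)|
    theta = math.atan2(-r[2][0], cos_theta)
    if cos_theta < eps:
        return math.atan2(-r[0][1], r[1][1]), theta, 0.0
    psi = math.atan2(r[1][0], r[0][0])
    phi = math.atan2(r[2][1], r[2][2])
    return psi, theta, phi


def subgoal_sequence(r_goal: Matrix) -> List[Tuple[str, float, Matrix]]:
    """Split a goal rotation into three single-axis rotation sub-goals.

    Each entry is (body axis, angle in rad, orientation to reach after that
    step), with orientations expressed relative to the starting orientation.
    """
    psi, theta, phi = decompose_zyx(r_goal)
    current = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    plan = []
    for axis, angle, rot in (("z", psi, rot_z), ("y", theta, rot_y), ("x", phi, rot_x)):
        # Intrinsic rotation: right-multiplying rotates about the object's
        # current (already rotated) axes, as in a chained rotation sequence.
        current = matmul(current, rot(angle))
        plan.append((axis, angle, current))
    return plan


if __name__ == "__main__":
    goal = matmul(matmul(rot_z(0.7), rot_y(-0.4)), rot_x(1.2))
    for axis, angle, _ in subgoal_sequence(goal):
        print(f"rotate about body {axis}-axis by {angle:+.3f} rad")
```

In this reading, each sub-goal would be handed to a skill trained only for rotations about one axis, and the last orientation in the plan equals the original goal up to floating-point error. Orthogonal axes are chosen here only because the angle-extraction formulas are simple; Davenport chains with non-orthogonal axes would need a different extraction step.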

Index Terms—Robotic manipulation, deep reinforcement learning, hierarchical reinforcement learning

This publication has emanated from research supported by Science Foundation Ireland (SFI) under Grant Number SFI/12/RC/2289 P2, co-funded by the European Regional Development Fund, by Science Foundation Ireland Future Research Leaders Award (17/FRL/4832), and by China Scholarship Council (CSC No.202006540003). For the purpose of Open Access, the author has applied a CC BY public copyright licence to any Author Accepted Manuscript version arising from this submission.

I. INTRODUCTION

A primary reason why state-of-the-art reinforcement learning techniques take so long to train on manipulation tasks is that these tasks are very complex to solve without domain knowledge [1]–[3]. However, most manipulation tasks can be decomposed into a number of simpler subtasks that a robot can learn with much less training experience [4], [5]. For example, robots can easily learn how to reach a point in space, or how to push an object, when these tasks are trained independently [6]. However, if the robot needs to learn from scratch how to both reach for an object and then push it, the required training time increases nonlinearly, meaning that learning the combined task often requires more simulated experience overall than learning each skill independently [7].

Training time becomes a critical concern for highly complex tasks, such as the block manipulation tasks in OpenAI’s Gym environment [8]. The most popular method for learning in this kind of goal-based environment is Hindsight Experience Replay (HER) [9] trained in conjunction with Deep Deterministic Policy Gradient (DDPG) [1]. HER successfully solves most tasks implemented in this environment, but the amount of simulated experience it requires for training is immense, exceeding 38 × 10⁷ time steps in the most complex tasks, and even then it is not able to find an optimal policy that solves all object rotation goals. Furthermore, it requires 19 CPU cores to generate simulated experience in parallel [10].

Traditional robotic manipulation systems usually define a hierarchy of primitive and non-primitive tasks [11]–[13]. Non-primitive actions are compositions of primitive actions performed in a particular order, where a primitive action is one that cannot or should not be further divided into

arXiv:2210.00795v2 [cs.RO] 15 Nov 2022

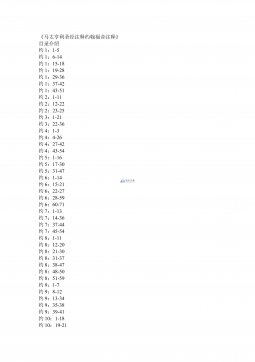
