
ProGReST: Prototypical Graph Regression Soft Trees for Molecular Property Prediction

Dawid Rymarczyk∗† Daniel Dobrowolski∗ Tomasz Danel∗

Abstract

In this work, we propose the novel Prototypical Graph Regression Self-explainable Trees (ProGReST) model, which combines prototype learning, soft decision trees, and Graph Neural Networks. In contrast to other works, our model can be used to address various challenging tasks, including compound property prediction. In ProGReST, the rationale is obtained along with the prediction thanks to the model's built-in interpretability. Additionally, we introduce a new graph prototype projection to accelerate model training. Finally, we evaluate ProGReST on a wide range of chemical datasets for molecular property prediction and perform an in-depth analysis with chemical experts to evaluate the obtained interpretations. Our method achieves competitive results against state-of-the-art methods.

Key words. Drug design; Graph Neural Networks; Interpretability; Deep Learning

1 Introduction

In chemistry, accurate and rapid examination of compounds is often the key to successful drug discovery. Searching through millions of compounds, synthesizing them, and evaluating their properties consumes astounding amounts of money and does not guarantee any success at the end of the discovery process. That is why in silico molecular property prediction is now indispensable in modern drug discovery, material design, synthesis planning, etc. Computational methods can accelerate compound screening and mitigate the risk of selecting futile compounds for in vitro examination.

Recent advancements in deep learning, especially

in Graph Neural Networks (GNNs), raised the us-

ability of in vitro cheminformatics tools to the next

level [7]. Tasks such as molecular property prediction,

detection of active small molecules, hit identification,

and optimization can be accelerated with models such

∗Faculty of Mathematics and Computer

Science, Jagiellonian University, Krakow,

Poland. (dawid.rymarczyk@student.uj.edu.pl,

daniel.dobrowolski@student.uj.edu.pl,

tomasz.danel@doctoral.uj.edu.pl)

†Ardigen SA, Krakow, Poland.

2

prediction

prototypes

molecule

because

+

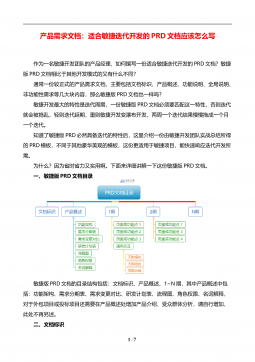

Figure 1: Overview of the ProGReST approach. Molec-

ular substructures are matched against the trained pro-

totypical parts, and the prediction is based on the com-

bination of these features.

as Molecule Attention Transformer (MAT) [25], Deep-

GLSTM [28], and Junction Tree Variational Autoen-

coder (JT-VAE) [16]. Despite the early adoption of

artificial intelligence (AI) methods in the drug design

process, the initial results are encouraging [24]. Unfor-

tunately, most AI methods do not offer insight into the

reasoning behind the decision process.

Due to the complexity of biological systems and drug design processes, insights into the knowledge gathered by the deep learning model are highly sought after. Even if the model fails to achieve its goals, the explainability component can give hints to the medicinal chemist, e.g. by showing a mechanistic interpretation of the drug action [15]. Most current eXplainable Artificial Intelligence (XAI) approaches are post-hoc methods applied to already trained models [43]. However, the reliability of those methods is questionable [34]: they assume that a second model is built to explain an existing trained model. This may unnecessarily increase the bias in the explanations, which then comes from both the trained model and the post-hoc model. That is why self-interpretable models are being developed, such as self-explainable neural networks (SENN) [2] and Prototype Graph Neural Network (ProtGNN) [46]. Only the latter can be applied to the graph prediction problem.

Copyright ©2023 by SIAM

Unauthorized reproduction of this article is prohibited

arXiv:2210.03745v2 [q-bio.QM] 27 Dec 2022

However, ProtGNN is designed for classification problems only, since it requires a fixed assignment of prototypes to the classes. In a regression problem, the model predicts a single value, which makes such an assignment impossible. To overcome the limited applicability of ProtGNN, we introduce the Prototypical Graph Regression Soft Trees (ProGReST) model, which is suitable for graph regression problems, common in molecular property prediction [41]. It employs prototypical parts [6] (in this paper, we use the terms "prototypical parts" and "prototypes" interchangeably) that preserve information about activation patterns and ensure intrinsic interpretability (see Fig. 1). Prototypes are derived from the training examples and used to explain the model's decision. To build a model with prototypes, we use Soft Neural Trees [8].
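To make the soft-tree idea concrete, the following is a minimal generic sketch of how a soft binary tree can turn routing probabilities into a single regression value. It is not the ProGReST implementation: the sigmoid gates on linear scores, the heap-ordered tree layout, and the names `soft_tree_predict`, `gate_w`, `gate_b`, and `leaf_values` are all assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def soft_tree_predict(x, gate_w, gate_b, leaf_values):
    """Predict with a complete soft binary tree stored in heap order.

    Assumed layout (hypothetical, for illustration only):
      gate_w: (n_internal, d), gate_b: (n_internal,),
      leaf_values: (n_internal + 1,).
    Internal node i has children 2*i + 1 (left) and 2*i + 2 (right).
    """
    n_internal = gate_w.shape[0]
    reach = np.zeros(2 * n_internal + 1)
    reach[0] = 1.0  # every input reaches the root with probability 1
    for i in range(n_internal):
        p_right = sigmoid(gate_w[i] @ x + gate_b[i])
        reach[2 * i + 1] = reach[i] * (1.0 - p_right)
        reach[2 * i + 2] = reach[i] * p_right
    leaf_reach = reach[n_internal:]  # leaves are the last n_internal + 1 nodes
    # The output is the leaf values averaged by the probability of reaching each leaf.
    return float(leaf_reach @ leaf_values), leaf_reach

rng = np.random.default_rng(0)
x = rng.normal(size=5)
gate_w = rng.normal(size=(3, 5))  # depth-2 tree: 3 internal nodes, 4 leaves
gate_b = np.zeros(3)
leaf_values = np.array([-1.0, 0.0, 1.0, 2.0])
prediction, leaf_reach = soft_tree_predict(x, gate_w, gate_b, leaf_values)
```

Because every input reaches every leaf with some probability, the prediction is differentiable with respect to the gate parameters, and the most probable root-to-leaf path can be read out as an explanation.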

Since the regression task is more challenging than classification, it also requires more training epochs for a model to converge. Moreover, prototypical-part-based methods periodically use a projection operation [6, 46] to enforce the closeness of prototypes to the training data. In ProtGNN, projection is based on a Monte Carlo Tree Search (MCTS) algorithm that requires a lot of computational time to find meaningful prototypes. In ProGReST, we propose a proxy projection to reduce the training time and perform the MCTS-based projection only at the end to ensure the full interpretability of the derived prototypes.
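As an illustration of what a projection step does in general, the sketch below replaces each prototype with its nearest training embedding, in the spirit of prototype projection in [6]. It shows neither the MCTS-based search of ProtGNN nor the proxy projection proposed here, whose details are not given in this section; the candidate embeddings are simply assumed to be available, and the names `project_prototypes` and `train_embeddings` are hypothetical.

```python
import numpy as np

def project_prototypes(prototypes, train_embeddings):
    """Replace each prototype with its nearest training embedding (squared L2)."""
    # Pairwise squared distances, shape (n_prototypes, n_embeddings).
    diff = prototypes[:, None, :] - train_embeddings[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    nearest = np.argmin(dist2, axis=1)
    return train_embeddings[nearest].copy(), nearest

# Toy data: three candidate embeddings and two learned prototypes.
train_embeddings = np.array([[0.0, 0.0], [1.0, 1.0], [4.0, 0.0]])
prototypes = np.array([[0.9, 1.2], [3.0, 0.5]])
projected, source_index = project_prototypes(prototypes, train_embeddings)
```

After projection, each prototype coincides with a concrete training example (`source_index` records which one), which is what allows it to be shown to a user as an explanation.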

ProGReST achieves state-of-the-art results on five cheminformatics datasets for molecular property prediction and provides intuitive explanations of its predictions in the form of a tree. We also discussed the findings of ProGReST with chemists to validate the usability of our model.

Our contributions can be summarized as follows:

• we introduce ProGReST, a self-explainable prototype-based model for regression of molecular properties,

• we employ a tree-based model to derive meaningful prototypes,

• we define a novel proxy projection function that substantially accelerates the training process.

2 Related Works

2.1 Molecular property prediction The accurate prediction of molecular properties is critical in chemical modeling. In machine learning, chemical compounds can be described using calculated molecular descriptors, which are computed as a function of the compound structure [37]. Many successful applications of machine learning in drug discovery utilize chemical structures directly by employing molecular fingerprints [5] or molecular graphs as an input to the model [9].

Currently, molecular graphs are the preferred representation in cheminformatics because they can capture the nonlinear structure of the data. In a molecular graph, atoms are represented as nodes, and chemical bonds as edges. Each atom is attributed with atomic features that encode the chemical symbol of the atom and other relevant properties [32]. This graph representation can be processed by graph neural networks that learn a molecule-level vector representation of the compound and use it for property prediction. Graph neural networks usually implement the message passing scheme [11], in which information is passed between nodes along the edges, and the atom features are updated [45]. However, more recent architectures focus on modeling long-range dependencies between atoms, e.g. by implementing graph transformers [26].
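The representation described above can be sketched in a few lines. The example below encodes the heavy atoms of ethanol as a graph and applies one simplified message-passing step followed by a sum readout. The one-hot vocabulary, the sum aggregation with a self-loop, and the identity weight matrix are assumptions chosen to keep the numbers easy to follow; they do not correspond to any specific architecture cited here.

```python
import numpy as np

# Ethanol heavy atoms: C0 - C1 - O2 (hydrogens omitted for brevity).
symbols = ["C", "C", "O"]
bonds = [(0, 1), (1, 2)]

# Node features: one-hot encoding of the chemical symbol (toy vocabulary).
vocab = ["C", "N", "O"]
features = np.array([[1.0 if s == v else 0.0 for v in vocab] for s in symbols])

# Edges: symmetric adjacency matrix built from the bond list.
n = len(symbols)
adjacency = np.zeros((n, n))
for i, j in bonds:
    adjacency[i, j] = adjacency[j, i] = 1.0

def message_passing_step(h, adj, weight):
    """Sum the features of each atom and its neighbors, then apply a linear map and ReLU."""
    aggregated = (adj + np.eye(adj.shape[0])) @ h
    return np.maximum(aggregated @ weight, 0.0)

weight = np.eye(3)  # identity weights so the result is easy to inspect
hidden = message_passing_step(features, adjacency, weight)
molecule_vector = hidden.sum(axis=0)  # sum readout over atoms
```

After one step, the middle carbon already "knows" about the neighboring oxygen; stacking more steps widens each atom's receptive field, which is why long-range dependencies need many layers or a different mechanism such as attention.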

2.2 Interpretability of deep learning Methods for explaining deep learning models can be divided into post-hoc and interpretable ones [34]. The former create an explainer that reveals the reasoning process of a black-box model. Post-hoc methods include saliency maps [3], which highlight crucial parts of the input, and Concept Activation Vectors (CAV), which use concepts to explain the neural network predictions [17]. Other methods analyze the output of the model under perturbations of the input [33] or determine the contribution of a given feature to a prediction [44]. Implementing post-hoc methods is straightforward since they require no intervention in the model's architecture. However, they can produce biased and unreliable explanations [1]. That is why more attention has recently been paid to designing self-explainable models [2] that make the decision process directly visible. A widely used self-explainable model, ProtoPNet [6], has a hidden layer of prototypes representing the activation patterns.
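A prototype layer of this kind can be sketched as follows, using the log-ratio similarity from ProtoPNet [6] and taking the maximum over the parts of one input. Treating the rows of `latent_parts` as embeddings of parts of a single input (image patches, or node embeddings for a graph) is an assumption for illustration, as are the function name and the value of `eps`.

```python
import numpy as np

def prototype_activations(latent_parts, prototypes, eps=1e-4):
    """Similarity of each prototype to its best-matching part of one input."""
    diff = latent_parts[:, None, :] - prototypes[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)  # shape (n_parts, n_prototypes)
    # Large when a part is close to the prototype, near zero when it is far.
    similarity = np.log((dist2 + 1.0) / (dist2 + eps))
    return similarity.max(axis=0), similarity.argmax(axis=0)

latent_parts = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
prototypes = np.array([[1.0, 0.0], [0.0, 1.9]])
activations, best_part = prototype_activations(latent_parts, prototypes)
```

The index in `best_part` identifies which part of the input triggered each prototype, which is the basis of the "this looks like that" style of explanation.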

Many works have extended ProtoPNet, such as TesNet [38], which employs the Grassmann manifold to find prototypes. Methods like ProtoPShare [35], ProtoPool [36], and ProtoTree [29] reduce the number of prototypes used. These solutions have been widely adopted in various fields such as medical imaging [18] and graph classification [46]. Yet none of them considers regression.

3 ProGReST

3.1 Architecture The architecture of ProGReST, depicted in Fig. 2, consists of a graph representation
