Published at 1st Conference on Lifelong Learning Agents, 2022

effects between factors, not just the isolated effects of the modifications they introduce. This reinforces the case for

agents leveraging these systematic curricula, originally designed for human players, through transfer learning.

Contributions: (1) We show that discrete changes to Atari game environments, challenging for human players, also

modulate the performance of a popular model-free deep reinforcement learning algorithm starting from scratch. (2) Zero-shot

transfer of policies trained on one game variation and tested on others can be significant, but performance is far

from uniform across respective environments. (3) Interestingly, zero-shot transfer variance from default game experts

is also well explained by game design factors, especially their interactions. (4) We empirically evaluate the performance

of value-function finetuning, a general transfer learning technique compatible with model-free deep RL, and

confirm that it can lead to positive transfer from basic game experts. (5) We point out that more complex challenges of

transfer with deep RL are captured by Atari game variations, e.g. appropriate source task selection, fast policy transfer

and evaluation using experts, as well as data efficient behaviour adaptation more generally.

2 BACKGROUND

Reinforcement Learning. A Markov Decision Process (MDP) (Puterman, 1994) is the classic abstraction used to
characterise the sequential interaction loop between an action-taking agent and its environment, which responds with
observations and rewards (Sutton & Barto, 2018). Formally, a finite MDP is a tuple
$M = \langle \mathcal{X}, \mathcal{A}, T, R, \gamma \rangle$, where $\mathcal{X}$ is the state space and $\mathcal{A}$
is the action space, both finite sets; $T : \mathcal{X} \times \mathcal{A} \times \mathcal{X} \mapsto [0, 1]$ is the
stochastic transition function, which maps each state and action to a probability distribution over possible future
states, $T(x, a, x') = P(x' \mid x, a)$; $R : \mathcal{X} \times \mathcal{A} \times \mathcal{X} \mapsto \mathbb{R}$ is
the reward distribution function, with $r : \mathcal{X} \times \mathcal{A} \mapsto \mathbb{R}$ the expected immediate
reward, $r(x, a) = \mathbb{E}_T[R(x, a, x')]$; and $\gamma \in [0, 1]$ is the discount factor (Bellman, 1957). A
stochastic policy function is the action selection strategy $\pi : \mathcal{X} \times \mathcal{A} \mapsto [0, 1]$,
which maps states to probability distributions over actions, $\pi(x) = P(a \mid x)$. The discounted sum of future
rewards is the random variable $Z^\pi_M(x, a) = \sum_{t=0}^{\infty} \gamma^t r(x_t, a_t)$, where $x_0 = x$, $a_0 = a$,
$x_t \sim T(\cdot \mid x_{t-1}, a_{t-1})$ and $a_t \sim \pi(\cdot \mid x_t)$. Given an MDP $M$ and a policy $\pi$, the
value function is the expectation over the discounted sum of future rewards, also called the expected return:
$V^\pi_M(x) = \mathbb{E}[Z^\pi_M(x, \pi(x))]$. The goal of Reinforcement Learning (RL) is to find a policy
$\pi^*_M : \mathcal{X} \mapsto \mathcal{A}$ which is optimal, in the sense that it maximises expected return in $M$.
The state-action Q-function is defined as $Q^\pi_M(x, a) = \mathbb{E}[Z^\pi_M(x, a)]$ and it satisfies the Bellman
equation $Q^\pi_M(x, a) = \mathbb{E}_T[R(x, a, x')] + \gamma \mathbb{E}_{T,\pi}[Q^\pi_M(x', a')]$ for all states
$x \in \mathcal{X}$ and actions $a \in \mathcal{A}$. Mnih et al. (2013) adapted a reinforcement learning algorithm
called Q-Learning (Watkins, 1989) to train deep neural networks end-to-end, mastering several Atari 2600 games, with
inputs consisting of high-dimensional observations, in the form of console screen pixels, and differences in game
scores. For more details please consult Appendix A.
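As a minimal sketch of the Bellman equation for the state-action Q-function, the following Python snippet evaluates a fixed stochastic policy on a tiny tabular MDP by repeatedly applying the Bellman backup. The two-state, two-action MDP, its transition probabilities, rewards, policy and the discount of 0.9 are all made-up values chosen for illustration, and the function names are hypothetical; none of them come from the experiments in this paper.

```python
GAMMA = 0.9  # discount factor (illustrative value)

# T[x][a][x2] = P(x2 | x, a); each innermost list sums to 1.
T = [
    [[0.8, 0.2], [0.1, 0.9]],
    [[0.5, 0.5], [0.0, 1.0]],
]
# R[x][a][x2] = reward received on the transition (x, a, x2).
R = [
    [[0.0, 1.0], [0.0, 2.0]],
    [[1.0, 0.0], [0.0, 0.5]],
]
# PI[x][a] = P(a | x), a fixed stochastic policy.
PI = [[0.5, 0.5], [0.2, 0.8]]


def bellman_backup(q, T, R, pi, gamma):
    """Apply the right-hand side of the Bellman equation once to every (x, a)."""
    n_states, n_actions = len(T), len(T[0])
    new_q = [[0.0] * n_actions for _ in range(n_states)]
    for x in range(n_states):
        for a in range(n_actions):
            for x2 in range(n_states):
                # Expected Q-value at the next state under the policy.
                next_value = sum(pi[x2][a2] * q[x2][a2] for a2 in range(n_actions))
                new_q[x][a] += T[x][a][x2] * (R[x][a][x2] + gamma * next_value)
    return new_q


def evaluate_q(T, R, pi, gamma, sweeps=1000):
    """Iterate the backup from Q = 0; it contracts towards Q^pi when gamma < 1."""
    q = [[0.0] * len(T[0]) for _ in range(len(T))]
    for _ in range(sweeps):
        q = bellman_backup(q, T, R, pi, gamma)
    return q


def bellman_residual(q, T, R, pi, gamma):
    """Largest absolute violation of the Bellman equation over all (x, a)."""
    backed_up = bellman_backup(q, T, R, pi, gamma)
    return max(
        abs(q[x][a] - backed_up[x][a])
        for x in range(len(q))
        for a in range(len(q[0]))
    )


def state_values(q, pi):
    """V(x) as the policy-weighted average of Q(x, a)."""
    return [sum(pi[x][a] * q[x][a] for a in range(len(q[x]))) for x in range(len(q))]


q = evaluate_q(T, R, PI, GAMMA)
print("Q:", q)
print("V:", state_values(q, PI))
print("Bellman residual:", bellman_residual(q, T, R, PI, GAMMA))
```

The residual printed at the end should be close to zero, which is a quick check that the returned table satisfies the Bellman equation for this toy MDP. Deep Q-Learning replaces the table with a neural network and the exact expectation with sampled transitions, which this sketch does not attempt to show.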

Note that value functions depend critically on all aspects of the MDP. For any policy $\pi$, in general
$Q^\pi_M \neq Q^\pi_{M'}$ for MDPs $M$ and $M'$ defined over the same state and action sets, with $T \neq T'$ or
$R \neq R'$. Even if differences in dynamics or rewards are isolated to a subset of $\mathcal{X} \times \mathcal{A}$,
changes may be induced across the support of $Q^\pi_{M'}$, since value functions are expectations over sums of
discounted future rewards, issued according to $R'$, along sequences of states decided entirely by the new environment
dynamics $T'$ when following a fixed behaviour policy $\pi$. Nevertheless, many particular cases of interest exist,
which we discuss below.
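The propagation of a local change can be illustrated with a toy example. The sketch below assumes a hypothetical three-state deterministic chain with a single action (so the Q-function and value function coincide), and two reward tables that differ only for the transition leaving state 1. All states, rewards and the discount of 0.9 are invented for illustration.

```python
GAMMA = 0.9  # discount factor (illustrative value)

# Deterministic chain with one action: 0 -> 1 -> 2 -> 2 (state 2 is absorbing).
NEXT_STATE = {0: 1, 1: 2, 2: 2}

# M and M' share dynamics; rewards differ only when leaving state 1.
REWARD_M = {0: 0.0, 1: 1.0, 2: 0.0}
REWARD_M_PRIME = {0: 0.0, 1: 5.0, 2: 0.0}


def discounted_return(start, next_state, reward, gamma, horizon=200):
    """Truncated sum of gamma**t * r(x_t) along the trajectory from `start`."""
    total, x = 0.0, start
    for t in range(horizon):
        total += (gamma ** t) * reward[x]
        x = next_state[x]
    return total


q_m = {x: discounted_return(x, NEXT_STATE, REWARD_M, GAMMA) for x in NEXT_STATE}
q_m_prime = {
    x: discounted_return(x, NEXT_STATE, REWARD_M_PRIME, GAMMA) for x in NEXT_STATE
}

# States whose value differs between M and M'.
changed = [x for x in NEXT_STATE if abs(q_m[x] - q_m_prime[x]) > 1e-12]
print("Q in M: ", q_m)
print("Q in M':", q_m_prime)
print("States with changed values:", changed)
```

Although the reward was modified only at state 1, the value at state 0 also changes, because trajectories from state 0 pass through state 1. State 2 is unaffected since it never reaches the modified transition.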

Transfer learning. Described and motivated in its general form by Caruana (1997); Thrun & Pratt (1998); Bengio

(2012), the goal of transfer learning is to use knowledge acquired from one or more source tasks to improve the

learning process, or its outcomes, for one or more target tasks, e.g. by using fewer resources compared to learning

from scratch. When this is achieved, we call it positive transfer. We often further qualify transfer learning by the

metric used to measure specific effects. Several transfer learning metrics have been defined for the RL setting (Taylor

& Stone, 2009), but none capture all aspects of interest on their own. A first metric we use is “jumpstart” or “zero-shot”

transfer, which is performance on the target task before gaining access to its data. Another highly relevant metric is

“performance with fixed training epochs” (Zhu et al., 2020), defined as returns achieved in the target task after using

fixed computation and data budgets under transfer learning conditions.
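One possible way to compute the two metrics from learning curves is sketched below. It assumes each curve is a list of evaluation returns on the target task, with index 0 recorded before any training on target data and later indices recorded after equal-sized training budgets. The curves, the function names and the simple comparison used to flag positive transfer are hypothetical and are not taken from the results reported in this paper.

```python
def zero_shot(curve):
    """Return at checkpoint 0, i.e. before any training on target-task data."""
    return curve[0]


def performance_at_budget(curve, budget):
    """Return at the checkpoint reached after `budget` training units."""
    if not 0 <= budget < len(curve):
        raise ValueError("budget must index an existing checkpoint")
    return curve[budget]


def is_positive_transfer(transfer_curve, scratch_curve, budget):
    """True if the transferred agent beats learning from scratch at the same budget."""
    return performance_at_budget(transfer_curve, budget) > performance_at_budget(
        scratch_curve, budget
    )


# Made-up evaluation returns, one entry per checkpoint (index 0 = no target training).
scratch_curve = [2.0, 10.0, 35.0, 60.0, 80.0]
transfer_curve = [25.0, 40.0, 65.0, 85.0, 90.0]

print("Zero-shot (transfer):", zero_shot(transfer_curve))
print("Zero-shot (scratch): ", zero_shot(scratch_curve))
print("Return at budget 2 (transfer):", performance_at_budget(transfer_curve, 2))
print("Positive transfer at budget 2:", is_positive_transfer(transfer_curve, scratch_curve, 2))
```

Comparing at a fixed budget keeps computation and data equal between the two agents, so a difference in returns can be attributed to the transferred knowledge and not to extra training.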

One way to classify such approaches in RL is by the format of knowledge being transferred (Zhu et al., 2020),
commonly: datasets, predictions, and/or parameters of neural networks encoding representations of observations,
policies, value-functions or approximate state-transition “world models” acquired using source tasks. Another way to
classify transfer learning approaches in RL is by their respective sets of assumptions about source and target tasks,
also well illustrated by their associated benchmark domains: (1) Differences are limited to observations, but the
underlying MDP is the same, e.g. domain adaptation and randomisation (Tobin et al., 2017), mastered through the
generalisation abilities of large models (Cobbe et al., 2019; 2020). (2) MDP states and dynamics are the same, but
reward functions are different: successor features and representations (Barreto et al., 2017). (3) Overlapping state
spaces with similar dynamics, e.g. multitask training and policy transfer (Rusu et al., 2016a; Schmitt et al., 2018),
contextual parametric approaches, e.g. UVFAs (Schaul et al., 2015) and collections of skills/goals/options
(Barreto et al., 2019). (4) Sus-


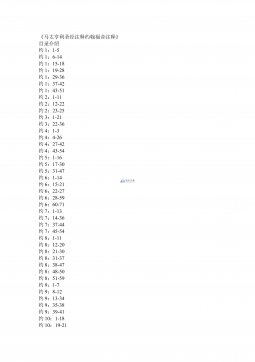
