Policy Gradients for Probabilistic Constrained

Reinforcement Learning

Weiqin Chen

Department of Electrical,

Computer, and Systems Engineering

Rensselaer Polytechnic Institute

Troy, NY, USA

chenw18@rpi.edu

Dharmashankar Subramanian

IBM T. J. Watson Research Center

Yorktown Heights, NY, USA

dharmash@us.ibm.com

Santiago Paternain

Department of Electrical,

Computer, and Systems Engineering

Rensselaer Polytechnic Institute

Troy, NY, USA

paters@rpi.edu

Abstract—This paper considers the problem of learning safe

policies in the context of reinforcement learning (RL). In particu-

lar, we consider the notion of probabilistic safety. This is, we aim

to design policies that maintain the state of the system in a safe

set with high probability. This notion differs from cumulative

constraints often considered in the literature. The challenge of

working with probabilistic safety is the lack of expressions for

their gradients. Indeed, policy optimization algorithms rely on

gradients of the objective function and the constraints. To the

best of our knowledge, this work is the first one providing such

explicit gradient expressions for probabilistic constraints. It is

worth noting that the gradient of this family of constraints can

be applied to various policy-based algorithms. We demonstrate

empirically that it is possible to handle probabilistic constraints

in a continuous navigation problem.

Index Terms—reinforcement learning, probabilistic constraint,

safe policy, policy gradient

I. INTRODUCTION

Reinforcement learning (RL) has gained traction as a

solution to the problem of computing policies to perform

challenging and high-dimensional tasks, e.g., playing video

games [1], mastering Go [2], robotic manipulation [3] and

locomotion [4], etc. However, in general, RL algorithms are

only concerned with maximizing a cumulative reward [5], [6],

which may lead to risky behaviors [7] in realistic domains

such as robot navigation [8].

Taking into account the safety requirements motivates the

development of policy optimization under safety guaran-

tees [9]–[11]. Some approaches consider risk-aware objectives

or regularized solutions where the reward is modified to

take into account the safety requirements [12]–[14]. Other

formulations include Constrained Markov Decision Processes

(CMDPs) [15] where additional cumulative (or average) re-

wards need to be kept above a desired threshold. This approach

has been commonly used to induce safe behaviors [16]–

[24]. To solve these constrained problems, primal-dual algo-

rithms [16]–[20] combined with classical and state-of-the-art

policy-based algorithms, e.g., REINFORCE [25], DDPG [26],

TRPO [27], PPO [28] are generally used.

In this cumulative constraint setting, safety violations are

acceptable as long as the amount of violations does not exceed

This work was supported by the IBM Rensselaer Research Collaboration.

the desired thresholds. This makes them often not suitable

for safety-critical applications. For instance, in the context of

autonomous driving, even one collision is unacceptable.

A more suitable notion of safety in this context is to guaran-

tee that the whole trajectory of the system remains within a set

that is deemed to be safe (see e.g., [29]). Ideally, one would

like to achieve this goal for every possible trajectory. This

being an ambitious goal, in this work we settle for solutions

that guarantee every time safety with high probability. We

describe this setting in detail in Section II.

The main challenge in solving problems under probabilistic

safety constraints is that explicit policy gradient-like expres-

sions for such constraints are not readily available. Indeed in

[20], [30] replace this constraint with a suitable lower bound.

In [31] an approximation of the gradient is also provided.

These limitations, prevent us from running the aforementioned

policy-based algorithms. In Section III, we present the main

contribution of this work: an expression for the gradient

that enables stochastic approximations. Other than conclud-

ing remarks, the paper finishes (Section IV) with numerical

examples that demonstrate the use of the probabilistic safe

gradient to train safe policies in a navigation task.

II. PROBLEM FORMULATION

In this work, we consider the problem of finding optimal

policies for Markov Decision Processes (MDPs) under prob-

abilistic safety guarantees. In particular, we are interested in

situations where the state transition distributions are unknown

and thus the policies need to be computed from data. An

MDP [32] is defined by a tuple (S,A, r, P, µ, T ), where S

is the state space, Ais the action space, r:S × A → R

is the reward function describing the quality of the decision,

Pat

st→st+1 (ˆ

S) := P(st+1 ∈ˆ

S | st, at)where ˆ

S ⊂ S, st∈

S, at∈ A, t ∈Nis the transition probability describing the

dynamics of the system, µ(ˆ

S) := P(s∈ˆ

S)is the initial state

distribution, and Tis the time horizon. The state and action at

time tare random variables denoted respectively by Stand At.

Apolicy is a conditional distribution πθ(a|s)parameterized

by θ∈Rd(for instance the weights and biases of neural

networks), from which the agent draws action a∈ A when

in the corresponding state s∈ S. In the context of MDPs the

arXiv:2210.00596v2 [cs.LG] 18 Apr 2023

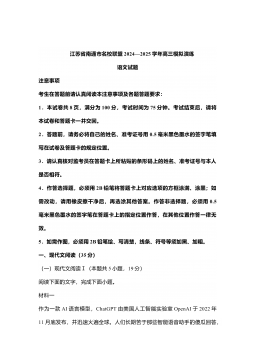

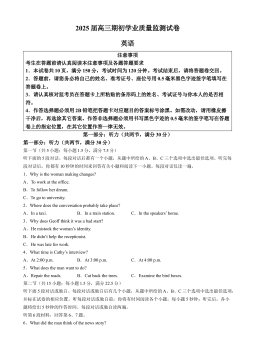

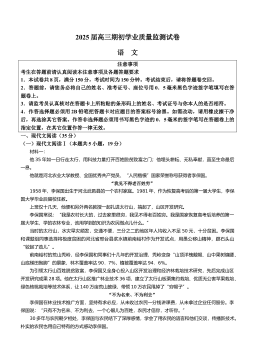

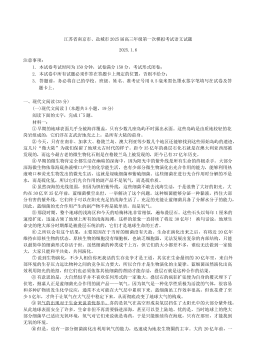

2024-12-06 5

2024-12-06 5

2024-12-06 16

2024-12-06 16

2024-12-06 30

2024-12-06 30

2024-12-06 27

2024-12-06 27

2024-12-06 33

2024-12-06 33

2024-12-06 14

2024-12-06 14

2024-12-06 38

2024-12-06 38

2024-12-06 20

2024-12-06 20

2024-12-06 53

2024-12-06 53

2024-12-06 33

2024-12-06 33

渝公网安备50010702506394

渝公网安备50010702506394