1 Introduction

Named Entity Recognition is an essential task in natural

language processing that aims to recognize the boundaries

and types of entities with specific meanings in the text,

including names of people, places, institutions, etc. The

Named Entity Recognition task is not only a vital tool for

information extraction, but also a crucial component in

many downstream tasks, such as text understanding [1].

Named entity recognition is usually modeled as a sequence

labeling problem and can be efficiently solved by an RNN-

based approach [

2

]. The sequence labeling modeling ap-

proach simplifies the problem based on the assumption

that entities never nested with each other. However, en-

tities may be overlapping or deeply nested in real-world

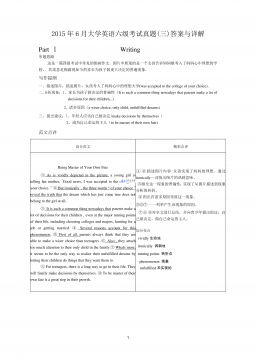

language environments, as in Figure 1. More and more

studies are exploring modified models to deal with this

more complex situation.

[Figure 1 content: three example sentences, each prefixed by a nesting-type abbreviation. ME: Chronic diseases identified: Hypertension. NDT: Cytomegalovirus modulates interleukin-6 gene expression. NST: Characterization of the human elk-1 promoter. Entity labels shown: DIS, ABBR, DNA, PRO.]

Figure 1: Example of entity nesting from GENIA [3] and Chilean Waiting List [4]. The colored arrows indicate the category and span of the entities. The bolded black abbreviations denote the type of entity nesting.
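The flat-labeling assumption of sequence labeling can be illustrated with a minimal sketch. The BIO scheme, the `encode_bio` helper, the token list, and the span offsets and labels below are illustrative assumptions, not taken from the paper or from the GENIA annotations; the point is only that one tag per token cannot hold two overlapping spans.

```python
from typing import List, Tuple

Span = Tuple[int, int, str]  # (start, end_exclusive, label); hypothetical format


def encode_bio(n_tokens: int, spans: List[Span]) -> List[str]:
    """Encode spans as one BIO tag per token; fail if two spans overlap."""
    tags = ["O"] * n_tokens
    for start, end, label in spans:
        if any(tag != "O" for tag in tags[start:end]):
            raise ValueError(f"span ({start}, {end}, {label}) overlaps an earlier span")
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags


tokens = ["the", "human", "elk-1", "promoter"]

# A single (flat) entity is encoded without difficulty.
flat = encode_bio(len(tokens), [(1, 4, "DNA")])

# Assumed nested annotation: an inner span inside the outer span.
nested = [(1, 4, "DNA"), (2, 3, "DNA")]
try:
    encode_bio(len(tokens), nested)
    nested_ok = True
except ValueError:
    nested_ok = False  # the inner span collides with the outer span's tags
```

A single tag sequence therefore forces a choice between the outer and the inner entity, which is the limitation that nested NER models try to remove.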

Some works, such as [5], employ a layered model to handle entity nesting. The model iteratively annotates the output of the previous layer until it reaches the maximum number of iterations or generates no new entities. Nevertheless, these models suffer from interlayer disarrangement: the model may output a nested entity from the wrong layer and pass the error on to subsequent iterations. The main reason for this phenomenon is that the target layer for generating a nested entity is determined by its nesting level rather than by its semantics or structure.

Other works, such as [6, 7], identify nested entities by enumerating entity proposals. Although these methods are theoretically perfect, they still face difficult model training, high complexity, and excessive negative samples. These obstacles stem from the fact that the enumeration approach does not take into account the a priori structural nature of nested entities.
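To make the cost of enumeration concrete, the following minimal sketch counts candidate spans for a short sentence. The `enumerate_spans` helper, the sentence length, and the gold spans are hypothetical and are not the procedure of [6, 7]; the sketch only shows that the number of candidates grows quadratically with sentence length and that almost all of them are negatives.

```python
from typing import List, Tuple


def enumerate_spans(n_tokens: int, max_len: int) -> List[Tuple[int, int]]:
    """Return every (start, end_exclusive) span of at most max_len tokens."""
    return [
        (start, end)
        for start in range(n_tokens)
        for end in range(start + 1, min(start + max_len, n_tokens) + 1)
    ]


n = 10
candidates = enumerate_spans(n, max_len=n)  # n * (n + 1) / 2 spans in total
gold = {(1, 4), (2, 3)}                     # hypothetical gold entity spans
negatives = [span for span in candidates if span not in gold]
```

With 10 tokens and 2 gold entities, 53 of the 55 candidates are negative samples. Capping the span length (the `max_len` argument) reduces the count but can miss long entities.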

In recent years, graph neural networks have received considerable attention. Most early graph neural networks, such as [8], operate on homogeneous graphs. However, the graphs encountered in practical applications are generally heterogeneous, with nodes and edges of multiple types. An increasing number of studies apply graph models to NLP tasks. Among them, [9] introduces a heterogeneous document-entity graph containing information at multiple granularities for multi-hop reading comprehension, and [10] proposes a heterogeneous graph-based neural network for extractive summarization with semantic nodes of different granularity levels, including sentences.

In this paper, we design a multi-layer decoder for the NER task. To address interlayer disarrangement, the model groups entities directly by category instead of by nesting depth, and each layer individually recognizes entities of a single category. This method extends the traditional sequence labeling method and eases the problem of nested entities to a certain extent. Meanwhile, this annotation method can recognize multi-label entities, which are overlooked by most models targeting the nested NER task. This nesting scenario was first mentioned in [11] and is very common in some datasets, such as [12]. In addition, to handle nested entities of the same type, we design an extended labeling and decoding scheme that further recognizes nested entities within a single recognition layer. The proposed type-supervised sequence labeling model combines naturally with a heterogeneous graph. For this purpose, we propose a heterogeneous star graph model.
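The idea of grouping entities by category can be sketched as one tag sequence per entity type. This is a minimal illustration under stated assumptions, not the paper's labeling scheme: the plain B/I/O tags, the `encode_by_type` and `decode_by_type` helpers, and the example spans are hypothetical, and the extended scheme for same-type nesting is not reproduced here.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Span = Tuple[int, int, str]  # (start, end_exclusive, label); hypothetical format


def encode_by_type(n_tokens: int, spans: List[Span]) -> Dict[str, List[str]]:
    """Build one BIO tag sequence per entity type."""
    layers: Dict[str, List[str]] = defaultdict(lambda: ["O"] * n_tokens)
    for start, end, label in spans:
        tags = layers[label]
        if any(tag != "O" for tag in tags[start:end]):
            # Same-type nesting would need an extended scheme, not shown here.
            raise ValueError(f"same-type overlap for {label} at ({start}, {end})")
        tags[start] = "B"
        for i in range(start + 1, end):
            tags[i] = "I"
    return dict(layers)


def decode_by_type(layers: Dict[str, List[str]]) -> List[Span]:
    """Recover (start, end_exclusive, label) spans from the per-type layers."""
    spans: List[Span] = []
    for label, tags in layers.items():
        start = None
        for i, tag in enumerate(tags + ["O"]):  # sentinel closes a trailing span
            if start is not None and tag != "I":
                spans.append((start, i, label))
                start = None
            if tag == "B":
                start = i
    return sorted(spans)


# Assumed annotations: nesting across types, and one multi-label entity.
different_type = [(2, 4, "DNA"), (2, 3, "PRO")]
multi_label = [(0, 1, "ABBR"), (0, 1, "DIS")]

roundtrip_nested = decode_by_type(encode_by_type(5, different_type))
roundtrip_multi = decode_by_type(encode_by_type(1, multi_label))
```

Because each type has its own layer, spans of different types never compete for the same tag slot, so both cases survive a round trip. Two overlapping spans of the same type still collide in this simplified version.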

In summary, the contributions of our work are as follows:

• To the best of our knowledge, we are the first to apply a heterogeneous graph to the NER task. The proposed graph network efficiently learns node representations, which can be smoothly incorporated into the type-supervised sequence labeling method. Our model achieves state-of-the-art performance on flat and nested datasets¹.

• We design a stacked star graph topology with type nodes as the centers and text nodes as the planetary nodes. It greatly facilitates the exchange of local and global information and implicitly represents location information. This graph structure also significantly reduces the computational complexity from the $O(n^2)$ of general attention mechanisms to $O(tn)$.

• We propose a graph attention mechanism for specific scenarios in which traditional graph attention mechanisms fail. Its favorable properties allow it to express edge orientation naturally.

• The proposed type-supervised labeling method and the corresponding decoding algorithm can not only recognize the vast majority of nested entities but also cope with cases neglected by most nested entity recognition models.
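The complexity claim for the star topology can be checked with a small edge count. This is a sketch under assumptions: it takes $t$ to be the number of type nodes and $n$ the number of text nodes, connects each type node to every text node, and ignores any other edges the actual model may use. The helper names and the sizes are hypothetical.

```python
from typing import List, Tuple


def star_edges(n_text: int, n_type: int) -> List[Tuple[str, str]]:
    """Connect every type node (center) to every text node (planet)."""
    return [(f"type{t}", f"text{i}") for t in range(n_type) for i in range(n_text)]


def full_attention_pairs(n_text: int) -> int:
    """Number of ordered token pairs scored by dense self-attention."""
    return n_text * n_text


n, t = 128, 5  # hypothetical sentence length and number of entity types
star = len(star_edges(n, t))     # grows as t * n
dense = full_attention_pairs(n)  # grows as n * n
```

With these sizes the star graph has 640 edges against 16384 token pairs for dense attention, and the gap widens as the sentence grows while the number of types stays fixed.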

¹ Access the code at https://github.com/Rosenberg37/GraphNER
