– receivers can verify that a multicast message is properly

delivered by AOM, and they can prove authenticity of the

message to other receivers in the system. We additionally

propose a mixed failure model [45,54] in which the network

infrastructure can be either crash- or Byzantine-faulty. For de-

ployments that trust the network infrastructure, AOM provides

ordering guarantees with minimum network-level overhead.

For systems that need to tolerate Byzantine network devices,

AOM uses a simple round of cross-receiver communication to

handle equivocating sequencers.

We demonstrate the feasibility of our approach by imple-

menting our AOM primitive in off-the-shelf programmable

switches. The switch data plane performs both sequencing and

authentication for AOM messages. While packet sequencing

is relatively straightforward, generating secure authentication

codes – a complex mathematical procedure – is a major chal-

lenge given the switch’s limited resources and computational

constraints. We propose two implementations of in-switch

message authentication, each with trade-offs among switch

resource utilization, performance, and scalability. The first

variant implements message authentication code (HMAC)

vectors in the switch ASICs using SipHash [9] as the hash-

ing algorithm. The second variant generates signatures using

public-key cryptography. Due to hardware constraints, di-

rect in-switch implementation of cryptographic algorithms

such as RSA [52] and ECDSA [35] remains infeasible. We

design a new heterogeneous switch architecture that tightly

couples FPGA-based cryptographic accelerators to the switch

pipelines. Our design enables efficient in-network processing

and signing of AOM messages, scales linearly with the number

of cryptographic accelerators attached, and requires minimum

hardware resources on the switch data plane.

Leveraging the strong properties provided by AOM, we co-

design a new BFT protocol, Matrix. In the common case,

Matrix replicas rely on the ordering guarantees of AOM to

commit client requests in a single round trip, eliminating all

cross replica communication and authentication. Moreover,

Matrix stays in this fast path protocol even in the presence of

(up to

f

) faulty replicas, while requiring the theoretical mini-

mum replication factor (

3f+1

). In the rare case of network

failures, we design efficient protocols to handle packet drops

and faulty switch sequencers while guaranteeing correctness.

By evaluating against state-of-the-art BFT protocols, we show

that Matrix can improve protocol throughput by up to 3.4

×

and end-to-end latency by 42

×

, demonstrating the benefit

of our authenticated in-network ordering approach for BFT

systems.

2 Background

In this section, we give an overview of state-of-the-arts

BFT protocols. We then review recent proposals that use in-

network ordering to accelerate crash fault-tolerant systems.

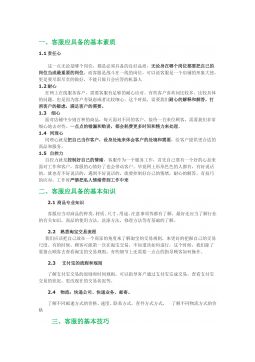

2.1 State-of-the-Art BFT Protocols

There has been a long line of work on BFT state machine

replication (SMR) protocols. Table 1 presents a summary

of the properties and comparison of some recent represen-

tative protocols. PBFT [19] is the first practical BFT proto-

col that tolerates up to

f

Byzantine nodes, requiring at least

3f+1

replicas which has been shown to be theoretical lower

bound [17]. In PBFT, client requests are committed in five

message delays: clients send requests to a primary replica; the

primary replica sequences and forwards the requests to the

backup replicas; backup replicas authenticate the requests and

broadcast their acceptance; after a replica receives quorum

acceptance, it broadcasts a commit decision; replicas execute

the request and reply to the client once they collect quorum

commit decisions. As replicas exchange messages in an all-

to-all fashion, each replica processes

O(N)

messages, making

the authenticator complexity O(N2).

Zyzzyva [38] speculatively executes client requests before

they are committed to reduce the communication overhead.

The protocol includes a fast path with three message delays

when clients receiving matching replies from all replicas, and

otherwise a slow path with at least five message delays. The

primary replica in Zyzzyva still sends signed messages to all

back up replicas (

O(N)

), but with all-to-all communication

removed, the authenticator complexity is reduced to

O(N)

.

Replicas in Zyzzyva may need to rollback speculatively exe-

cuted operations during view changes.

Unlike Zyzzyva which pushes the responsibility of collect-

ing authenticators to the client, SBFT [29] uses round-robin

message collector among all replicas to remove all-to-all com-

munication, similarly reducing authenticator complexity to

O(N)

. SBFT also leverages threshold signatures to reduce

message size, and simultaneously decreases the number of

client replies to one per decision.

Many BFT protocols ( [19,29,38]) use an expensive view

change protocol to handle primary leader failure. The standard

view change protocol used in PBFT requires

O(N3)

message

authenticators, limiting the overall scalability. HotStuff [58]

addresses this issue by adding an extra phase in normal oper-

ation. This design reduces the authenticator complexity of the

leader failure protocol to

O(N)

, matching that of the normal

case protocol. In return, HotStuff adds a one-way network

latency to the request commit delay.

BFT with trusted components.

To reduce protocol com-

plexity, a recent line of work [23,25,41,56,61] introduces

trusted components on each replica. These trusted compo-

nents can be implemented in a Trusted Platform Module

(TPM) [5] or run in a trusted hypervisor, and are assumed to

always behave correctly even if residing on Byzantine nodes.

A2M-PBFT-EA [23] uses an attested append-only memory

(A2M) to securely store operations as entries in a log. Each

A2M log entry is associated with a monotonically increasing,

gap-less sequence number. Once appended, a log entry be-

2

2025-02-28 8

2025-02-28 8

2025-02-28 6

2025-02-28 6

2025-02-28 6

2025-02-28 6

2025-02-28 5

2025-02-28 5

2025-02-28 6

2025-02-28 6

2025-02-28 5

2025-02-28 5

2025-02-28 4

2025-02-28 4

2025-02-28 7

2025-02-28 7

2025-02-28 7

2025-02-28 7

2025-02-28 6

2025-02-28 6

渝公网安备50010702506394

渝公网安备50010702506394