UMAP ’21, June 21–25, 2021, Utrecht, Netherlands Wang, et al.

1 INTRODUCTION

Personalized online learning systems have recently drawn considerable attention because of the growing need to assist and improve students’ learning. A fundamental part of the user modeling task in these systems is estimating students’ knowledge states as they work with learning materials [3]. This task, known as knowledge tracing (KT), is necessary for predicting students’ performance in future assessments, personalizing problems and exercises for students, identifying at-risk students, and providing teachers with a detailed view of overall student progress. In particular, KT models use student attempt sequences, including student performance (e.g., success or failure) on past problems, to estimate student knowledge at the end of a sequence and predict student performance on the next attempts.

To quantify student knowledge, traditional KT models rely on a predefined domain knowledge model that represents the associations between problems and course concepts. Such models trace student knowledge in each of these concepts individually, neglecting potential relationships between different concepts. Moreover, because these models learn the same set of parameters for all students, they are not personalized to individual students. For example, Bayesian knowledge tracing (BKT) [3], one of the pioneering KT models, represents student knowledge states in each concept using a two-state HMM, which imposes a Markovian assumption on knowledge states from one attempt to the next.
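The per-concept BKT update can be illustrated with a short sketch. It assumes the standard four-parameter BKT formulation (prior knowledge, learning/transition, slip, and guess probabilities) and is not code from this paper. The names `BKTParams`, `predict_correct`, `update`, and `trace`, along with the parameter values, are hypothetical and chosen only for illustration. Each observed attempt first conditions the hidden "known/unknown" state on the outcome, then applies a single Markov transition, which is the attempt-to-attempt assumption described above.

```python
from dataclasses import dataclass


@dataclass
class BKTParams:
    """Hypothetical per-concept BKT parameters (illustrative, not fitted)."""
    p_init: float = 0.2     # P(L0): prior probability the concept is already known
    p_transit: float = 0.1  # P(T): probability of learning the concept after an attempt
    p_slip: float = 0.1     # P(S): probability of answering wrong despite knowing
    p_guess: float = 0.2    # P(G): probability of answering right without knowing


def predict_correct(p_know: float, params: BKTParams) -> float:
    """Probability of a correct answer given the current P(known)."""
    return p_know * (1 - params.p_slip) + (1 - p_know) * params.p_guess


def update(p_know: float, correct: bool, params: BKTParams) -> float:
    """Bayes-condition on one attempt, then apply the two-state Markov transition."""
    if correct:
        num = p_know * (1 - params.p_slip)
        den = num + (1 - p_know) * params.p_guess
    else:
        num = p_know * params.p_slip
        den = num + (1 - p_know) * (1 - params.p_guess)
    posterior = num / den
    # Unknown -> known with probability p_transit; standard BKT has no forgetting.
    return posterior + (1 - posterior) * params.p_transit


def trace(attempts: list[bool], params: BKTParams) -> list[float]:
    """Return P(known) before the first attempt and after each attempt."""
    p_know = params.p_init
    history = [p_know]
    for correct in attempts:
        p_know = update(p_know, correct, params)
        history.append(p_know)
    return history


if __name__ == "__main__":
    params = BKTParams()
    for p in trace([False, True, True, True], params):
        print(f"P(known)={p:.3f}  P(next correct)={predict_correct(p, params):.3f}")
```

Because every attempt passes through the same update, BKT implicitly treats each observation as equally informative about the single concept it is tied to.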

In recent years, modern KT models have been developed to address the above problems. For example, many variants of BKT have been proposed to improve the model by considering the possibility of forgetting learned concepts [7], accounting for the dependencies between concepts [8], and personalizing the model parameters for different students [27]. In addition to the Bayesian models, latent factor approaches have been successful in capturing concept relationships [11, 20, 21, 29]. For example, Lan et al. [11] proposed a sparse factor analysis framework for both student knowledge tracing and domain knowledge estimation. Sahebi et al. [20] proposed a tensor factorization method that explicitly models student learning processes by assuming a strictly monotonically increasing learning gain. Zhao et al. [29] leveraged a multi-view tensor factorization method to model student knowledge using multiple learning resource types. Deep learning models, such as DKT [17] and DKVMN [28], have also recently been introduced into the KT domain.

However, most KT models assume that each attempt in a traced sequence is relatively simple and involves the application of only one or a few concepts, such as a small step in solving either a complex or an elementary problem. Under this assumption, observed student performance can be directly associated with a few involved domain concepts, and each correct or incorrect attempt can provide a relatively confident evaluation of the student’s knowledge of those concepts. As a result, when considering these kinds of problems, current KT models assume that every attempt in a student’s history is equally important in quantifying student knowledge. This assumption can be sufficient for domains in which each problem consists of a few atomic concepts. However, it is deficient for domains with more complex problems, such as writing a program or solving an assignment with multiple steps.

In complex problem solving, each problem can involve multiple concepts, such that knowing all of them to some extent is necessary to answer the problem correctly. Because of this complexity, student attempt observations will be noisier, as slipping on even one of the required concepts can significantly harm student performance. Additionally, identifying the concepts responsible for an imperfect performance will be more challenging in such complex problems. Similarly, a complex problem solved correctly by guessing a difficult unknown concept, or by trial and error on that important concept, will be wrongly attributed to the student’s high knowledge of all the involved concepts. As a result, such noisy observations could easily cause traditional KT models to provide inaccurate estimates of overall levels of student knowledge. For example, consider a student who has already mastered some concepts. This student


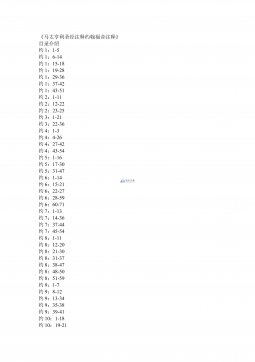
