Optimal Discriminant Analysis in High-Dimensional Latent Factor Models

Xin Bing, Marten Wegkamp

Abstract

In high-dimensional classification problems, a commonly used approach is to first project the high-dimensional features into a lower-dimensional space, and then base the classification on the resulting lower-dimensional projections. In this paper, ...
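The generic "project, then classify" approach mentioned in the abstract can be illustrated with a minimal sketch. This is not the method proposed in the paper (the abstract is truncated here). Purely for illustration, it assumes that the projection is onto the top-k principal components of the centered features, and that the classifier is Fisher's two-class linear discriminant rule fitted on the projected scores. The helper names `pca_lda_fit` and `pca_lda_predict`, the loading matrix `A`, and the synthetic latent-factor data are all hypothetical.

```python
import numpy as np


def pca_lda_fit(X, y, k):
    """Hypothetical helper: project onto the top-k principal components,
    then fit a two-class LDA rule on the k-dimensional scores.

    X: (n, p) features, y: (n,) labels in {0, 1}, k: projection dimension.
    """
    mu = X.mean(axis=0)
    Xc = X - mu
    # Leading right singular vectors of the centered data = principal directions.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    B = Vt[:k].T                      # (p, k) projection matrix
    Z = Xc @ B                        # (n, k) low-dimensional projections
    m0 = Z[y == 0].mean(axis=0)
    m1 = Z[y == 1].mean(axis=0)
    # Pooled within-class covariance of the projected scores.
    R = np.vstack([Z[y == 0] - m0, Z[y == 1] - m1])
    S = R.T @ R / (len(Z) - 2)
    w = np.linalg.solve(S, m1 - m0)   # Fisher discriminant direction in R^k
    pi1 = np.mean(y == 1)
    # Predict class 1 when z @ w exceeds this threshold (LDA rule with priors).
    c = w @ (m0 + m1) / 2 - np.log(pi1 / (1 - pi1))
    return mu, B, w, c


def pca_lda_predict(model, X):
    """Project new features with the fitted B and apply the linear rule."""
    mu, B, w, c = model
    Z = (X - mu) @ B
    return (Z @ w > c).astype(int)


# Usage on synthetic data from a hypothetical latent factor model X = F A + E,
# where only the k latent factors F carry the class signal.
rng = np.random.default_rng(0)
n, p, k = 400, 50, 2
y = rng.integers(0, 2, size=n)
A = rng.normal(size=(k, p))                      # hypothetical loading matrix
F = rng.normal(size=(n, k)) + 3.0 * y[:, None]   # class-shifted latent factors
X = F @ A + 0.5 * rng.normal(size=(n, p))        # observed high-dimensional features

model = pca_lda_fit(X, y, k)
acc = np.mean(pca_lda_predict(model, X) == y)
print(f"training accuracy: {acc:.3f}")
```

PCA is used here only because it is the most familiar unsupervised projection. Any other (p, k) projection matrix could replace the SVD step, and the LDA rule on the projected scores would be fitted the same way.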

相关推荐

-

机器人技术讲稿第八章 机器人编程VIP免费

2024-12-10 12

2024-12-10 12 -

1,PCB专业用语VIP免费

2024-12-10 12

2024-12-10 12 -

7,通信高频开关电源系统VIP免费

2024-12-10 12

2024-12-10 12 -

单片微型计算机原理及接口技术第00章 目录VIP免费

2024-12-10 15

2024-12-10 15 -

交大电气-信号与系统分析《信号与系统》期末-简单卷2000年VIP免费

2024-12-10 13

2024-12-10 13 -

交大电气-信号与系统分析《信号与系统》期末-简单卷2001年VIP免费

2024-12-10 15

2024-12-10 15 -

交大电气-信号与系统分析《信号与系统》期末-简单卷2002年VIP免费

2024-12-10 11

2024-12-10 11 -

文献检索、调研、获取、管理方法医学文献和文献检索概论VIP免费

2024-12-10 18

2024-12-10 18 -

文献检索、调研、获取、管理方法第九章__综合VIP免费

2024-12-10 17

2024-12-10 17 -

传感器原理及工程应用第10章 超声波传感器VIP免费

2024-12-10 12

2024-12-10 12

作者详情

相关内容

-

5.江苏省扬州市扬州中学高三年级物理学科高考模拟试卷

分类:高等教育

时间:2025-01-03

标签:无

格式:DOC

价格:5.9 玖币

-

4.湖南省衡阳市高三年级物理学科高考模拟试卷

分类:高等教育

时间:2025-01-03

标签:无

格式:DOC

价格:5.9 玖币

-

3.河北省沧州市第一中学高三年级物理学科高考模拟试卷

分类:高等教育

时间:2025-01-03

标签:无

格式:DOC

价格:5.9 玖币

-

2.广东省潮州市高三年级物理学科高考模拟试卷

分类:高等教育

时间:2025-01-03

标签:无

格式:DOC

价格:5.9 玖币

-

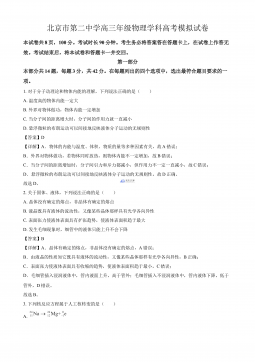

1.北京市第二中学高三年级物理学科高考模拟试卷

分类:高等教育

时间:2025-01-03

标签:无

格式:DOC

价格:5.9 玖币

渝公网安备50010702506394

渝公网安备50010702506394