ACM SIGKDD ’22, August 14–18, 2022, Washington, DC Kamranfar, et al.

computer vision, where videos are naturally structured into bags and require significant single-instance labeling efforts, has benefited tremendously from MIL-based methods. Application of MIL for AD remains largely under-leveraged [4, 22, 38]. Yet, MIL provides an appealing formulation for semi-supervised AD over real-world datasets, and it is the primary contribution of this paper.

In this paper, we propose an MIL-based formulation and various algorithmic instantiations of this framework based on different design decisions for key components of the framework. In particular, we leverage the combination of MIL and the Strangeness based OUtlier Detection (StrOUD) algorithm [8]. StrOUD computes a strangeness/anomaly value for each data point and detects outliers by means of statistical testing and calculation of a p-value. Thus, the framework depends on two primary design decisions: the definition of the strangeness factor and the aggregation function. The degree of outlying/strangeness is needed for recognizing anomalous data points, while the aggregate function is needed to aggregate the measures of strangeness into a single anomaly score of a bag. In this paper, we utilize two alternative scores, the ‘Local Outlier Factor’ and the Autoencoder (AE) reconstruction error. We utilize six aggregate functions (minimum, maximum, average, median, spread, dspread) for the strangeness measure of a bag. We note that we are not limited to these design decisions, and they can be easily generalized to any other choices. To the best of our knowledge, the effort we describe here is the first to truly leverage the power of MIL in AD by hybridizing the concept of bags naturally with AD methods that are routinely used in the field.
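
To make the role of the aggregate function concrete, the following is a minimal sketch of how per-instance strangeness values might be collapsed into one bag-level score. The function name `bag_score`, the `AGGREGATORS` table, and the example numbers are illustrative assumptions, not taken from the paper. Only four of the six aggregators are shown, because spread and dspread are not defined in this section.

```python
from statistics import mean, median

# Aggregators named in the text. spread and dspread are omitted here
# because this section does not define them.
AGGREGATORS = {
    "minimum": min,
    "maximum": max,
    "average": mean,
    "median": median,
}


def bag_score(instance_scores, how="maximum"):
    """Collapse per-instance strangeness values into one bag-level score."""
    if not instance_scores:
        raise ValueError("a bag must contain at least one instance")
    return AGGREGATORS[how](instance_scores)


# Hypothetical bag: strangeness values of five instances (made-up numbers).
bag = [0.9, 1.1, 1.0, 4.2, 1.0]
scores = {name: bag_score(bag, name) for name in AGGREGATORS}
```

In this toy bag, a single strange instance dominates the maximum but barely moves the median, which illustrates why the choice of aggregator is treated as a design decision.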

We evaluate various algorithmic instantiations of the MIL framework over four datasets that capture different physical processes along different modalities (video and vibration signals). The results show that the MIL-based formulation performs no worse than single-instance learning on easy to moderate datasets and outperforms single-instance learning on more challenging datasets. Altogether, the results show that the framework generalizes well over diverse benchmark datasets resulting from different real-world application domains.

The rest of the paper is organized as follows. Section 2 reviews prior work in AD. The proposed methodology is described in Section 3, and the experimental evaluation is presented in Section 4. Section 5 concludes the paper.

2 PRIOR WORK

Extensive studies have been performed on AD in many application domains, from fraud detection in credit cards, to structural health monitoring in engineering, to bioinformatics in molecular biology [3, 27, 43]. Different types of data have been considered, from sequential time series data [9, 13], to image data, to molecular structure data [3, 16, 40].

Many methods have been developed for AD, ranging from traditional density-based methods [25, 35] to more recent AE-based ones [7, 15]. Density-based methods assign an anomaly score to a single data point/instance by comparing the local neighborhood of a point to the local neighborhoods of its 𝑘 nearest neighbors. Higher scores are indicative of anomalous instances.
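
The neighborhood comparison described above can be sketched with a simplified Local Outlier Factor style score. This is an illustrative standard-library sketch, not the implementation used in the paper. It assumes Euclidean distance and no duplicate points, and it breaks distance ties by index order rather than keeping all tied neighbors. The names `knn`, `lof_scores`, and the toy points are hypothetical.

```python
import math


def knn(points, i, k):
    """Indices of the k nearest neighbors of points[i], excluding i itself."""
    others = (j for j in range(len(points)) if j != i)
    return sorted(others, key=lambda j: math.dist(points[i], points[j]))[:k]


def lof_scores(points, k=2):
    """Simplified LOF: ratio of the neighbors' local density to a point's own."""
    n = len(points)
    neigh = [knn(points, i, k) for i in range(n)]
    # k-distance: distance from each point to its k-th nearest neighbor.
    kdist = [math.dist(points[i], points[neigh[i][-1]]) for i in range(n)]

    def reach(i, j):
        # Reachability distance of point i with respect to neighbor j.
        return max(kdist[j], math.dist(points[i], points[j]))

    # Local reachability density: inverse of the mean reachability distance.
    lrd = [k / sum(reach(i, j) for j in neigh[i]) for i in range(n)]
    # Score: mean density of the neighbors divided by the point's own density.
    return [sum(lrd[j] for j in neigh[i]) / (k * lrd[i]) for i in range(n)]


# Toy data (made up): four points on a unit square and one far-away point.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5)]
scores = lof_scores(points, k=2)
```

Points inside the square have neighbors of similar density and score close to 1, while the isolated point receives a clearly larger score.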

The Local Outlier Factor (LOF) and its variants are density-based anomaly scores that are utilized extensively in the AD literature [1, 24]. For instance, work in [35] introduced an LOF variant score called ‘Connectivity based Outlier Factor’ (COF), which differs from LOF in the way that the neighborhood of an instance is computed. The ‘Outlier Detection using In-degree Number’ (ODIN) score was presented in [24]. ODIN measures the number of the 𝑘 nearest neighbors of a data point that also have that data point in their neighborhood. The inverse of ODIN is defined as the anomaly score.
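
As a rough illustration of the in-degree idea behind ODIN, the sketch below counts how many points list a given point among their 𝑘 nearest neighbors and inverts that count. It follows the in-degree reading suggested by the name of the score; restricting the count to a point's own neighbors, as the wording above suggests, would be a small variation. The `1 + indegree` term in the denominator is an assumption added here to keep the score finite when the in-degree is zero. All names and data are hypothetical.

```python
import math


def knn_indices(points, i, k):
    """Indices of the k nearest neighbors of points[i], excluding i itself."""
    others = [j for j in range(len(points)) if j != i]
    others.sort(key=lambda j: math.dist(points[i], points[j]))
    return others[:k]


def odin_scores(points, k=2):
    """Return (in-degrees in the directed k-NN graph, inverted scores)."""
    indegree = [0] * len(points)
    for i in range(len(points)):
        for j in knn_indices(points, i, k):
            indegree[j] += 1  # point i lists point j as a neighbor
    # Assumption: add 1 before inverting so an in-degree of 0 stays finite.
    return indegree, [1.0 / (1 + d) for d in indegree]


# Toy data (made up): four points on a unit square and one far-away point.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5)]
indegree, scores = odin_scores(points, k=2)
```

No other point selects the isolated point as a neighbor, so its in-degree is zero and its inverted score is the largest.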

There are other methods, known as deviation-based methods, that also utilize anomaly scores. These methods attempt to find a lower-dimensional space of normal data by capturing the correlation among the features. The data are projected onto a latent, lower-dimensional subspace, and unseen test data points with large reconstruction errors are determined to be anomalies. As these methods only encounter normal data in the training phase that seeks to learn the latent space, they are known as semi-supervised methods.
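
The projection-and-reconstruction idea can be sketched for the simplest linear case: a one-dimensional latent subspace fitted to normal data only. The sketch below uses power iteration to find the leading principal direction, which is a stand-in chosen for brevity and is not claimed to be the method of any cited work. The function names and data are hypothetical.

```python
import math


def fit_direction(train, iters=200):
    """Mean and leading principal direction of normal training data."""
    dim = len(train[0])
    mu = [sum(x[d] for x in train) / len(train) for d in range(dim)]
    centred = [[x[d] - mu[d] for d in range(dim)] for x in train]
    # Assumption: this start vector is not orthogonal to the leading direction.
    v = [1.0] * dim
    for _ in range(iters):
        # Multiply v by the unnormalized covariance matrix: sum_x (x . v) x
        w = [0.0] * dim
        for x in centred:
            dot = sum(a * b for a, b in zip(x, v))
            for d in range(dim):
                w[d] += dot * x[d]
        norm = math.sqrt(sum(a * a for a in w))
        v = [a / norm for a in w]
    return mu, v


def reconstruction_error(x, mu, v):
    """Distance between x and its projection onto the 1-D latent line."""
    c = [a - m for a, m in zip(x, mu)]
    t = sum(a * b for a, b in zip(c, v))  # latent coordinate
    return math.sqrt(sum((a - t * b) ** 2 for a, b in zip(c, v)))


# Hypothetical normal data lying near the line y = 2x (made-up numbers).
train = [(0.0, 0.1), (1.0, 1.9), (2.0, 4.1), (3.0, 5.9), (4.0, 8.1)]
mu, v = fit_direction(train)
err_normal = reconstruction_error((2.5, 5.0), mu, v)   # follows the pattern
err_anomaly = reconstruction_error((2.5, 0.0), mu, v)  # breaks the pattern
```

A test point that respects the learned feature correlation reconstructs almost perfectly, whereas one that violates it has a large reconstruction error.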

PCA- and AE-based methods are in the category of AD methods that seek to capture linear and non-linear feature correlations, respectively [15, 32]. Due to the capability of AEs in finding more complex, non-linear correlations, AE-based AD methods tend to perform better than PCA-based ones, generating fewer false anomalies.

In [15], conventional and convolutional AE-based (CAE) methods are presented and compared with PCA-based methods. Work in [5] proposes a variational AE-based (VAE) method which takes advantage of the probabilistic nature of VAE. The method leverages the reconstruction probability instead of the reconstruction error as the anomaly score. AE-based methods, however, require setting a threshold for how large the reconstruction error or reconstruction probability has to be for an instance to be predicted as anomalous.

Generally, most AD methods require careful setting of many parameters, including the anomaly threshold, which is an ad-hoc process. This hyperparameter regulates sensitivity to anomalies and the false alarm rate (rate of normal instances detected as outliers/anomalies), and thus largely determines AD performance. To maintain a low false alarm rate, conformal AD (CAD) methods build on the conformal prediction (CFD) concept [28].
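
The trade-off governed by the anomaly threshold can be illustrated with a small sweep. The sketch below assumes that higher scores mean more anomalous and that an instance is flagged when its score exceeds the threshold. The score lists and function names are made up for illustration.

```python
def false_alarm_rate(normal_scores, threshold):
    """Share of normal instances whose score exceeds the threshold."""
    flagged = sum(1 for s in normal_scores if s > threshold)
    return flagged / len(normal_scores)


def detection_rate(anomaly_scores, threshold):
    """Share of true anomalies whose score exceeds the threshold."""
    flagged = sum(1 for s in anomaly_scores if s > threshold)
    return flagged / len(anomaly_scores)


# Hypothetical anomaly scores (made-up numbers).
normal = [0.2, 0.4, 0.5, 0.7, 0.9, 1.1, 1.3, 1.6]
anomalous = [1.2, 1.8, 2.5, 3.0]

# Map each threshold to (false alarm rate, detection rate).
sweep = {
    t: (false_alarm_rate(normal, t), detection_rate(anomalous, t))
    for t in (0.6, 1.0, 1.5, 2.0)
}
```

Raising the threshold lowers the false alarm rate but eventually misses true anomalies, which is why picking it by hand is delicate.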

The underlying idea in CFD methods is to predict potential labels for each test data point by means of the p-value (one p-value per possible label). The non-conformity measure is utilized as an anomaly score, and p-values are calculated. To this end, the significance level needs to be determined in order to retain or reject the null hypothesis [29].

In their utilization of significance testing to avoid overfitting, CFD methods are highly similar to the classic Strangeness based OUtlier Detection (StrOUD) method [8]. StrOUD was proposed as an AD method that combines the ideas of transduction and hypothesis testing. It eliminates the need for ad-hoc anomaly thresholds and can additionally be used for dataset cleaning.
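
The hypothesis-testing step shared by CFD methods and StrOUD can be sketched as follows. This section does not spell out the p-value formula, so the sketch assumes the common conformal definition: the fraction of baseline (normal) strangeness values at least as large as the test value, with a +1 correction that counts the test point itself. The names `p_value`, `is_anomaly`, and the baseline data are hypothetical.

```python
def p_value(test_score, baseline_scores):
    """Assumed conformal p-value: share of scores >= test_score, incl. itself."""
    n_ge = sum(1 for s in baseline_scores if s >= test_score)
    return (n_ge + 1) / (len(baseline_scores) + 1)


def is_anomaly(test_score, baseline_scores, significance=0.05):
    """Reject the null hypothesis (normal) when the p-value is too small."""
    return p_value(test_score, baseline_scores) < significance


# Hypothetical baseline: strangeness values of 39 normal instances.
baseline = [i / 10 for i in range(1, 40)]  # 0.1, 0.2, ..., 3.9

p_typical = p_value(2.0, baseline)  # many baseline values are at least 2.0
p_extreme = p_value(5.0, baseline)  # no baseline value reaches 5.0
```

Under this assumption the only quantity the user sets is the significance level, which directly bounds the expected false alarm rate instead of requiring a score-specific threshold.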

Apart from determination of the anomaly score or the AD category, there are many fundamental challenges in AD, not the least of which is finding appropriate training instances. It is generally easier to obtain instances of the normal behavior of a system than instances of anomalies, especially in real-world settings (e.g., large engineering infrastructures or industrial systems) [37], where anomalous physical processes that generate anomalous data are rare events.

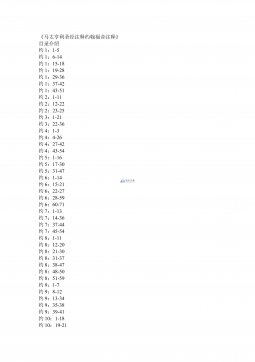
