
MM-Align: Learning Optimal Transport-based Alignment Dynamics for Fast and Accurate Inference on Missing Modality Sequences

Wei Han, Hui Chen, Min-Yen Kan♣, Soujanya Poria

DeCLaRe Lab, Singapore University of Technology and Design, Singapore

♣National University of Singapore, Singapore

{wei_han,hui_chen}@mymail.sutd.edu.sg

kanmy@comp.nus.edu.sg, sporia@sutd.edu.sg

Abstract

Existing multimodal tasks mostly target the complete input modality setting, i.e., each modality is either complete or completely missing in both training and test sets. However, situations in which modalities are randomly missing remain underexplored. In this paper, we present a novel approach named MM-Align to address the missing-modality inference problem. Concretely, we propose 1) an alignment dynamics learning module based on the theory of optimal transport (OT) for indirect missing data imputation; 2) a denoising training algorithm to simultaneously enhance the imputation results and backbone network performance. Compared with previous methods, which are devoted to reconstructing the missing inputs, MM-Align learns to capture and imitate the alignment dynamics between modality sequences. Results of comprehensive experiments on three datasets covering two multimodal tasks empirically demonstrate that our method can perform more accurate and faster inference and relieve overfitting under various missing conditions. Our code is available at https://github.com/declare-lab/MM-Align.

1 Introduction

The topic of multimodal learning has grown un-

precedentedly prevalent in recent years (Ramachan-

dram and Taylor,2017;Baltrušaitis et al.,2018),

ranging from a variety of machine learning tasks

such as computer vision (Zhu et al.,2017;Nam

et al.,2017), natural langauge processing (Fei

et al.,2021;Ilharco et al.,2021), autonomous driv-

ing (Caesar et al.,2020) and medical care (Nascita

et al.,2021), etc. Despite the promising achieve-

ments in these fields, most of existent approaches

assume a complete input modality setting of train-

ing data, in which every modality is either complete

or completely missing (at inference time) in both

training and test sets (Pham et al.,2019;Tang et al.,

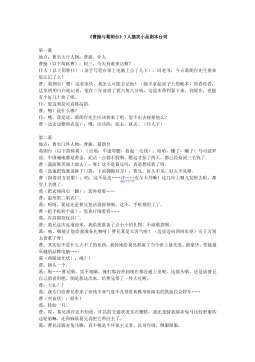

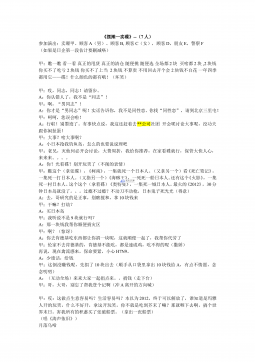

2021;Zhao et al.,2021), as shown in Fig. 1a and 1b.

Such synergies between train and test sets in the

modality input patterns are usually far from the

realistic scenario where there is a certain portion

of data without parallel modality sequences, prob-

ably due to noise pollution during collecting and

preprocessing time. In other words, data from each

modality are more probable to be missing at ran-

dom (Fig.1c and 1d) than completely present or

missing (Fig.1a and 1b) (Pham et al.,2019;Tang

et al.,2021;Zhao et al.,2021). Based on the com-

plete input modality setting, a family of popular

routines regarding the missing-modality inference

is to design intricate generative modules attached

to the main network and train the model under full

supervision with complete modality data. By mini-

mizing a customized reconstruction loss, the data

restoration (a.k.a. missing data imputation (Van Bu-

uren,2018)) capability of the generative modules

is enhanced (Pham et al.,2019;Wang et al.,2020;

Tang et al.,2021) so that the model can be tested

in the missing situations (Fig. 1b). However, we

notice that (i) if modality-complete data in the train-

ing set is scarce, a severe overfitting issue may

occur, especially when the generative model is

large (Robb et al.,2020;Schick and Schütze,2021;

Ojha et al.,2021); (ii) global attention-based (i.e.,

attention over the whole sequence) imputation may

bring unexpected noise since true correspondence

mainly exists between temporally adjacent parallel

signals (Sakoe and Chiba,1978). Ma et al. (2021)

proposed to leverage unit-length sequential repre-

sentation to represent the missing modality from

the seen complete modality from the input for train-

ing. Nevertheless, such kinds of methods inevitably

overlook the temporal correlation between modal-

ity sequences and only acquire fair performance on

the downstream tasks.

arXiv:2210.12798v1 [cs.CL] 23 Oct 2022

Figure 1: Input patterns of different modality inference problems. Here the visual modality is the victim modality that may be missing randomly. (a) Both modalities are complete in the train and test sets; (b) both modalities are complete in the train set but the victim modality is completely missing in the test set; (c) the victim modality is missing randomly in the train set but completely missing in the test set; (d) modalities are missing with the same probability in the train and test sets.

To mitigate these issues, in this paper we present MM-Align, a novel framework for fast and effective multimodal learning on randomly missing multimodal sequences. The core idea behind the framework is to imitate some indirect but informative clues for the paired modality sequences instead of learning to restore the missing modality directly. The framework consists of three essential functional units: 1) a backbone network that handles the main task; 2) an alignment matrix solver based on the optimal transport algorithm, which produces context-window style solutions in which only part of the values are non-zero, and an associated meta-learner that imitates the dynamics and performs imputation in the modality-invariant hidden spaces; 3) a denoising training algorithm that optimizes and coalesces the backbone network and the learner so that they can work robustly on the main task in missing-modality scenarios. To empirically study the advantages of our models over current imputation approaches, we test on two settings of the random missing conditions, as shown in Fig. 1c and Fig. 1d, for all possible modality pair combinations. To the best of our knowledge, this is the first work that applies optimal transport and denoising training to the problem of inference on missing modality sequences. In a nutshell, the contribution of this work is threefold:

• We propose a novel framework to facilitate the missing modality sequence inference task, where we devise an alignment dynamics learning module based on the theory of optimal transport and a denoising training algorithm to coalesce it into the main network.

• We design a loss function that enables a context-window style solution for the dynamics solver.

• We conduct comprehensive experiments on three publicly available datasets from two multimodal tasks. Results and analysis show that our method leads to a faster and more accurate inference of missing modalities.

2 Related Work

2.1 Multimodal Learning

Multimodal learning has attracted widespread attention as it offers a more comprehensive view of the world for the task that researchers intend to model (Atrey et al., 2010; Lahat et al., 2015; Sharma and Giannakos, 2020). The most fundamental technique in multimodal learning is multimodal fusion (Atrey et al., 2010), which attempts to extract and integrate task-related information from the input modalities into a condensed representative feature vector. Conventional multimodal fusion methods encompass cross-modality attention (Tsai et al., 2018, 2019; Han et al., 2021a), matrix algebra based methods (Zadeh et al., 2017; Liu et al., 2018; Liang et al., 2019) and invariant space regularization (Colombo et al., 2021; Han et al., 2021b). While most of these methods focus on complete modality input, many also take into account the missing modality inference situations (Pham et al., 2019; Wang et al., 2020; Ma et al., 2021), usually by incorporating a generative network that imputes the missing representations by minimizing a reconstruction loss. However, the formulation under missing patterns remains underexplored, and that is what we address in this paper.

2.2 Meta Learning

Meta-learning, or learning to learn, is an active research topic that focuses on how to generalize the learning approach from a limited number of visible tasks to broader task types. Early efforts to tackle this problem are based on comparison, such as relation networks (Sung et al., 2018) and prototype-based methods (Snell et al., 2017; Qi et al., 2018; Lifchitz et al., 2019). Other works reformulate this problem as transfer learning (Sun et al., 2019) and multi-task learning (Pentina et al., 2015; Tian et al., 2020), which seek an effective transformation from previous knowledge that can be adapted to new unseen data, and further fine-tune the model on handcrafted hard tasks. In our framework, we treat the alignment matrices as the training target for the meta-learner. Combined with a self-adaptive denoising training algorithm, the meta-learner can significantly enhance the accuracy of predictions in the missing modality inference problem.

3 Method

3.1 Problem Definition

We are given a multimodal dataset $\mathcal{D} = \{\mathcal{D}^{train}, \mathcal{D}^{val}, \mathcal{D}^{test}\}$, where $\mathcal{D}^{train}$, $\mathcal{D}^{val}$ and $\mathcal{D}^{test}$ are the training, validation and test sets, respectively. In the training set $\mathcal{D}^{train} = \{(x_i^{m_1}, x_i^{m_2}, y_i)\}_{i=1}^{n}$, where $x_i^{m_k} = \{x_{i,1}^{m_k}, \ldots, x_{i,t}^{m_k}\}$ are input modality sequences and $m_1, m_2$ denote the two modality types, some modality inputs are missing with probability $p_0$. Following Ma et al. (2021), we assume that modality $m_1$ is complete and the random missing only happens on modality $m_2$, which we call the victim modality. Consequently, we can divide the training set into the complete and missing splits, denoted as $\mathcal{D}_c^{train} = \{(x_i^{m_1}, x_i^{m_2}, y_i)\}_{i=1}^{n_c}$ and $\mathcal{D}_m^{train} = \{(x_i^{m_1}, y_i)\}_{i=n_c+1}^{n}$, where $|\mathcal{D}_m^{train}| / |\mathcal{D}^{train}| = p_0$. For the validation and test sets, we consider two settings: a) the victim modality is missing completely (Fig. 1c), denoted as "Setting A" in the experiment section; b) the victim modality is missing with the same probability $p_0$ (Fig. 1d), denoted as "Setting B", in line with Ma et al. (2021). We consider two multimodal tasks: sentiment analysis and emotion recognition, in which the label $y_i$ represents the sentiment value (polarity as positive/negative and value as strength) and emotion category, respectively.
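As a minimal sketch of the data setup described above, the following Python snippet builds the complete and missing training splits and masks an evaluation set under Setting A or Setting B. The function names `split_training_set` and `mask_eval_set`, the `seed` argument, the toy data, and the choice to drop exactly `round(p0 * n)` samples (rather than sampling each one independently) are illustrative assumptions, not part of the released code.

```python
import random


def split_training_set(samples, p0, seed=0):
    """Split (x_m1, x_m2, y) triples into complete and missing splits.

    Assumption: exactly round(p0 * n) samples lose the victim modality m2,
    so that |D_m| / |D| is as close to p0 as the dataset size allows.
    """
    rng = random.Random(seed)
    n = len(samples)
    missing_ids = set(rng.sample(range(n), round(p0 * n)))
    complete = [s for i, s in enumerate(samples) if i not in missing_ids]
    missing = [(x1, y) for i, (x1, _x2, y) in enumerate(samples)
               if i in missing_ids]
    return complete, missing


def mask_eval_set(samples, setting, p0, seed=0):
    """Mask the victim modality m2 (set to None) in a validation/test set.

    Setting A: m2 is missing for every sample.
    Setting B: m2 is missing for a fraction p0 of the samples.
    """
    if setting == "A":
        return [(x1, None, y) for x1, _x2, y in samples]
    if setting == "B":
        rng = random.Random(seed)
        n = len(samples)
        missing_ids = set(rng.sample(range(n), round(p0 * n)))
        return [(x1, None if i in missing_ids else x2, y)
                for i, (x1, x2, y) in enumerate(samples)]
    raise ValueError("setting must be 'A' or 'B'")


# Toy data: ten samples, each with two length-3 sequences and a binary label.
samples = [([float(i)] * 3, [float(-i)] * 3, i % 2) for i in range(10)]
d_complete, d_missing = split_training_set(samples, p0=0.3)
eval_a = mask_eval_set(samples, "A", p0=0.3)
eval_b = mask_eval_set(samples, "B", p0=0.3)
print(len(d_complete), len(d_missing))
```

Fixing the number of dropped samples keeps the realized missing rate equal to the ratio in the definition; independent per-sample sampling would match it only in expectation.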

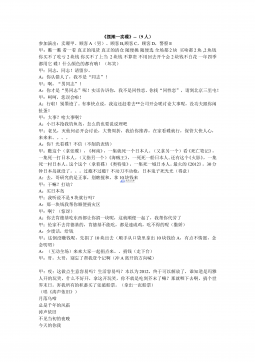

[Figure 2 diagram. Recoverable component labels: two Transformer Encoders, Alignment Dynamics Solver, Alignment Dynamics Fitter, Cross-modality Transformer Fusion Layers, Output Layer, and three loss terms.]

Figure 2: Overall architecture of our framework. Solid lines are the forward paths when training on the modality-complete split and dashed lines are the forward paths when training and testing on the split with missing modality.

3.2 Overview

Our framework encompasses a backbone network (green), an alignment dynamics learner (ADL, blue), and a denoising training algorithm that optimizes both the learner and the backbone network concurrently. We highlight the ADL, which serves as the core functional unit in the framework. Motivated by the idea of meta-learning, we seek to generate substitute representations for the missing modality through an indirect imputation clue, i.e., alignment matrices, instead of learning to restore the missing modality by minimizing reconstruction losses. To this end, the ADL incorporates an alignment matrix solver based on the theory of optimal transport (Villani, 2009), a non-parametric method to capture alignment dynamics between time series (Peyré et al., 2019; Chi et al., 2021), as well as an auxiliary neural network to fit and generate meaningful representations, as illustrated in §3.4.
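To make the notion of an alignment matrix concrete, the sketch below computes a transport plan between two short feature sequences with the standard Sinkhorn iteration for entropy-regularized optimal transport. This is a generic textbook solver used here only for illustration; it is not the solver of this paper, whose formulation and context-window loss are deferred to §3.4. The names `cost_matrix`, `sinkhorn`, `reg` and `n_iters`, the squared Euclidean cost, and the uniform marginals are all assumptions.

```python
import math


def cost_matrix(seq_a, seq_b):
    """Squared Euclidean distance between every pair of time steps."""
    return [[sum((u - v) ** 2 for u, v in zip(a, b)) for b in seq_b]
            for a in seq_a]


def sinkhorn(cost, reg=0.5, n_iters=200):
    """Entropy-regularized OT with uniform marginals (assumed).

    Returns a plan P with rows summing to 1/n and columns to 1/m;
    P[i][j] is the mass moved from step i of one sequence to step j
    of the other, i.e. a soft alignment between the two sequences.
    """
    n, m = len(cost), len(cost[0])
    a = [1.0 / n] * n
    b = [1.0 / m] * m
    # Gibbs kernel: low cost gives a large kernel value.
    kernel = [[math.exp(-c / reg) for c in row] for row in cost]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(kernel[i][j] * v[j] for j in range(m))
             for i in range(n)]
        v = [b[j] / sum(kernel[i][j] * u[i] for i in range(n))
             for j in range(m)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)]
            for i in range(n)]


# Two toy one-dimensional sequences that are nearly time-aligned.
seq_a = [[0.0], [1.0], [2.0]]
seq_b = [[0.1], [1.1], [2.1]]
plan = sinkhorn(cost_matrix(seq_a, seq_b))
for row in plan:
    print([round(p, 3) for p in row])
```

For sequences that are nearly time-aligned, as in this toy input, most of the mass lands on the diagonal of the plan, which is the kind of local correspondence that an alignment matrix is meant to expose.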

3.3 Architecture

Backbone Network. The overall architecture of our framework is depicted in Fig. 2. We adopt MulT (Tsai et al., 2019), a fusion network derived from Transformer (Vaswani et al., 2017), as the backbone structure since a number of its variants in preceding works achieve promising results in multimodal tasks (Wang et al., 2020; Han et al., 2021a; Tang et al., 2021). MulT has two essen-
