FedMT: Federated Learning with Mixed-type Labels

Qiong Zhang

Institute of Statistics and Big Data

Renmin University of China

Jing Peng∗

Institute of Statistics and Big Data

Renmin University of China

Xin Zhang∗

MetaAI

Aline Talhouk

Department of Obstetrics & Gynecology

The University of British Columbia

Gang Niu

RIKEN

Xiaoxiao Li†

Department of Electrical and Computer Engineering

The University of British Columbia

February 16, 2024

Abstract

In federated learning (FL), classifiers (e.g., deep networks) are trained on datasets from multiple

data centers without exchanging data across them, which improves the sample efficiency. However, the

conventional FL setting assumes the same labeling criterion in all data centers involved, thus limiting its

practical utility. This limitation becomes particularly notable in domains like disease diagnosis, where

different clinical centers may adhere to different standards, making traditional FL methods unsuitable. This

paper addresses this important yet under-explored setting of FL, namely FL with mixed-type labels, where

the allowance of different labeling criteria introduces inter-center label space differences. To address this

challenge effectively and efficiently, we introduce a model-agnostic approach called FedMT, which estimates

label space correspondences and projects classification scores to construct loss functions. The proposed

FedMT is versatile and integrates seamlessly with various FL methods, such as FedAvg. Experimental

results on benchmark and medical datasets highlight the substantial improvement in classification accuracy

achieved by FedMT in the presence of mixed-type labels.

1 Introduction

Federated learning (FL) allows centers to collaboratively train a model while maintaining data locally,

avoiding the data centralization constraints imposed by regulations such as the California Consumer Privacy

Act (Legislature, 2018), Health Insurance Portability and Accountability Act (Act, 1996), and the General Data

Protection Regulation (Voigt et al., 2018). This approach has gained popularity across various applications.

Well-established FL methods, such as FedAvg (McMahan et al., 2017), employ iterative optimization algorithms

for joint model training across centers. In each round, individual centers perform stochastic gradient descent

(SGD) for several steps before communicating their current model weights to a central server for aggregation.

In the conventional FL classification framework, a classifier is trained jointly, assuming the same labeling

criterion across all participating centers. However, in real applications such as healthcare, the standards for

disease diagnosis may be different across clinical centers due to the varying levels of expertise or technology

available at different centers. Different centers may adhere to distinct diagnostic and statistical manuals (Epstein

and Loren, 2013; McKeown et al., 2015), making it difficult to enforce a unified labeling criterion or

perform relabeling, especially when labeling is based on studies that cannot be replicated. Consequently, this

results in disparate label spaces across centers. Furthermore, the center with the most intricate labeling

criterion, which is crucial for future predictions and is thus referred to as the desired label space, typically has a limited number of

samples due to labeling complexity or associated costs. We consider this practical but underexplored scenario

as learning from mixed-type labels and aim to answer the following important question:

∗contributed equally

†Corresponding to: Xiaoxiao Li (xiaoxiao.li@ece.ubc.ca).

arXiv:2210.02042v4 [cs.LG] 15 Feb 2024

In the context of labeling criteria varying across centers, how can we effectively incorporate the

commonly used FL pipeline (e.g., FedAvg) to jointly learn an FL model in the desired label space?

100

010

010

001

0.6 00.4

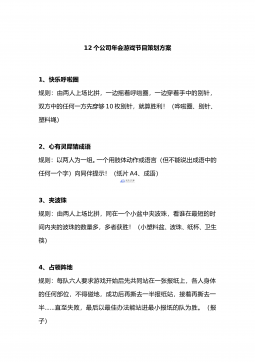

(a) Different label spaces (b) Our proposed FedMT

!"!

"*

Class overlap

{%!,'

%!}&and&{%",'

%!}

!

!

"= FedAvg(!

#!

")

'#$(", #

")

!$

!#

!

")

!

"!

!!

'#$(!

", 2#

#!)'#$(!

", 2#

##)

FedMT(T)

Desired label space

The other label space

Known correspondence matrix .

Same backbone

Client update

Estimate .

#

'

Predict

Client True label

Pseudo label

Count

!

"!!

""!

"#

"!3 0 0

""0 2 0

"#0 4 0

"$0 0 4

"%3 0 2

Normalize

+

,!

=

FedMT(E) : plug-in

FedAvg

.

"

#

Update order

Same backbone

Client update

Same backbone

Server update

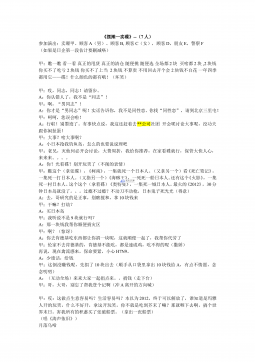

Figure 1: Illustration of the problem setting and our proposed FedMT method. (a) We consider different label spaces (i.e., the desired label space $\mathcal{Y}$ with $K$ classes and the other space $\widetilde{\mathcal{Y}}$ with $J$ classes) where classes may overlap, such as $\widetilde{Y}_1$ and $Y_2$. Annotation within the desired label space is typically more challenging and resource-intensive, resulting in a scarcity of labeled samples. (b) We employ a fixed label space correspondence matrix $M$ to establish associations between label spaces, effectively linking $\widetilde{\mathcal{Y}}$ and $\mathcal{Y}$. Our method, denoted as FedMT (T), locally corrects class scores $f$ using $M$ within the FedAvg framework. In instances where the correspondence matrix $M$ is unknown, we propose a pseudo-label based method to obtain an estimate $\widehat{M}$. Subsequently, FedMT (E) incorporates $\widehat{M}$ into the loss function to correct class scores.

Problem setting: To address the question, we consider a simplified but generalizable setting, illustrated in

Fig. 1, in which two types of labeling criteria exist in FL. The label spaces are not necessarily nested; that is, it is

possible for a class in one label space to overlap with multiple classes in another space (e.g., disease diagnoses

often exhibit imperfect agreement). Additionally, drawing inspiration from a healthcare scenario, we assume a

limited availability of labeled data ($< 5\%$) within the desired label space. One supercenter adheres to the

complex labeling criterion according to the desired label space, while others use another distinct simpler

labeling criterion. The supercenter serves as the coordinating server for FL but also engages in local model

updating like other clients. All centers jointly train an FL model following the standard FL training protocol,

as shown in Fig. 1 (b).

Under the problem setting described above, alternative approaches to handling different label spaces

include personalized FL (Collins et al., 2021). However, these methods often neglect to exploit the inherent

correspondence between different label spaces. Transfer learning (Yang et al., 2019), which

involves pre-training a model in one space and fine-tuning it in other spaces, is another possible solution in

FL, but suboptimal pre-training may lead to negative transfer (Chen et al., 2019). Other centralized strategies

that require the pooling of all data features for similarity comparison through complex training strategies (Hu

et al., 2022) increase privacy risk and are impractical for widely used FL methods like FedAvg. In light of the

limitations associated with these methods, we aim to address two key challenges: a) simultaneously leveraging

different types of labels and their correspondences without additional feature exchange, and b) learning the

FL model end-to-end.

In this work, we introduce a plug-and-play method called FedMT. This versatile strategy seamlessly

integrates with various FL pipelines, such as FedAvg. Specifically, we use models with identical architectures,

with the output dimension matching the number of classes in the desired label space across all centers. To

utilize client data from other label spaces for supervision, we employ a probability projection to align the two

spaces by mapping class scores.

Contributions: Our contributions are multifaceted. First, we explore a novel and underexplored problem setting, FL under mixed-type labels, which is particularly significant in real-world applications, notably in medical care. Second, we propose FedMT, a computationally efficient and versatile FL method. Third, we provide a theoretical analysis of the generalization error of learning from mixed-type labels with a projection matrix. Lastly, our approach shows better performance in predicting in the desired label space compared to other methods, as demonstrated by extensive experiments on benchmark datasets in different settings and on real medical data. Additionally, we are able to predict in the other space as a byproduct, and we observe improved classification compared with other feasible baseline methods.

2 Related Work

Federated learning (FL) FL is emerging as a learning paradigm for distributed clients that bypasses

data sharing to train local models collaboratively. To aggregate the model parameters, FedAvg (McMahan

et al., 2017) is the most widely used approach in FL. FedAvg variants have been proposed to improve

optimization (Reddi et al., 2020; Rothchild et al., 2020) and for non-iid data (Li et al., 2021, 2020; Karimireddy

et al., 2020). Semi-supervised FL (Jeong et al., 2021; Bdair et al., 2021; Diao et al., 2022) considers scenarios

where client samples are unlabeled. In contrast, our client data contains labeled samples but from different

label spaces. Additionally, FL with samples from a single class on each client (Yu et al., 2020) differs from

our setting, as all samples are labeled using the same criterion in theirs.

Learning with labels of varying granularity In the centralized setting, learning with labels of different granularity often involves the coarse-to-fine (C2F) label learning scenario, which aims to learn fine-grained labels given a set of coarse-grained labels. Notably, approaches such as Chen et al. (2021a); Touvron et al. (2021); Taherkhani et al. (2019); Ristin et al. (2015) rely heavily on the hierarchical assumption of coarse and fine labels, assuming knowledge of the hierarchical structure. In contrast, our proposed method neither assumes that the two sets of labels are hierarchically structured nor presumes knowledge of such a structure. Moreover, centralized C2F methods, including Touvron et al. (2021); Hu et al. (2022), typically necessitate access to and communication of features from both types of labels (and consequently different clients) to calculate their losses. In our problem setting, each client possesses only one type of label. Extending these centralized C2F methods to the FL setting would incur additional communication costs and increase privacy risk.

3 Methods

In this section, we first overview the classical FL approach, then present the mathematical formulation of our

problem and the proposed method.

3.1 Problem Formulation

We address a classification problem in which different labeling criteria are used at various data centers. For the sake of clarity, we consider two labeling criteria, denoted as $\mathcal{Y} = \{Y_k\}_{k=1}^{K}$ and $\widetilde{\mathcal{Y}} = \{\widetilde{Y}_j\}_{j=1}^{J}$ with $K > J$. In this scenario, let $(x, y, \tilde{y}) \in \mathcal{X} \times \mathcal{Y} \times \widetilde{\mathcal{Y}}$, where both $y$ and $\tilde{y}$ serve as labels for the feature $x$ under two different labeling criteria. The key constraint is the observation of only one label at each data center.

Driven by applications in the medical field, a ‘specialized center’ is established, and its limited dataset originates from the desired label space. This center is referred to as a server, and its dataset is denoted as $\mathcal{D}^s = \{(x_i^s, y_i^s) : i \in [n_K]\}$, where $y_i^s \in \mathcal{Y}$. Furthermore, a total of $N$ labeled data, characterized by a different criterion, is distributed across $C$ clients. For each $c \in [C]$, $S_c$ denotes the indices of data in $\mathcal{D}^c = \{(x_i^c, \tilde{y}_i^c) : i \in S_c\}$ on the $c$-th client. Here, $\tilde{y}_i^c \in \widetilde{\mathcal{Y}}$ represents labels from the alternative label space. The corresponding unobserved label in the desired label space is denoted as $y_i^c$. Importantly, we assume disjoint datasets across all centers, i.e., $S_i \cap S_j = \emptyset$. Let $N_c = |S_c|$, and the total labeled data is given by $N = \sum_{c \in [C]} N_c$. Our objective is to train a global classifier in the desired label space, denoted as $f : \mathcal{X} \to \Delta^{K-1}$, using data from different label spaces within the system.

3.2 Preliminary: Classical FL

We begin by examining the classical Federated Learning (FL) approach, specifically FedAvg (McMahan et al., 2017), wherein all centers adhere to the same labeling criterion. Consider the feature $x$ and label $y$ with joint density $p(x, y)$. A $K$-class classifier $f : \mathcal{X} \to \Delta^{K-1}$ models the class score as $p(y = k \mid x) = f_k(x)$, where $f_k$ denotes the $k$th element of $f$. The label is predicted via $\hat{y} = \arg\max_{k \in [K]} f_k(x)$.

In the classical FL setup, each client $c \in [C]$ possesses an independent identically distributed (IID) labeled training set $\mathcal{D}^c = \{(x_i^c, y_i^c)\}_{i=1}^{N_c}$ of size $N_c$. The objective of classical FL is to enable $C$ clients to jointly train a global classifier $f$ that generalizes well with respect to $p(x, y)$ without sharing their local data $\mathcal{D}^c$. With access to data in all centers, the overall risk is $R(f) = C^{-1} \sum_{c=1}^{C} \widehat{R}_c(f; \mathcal{D}^c)$, where

$$\widehat{R}_c(f; \mathcal{D}^c) = \frac{1}{N_c} \sum_{i=1}^{N_c} \ell_{CE}(f(x_i^c), y_i^c), \tag{1}$$

the cross-entropy loss is $\ell_{CE}(f^c(x), y) = -\sum_{k=1}^{K} \mathbb{1}(y = k) \log f_k^c(x)$, and $\mathbb{1}(\cdot)$ is the indicator function.

To minimize the overall loss $R(\cdot)$ without data sharing, FedAvg involves alternating between a few local stochastic gradient updates on clients using client data, followed by an average update on the server. However, in cases where labeling criteria differ across data centers, class scores across clients may not align. For instance, in a deep neural network, the number of neurons in the last layer may vary due to mismatches in the number of classes on different clients, leading to the failure of the server to average the model weights. Therefore, we propose a novel approach to deal with different labeling criteria.
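To make the alternation between local updates and server averaging concrete, the following is a minimal sketch in plain Python. It is an illustration under stated assumptions and not the authors' implementation: the flattened parameter list `Weights`, the helper names `local_sgd`, `fedavg_aggregate`, and `make_quadratic_grad`, and the toy quadratic client losses are all hypothetical. The average is uniform, mirroring the factor $C^{-1}$ in the overall risk above; practical FedAvg implementations often weight clients by sample count instead.

```python
from typing import Callable, List

Weights = List[float]  # flattened model parameters (illustrative stand-in for a network)


def local_sgd(weights: Weights, grad_fn: Callable[[Weights], Weights],
              lr: float, steps: int) -> Weights:
    """Run a few local gradient steps, starting from the current global weights."""
    w = list(weights)
    for _ in range(steps):
        g = grad_fn(w)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w


def fedavg_aggregate(client_weights: List[Weights]) -> Weights:
    """Uniformly average client parameters; fails if their shapes disagree."""
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("cannot average: parameter shapes differ across clients")
    n = len(client_weights)
    return [sum(w[d] for w in client_weights) / n for d in range(dim)]


def make_quadratic_grad(target: float) -> Callable[[Weights], Weights]:
    """Gradient of the toy client loss 0.5 * (w - target)^2 (hypothetical objective)."""
    return lambda w: [wi - target for wi in w]


# Two toy clients whose local optima are 1.0 and 3.0.
client_grads = [make_quadratic_grad(1.0), make_quadratic_grad(3.0)]
global_w: Weights = [0.0]
for _ in range(50):  # communication rounds
    updates = [local_sgd(global_w, g, lr=0.1, steps=5) for g in client_grads]
    global_w = fedavg_aggregate(updates)
print(global_w)  # close to [2.0], the minimizer of the averaged toy risk
```

The `ValueError` branch corresponds to the failure mode described above: if clients used output layers of different sizes, their parameter vectors could not be averaged coordinate by coordinate.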

3.3 Proposed Method

For classification problems, the standard CE loss, denoted as $\ell_{CE}$, assumes that the dimension of class scores matches the number of classes. In our scenario, we can construct the server CE loss $\widehat{R}_s(f; \mathcal{D}^s)$ as in (1) using server data. However, the class scores satisfy $f(x^c) \in \Delta^{K-1}$, while $\tilde{y}^c \in \widetilde{\mathcal{Y}}$ is a $J$-class label on clients. This mismatch prevents the direct use of the conventional CE loss on clients, prompting the question of how to evaluate risk on these clients. To leverage the communication efficiency of FedAvg, we employ identical backbones on both the server and the clients. Constructing loss functions on clients becomes crucial for our classification problem with mixed-type labels. The core idea behind our proposed method is to align class scores and labels through projection. Further details of the method are provided below.

Client loss construction Each client sample has an unobserved label in the desired label space $\mathcal{Y}$. For a given image $x^c$, our objective is to find the $K$-class scores $\{P(y^c = Y_k \mid x^c)\}_{k=1}^{K}$. According to the law of total probability:

$$\begin{aligned}
P(\tilde{y}^c = \widetilde{Y}_j \mid x^c) &= \sum_{k} P(\tilde{y}^c = \widetilde{Y}_j \mid y^c = Y_k, x^c)\, P(y^c = Y_k \mid x^c) \\
&= \sum_{k} P(\tilde{y}^c = \widetilde{Y}_j \mid y^c = Y_k)\, P(y^c = Y_k \mid x^c).
\end{aligned}$$

Here, the last equality is based on the assumption of instance independence in conditional probability. This equality expresses the $J$-class scores as a linear function of the $K$-class scores. Let $M \in [0,1]^{J \times K}$ with

$$M_{jk} = P(\tilde{y}^c = \widetilde{Y}_j \mid y^c = Y_k). \tag{2}$$

When $M$ is known, we naturally obtain the following local loss based on projected class scores:

$$\widehat{R}_c^{p}(f; \mathcal{D}^c) = \frac{1}{N_c} \sum_{i=1}^{N_c} \ell_{CE}(M f(x_i^c), \tilde{y}_i^c) \tag{3}$$

for any $c \in [C]$. As each local loss involves only samples from the corresponding client and does not require coordination with other clients, we can jointly optimize over all data centers using general FL strategies, such as FedAvg (McMahan et al., 2017), which we use, or other variants such as those of Li et al. (2020), Li et al. (2021), and Karimireddy et al. (2020).

Moreover, it is worth emphasizing that our proposed method readily extends to scenarios where label spaces differ across clients. This extension can be achieved by integrating the client-specific correspondence matrix, denoted as $M^c$, into the projection-based local loss (3).
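The projection-based loss in (3) can be illustrated with a short plain-Python sketch. This is only an illustration under assumptions: the function names `project_scores`, `projected_ce`, and `client_risk`, the clipping constant `eps`, and the example matrix `M` (with $J = 2$, $K = 3$, and one overlapping class) are hypothetical and are not taken from the paper.

```python
import math
from typing import List, Sequence


def project_scores(M: Sequence[Sequence[float]], f_x: Sequence[float]) -> List[float]:
    """Map K-class scores f(x) to J-class scores: (M f(x))_j = sum_k M[j][k] * f_k(x)."""
    return [sum(m_jk * f_k for m_jk, f_k in zip(row, f_x)) for row in M]


def projected_ce(M: Sequence[Sequence[float]], f_x: Sequence[float],
                 y_tilde: int, eps: float = 1e-12) -> float:
    """Cross-entropy between the projected scores M f(x) and the observed J-class label."""
    q = project_scores(M, f_x)
    return -math.log(max(q[y_tilde], eps))  # eps guards against log(0); an assumption


def client_risk(M: Sequence[Sequence[float]], scores: Sequence[Sequence[float]],
                labels: Sequence[int]) -> float:
    """Average projected cross-entropy over one client's samples, as in (3)."""
    return sum(projected_ce(M, f_x, y) for f_x, y in zip(scores, labels)) / len(labels)


# Hypothetical correspondence matrix: column k holds P(y_tilde = j | y = k),
# so every column sums to 1. Class 1 of the desired space overlaps both coarse classes.
M = [[1.0, 0.6, 0.0],
     [0.0, 0.4, 1.0]]
f_x = [0.2, 0.5, 0.3]          # K-class scores on the simplex
q = project_scores(M, f_x)     # J-class scores, also on the simplex
loss = projected_ce(M, f_x, y_tilde=0)
```

Because each column of `M` sums to one, the projected scores remain a probability vector, so the usual cross-entropy applies to the observed $J$-class label without changing the model's $K$-dimensional output layer.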

3.4 Estimation of the correspondence matrix

The correspondence matrix $M$ plays a crucial role in our proposed method. While the method description above assumes knowledge of the projection matrix, practical application often involves direct computation of $M$ using domain knowledge. However, such domain knowledge is not available in some cases. To address this, we introduce an effective procedure for estimating $M$ from the data.

Algorithm 1 FL using FedMT (Ours)

Server Input: server model $f^s$, small server dataset $\mathcal{D}^s = \{(x^s, y^s)\}$ where $y^s \in \mathcal{Y}$
Client Input: aggregation step-size $\eta_{agg}$, global communication round $R$, regularization parameters $\lambda_1$, $\lambda_2$, client dataset $\mathcal{D}^c = \{(x^c, \tilde{y}^c)\}$

For $r = 1 \to R$ rounds, run A on server and B & C on each client iteratively.

procedure A. ServerModelUpdate($r$)
    $f^s \leftarrow f$  ▷ Receive updated model from proc. C
    for $\tau = 1 \to t$ do
        $f^s \leftarrow f^s - \eta_{sgd} \cdot \nabla \widehat{R}_s(f^s; \mathcal{D}^s)$
    send $f^s$ to proc. B

procedure B. ClientModelUpdate($r$)
    $f^c \leftarrow f$  ▷ Receive updated model from proc. A
    Generate pseudo-labels $\hat{y}_i^c = f^c(x_i^c)$
    Construct high-confidence sample subset $S_c^{fix}(\xi) = \{i \in S_c : \max_k f_k^c(x_i^c) > \xi\}$
    if $S_c^{fix}(\xi) = \emptyset$ then
        stop & return.
    else
        Construct an equal-size mixup subset $S_c^{mix}(\xi) = \{$sample $|S_c^{fix}(\xi)|$ indices with replacement from $S_c\}$
    for $\tau = 1 \to t$ do
        for batch $(x_b^{fix}, \hat{y}_b^{fix}), (x_b^{mix}, \hat{y}_b^{mix})$ with $b \in S_c^{fix}, S_c^{mix}$ do
            $\lambda_{mix} \sim \mathrm{Beta}(a, a)$
            $x_{mix} \leftarrow \lambda_{mix} x_b^{fix} + (1 - \lambda_{mix}) x_b^{mix}$
            $L_{fix} \leftarrow \ell(f(A(x_b^{fix})), \hat{y}_b^{fix})$
            $L_{mix} \leftarrow \lambda_{mix}\, \ell(f(x_{mix}), \hat{y}_b^{fix}) + (1 - \lambda_{mix})\, \ell(f(x_{mix}), \hat{y}_b^{mix})$
            if $M$ is not known then
                Estimate $\widehat{M}$ via (4)
            Construct local loss $\widehat{R}_c^{reg}(f^c; \mathcal{D}^c)$ via (3.4)
            $f^c \leftarrow f^c - \eta_{sgd} \nabla \widehat{R}_c^{reg}(f^c; \mathcal{D}^c)$
    send $f^l - f$ to proc. C for $l \in [C]$

procedure C. ModelAgg($r$)
    receive model updates from proc. B
    $f \leftarrow f - \eta_{agg} \cdot \sum_{c \in [C]} (f^c - f)$
    broadcast $f$ to proc. A
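The mixup steps inside procedure B (sampling $\lambda_{mix}$, interpolating two inputs, and combining the two label losses) might be sketched as follows. This is a hedged illustration, not the paper's code: Algorithm 1 leaves the loss $\ell$ unspecified, so cross-entropy is assumed here, and `toy_model`, `mixup_loss`, and the example inputs are hypothetical stand-ins.

```python
import math
import random
from typing import Callable, List, Sequence


def cross_entropy(scores: Sequence[float], label: int, eps: float = 1e-12) -> float:
    """Negative log-score of the given label (assumed choice for the loss l)."""
    return -math.log(max(scores[label], eps))


def mixup_loss(model: Callable[[List[float]], List[float]],
               x_fix: Sequence[float], y_fix: int,
               x_mix: Sequence[float], y_mix: int,
               a: float, rng: random.Random) -> float:
    """Mixup loss for one pair of samples with lambda drawn from Beta(a, a)."""
    lam = rng.betavariate(a, a)
    # Convex combination of the two inputs.
    x_new = [lam * u + (1.0 - lam) * v for u, v in zip(x_fix, x_mix)]
    scores = model(x_new)
    # The same lambda weights the losses against the two (pseudo-)labels.
    return lam * cross_entropy(scores, y_fix) + (1.0 - lam) * cross_entropy(scores, y_mix)


def toy_model(x: Sequence[float]) -> List[float]:
    """Hypothetical 3-class classifier: softmax over simple logits of a 2-d input."""
    logits = [x[0], x[1], 0.0]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
loss = mixup_loss(toy_model, [2.0, 0.0], 0, [0.0, 2.0], 1, a=0.75, rng=rng)
```

When both samples and both labels coincide, the mixup loss reduces to the ordinary loss on that sample, which is a quick sanity check for any implementation of these steps.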

Recalling the definition of the correspondence matrix in (2), when both sets of labels are known for each sample, a practical estimate for $M_{jk}$ is to use its empirical version $\widetilde{M}_{jk} = \sum_{i \in S_c} \mathbb{1}(\tilde{y}_i^c = \widetilde{Y}_j, y_i^c = Y_k) / \sum_{i \in S_c} \mathbb{1}(y_i^c = Y_k)$. However, a challenge arises with this estimate as the true label $y_i^c$ in the desired label space remains unknown for client data. To overcome this, we use the aggregated model to predict $y_i^c$ and view the predicted pseudo-label as the true label for estimation. To enhance the efficiency of the estimator, we estimate the correspondence matrix using only samples with high-confidence pseudo-labels.

In particular, let $f^r$ be the aggregated model weight in the $r$th communication round. Let $\hat{y}_i^c = \arg\max_k f_k^r(x_i^c)$ represent the pseudo-label for the $i$th sample on the $c$th client. Additionally, define

$$S_c^{fix}(\xi) = \{i \in S_c : \max_k f_k^r(x_i^c) > \xi\}$$

as the set of high-confidence samples on the $c$th client in the $r$th communication round. The confidence threshold $0 < \xi < 1$ serves as a preselected hyperparameter for all clients. If, for a specific client $c$, we find that $S_c^{fix}(\xi) = \emptyset$, then the process halts and the client refrains from transmitting data to the server. Otherwise, the correspondence matrix on the $c$th client in the $r$th communication round is defined as

$$\widehat{M}_{jk}^c = \frac{\sum_{i \in S_c^{fix}(\xi)} \mathbb{1}(\tilde{y}_i^c = \widetilde{Y}_j, \hat{y}_i^c = Y_k)}{\sum_{i \in S_c^{fix}(\xi)} \mathbb{1}(\hat{y}_i^c = Y_k)}. \tag{4}$$

The estimated correspondence matrix also applies to the scenario where label spaces differ across clients.
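The estimator in (4) amounts to counting label co-occurrences among high-confidence samples and normalizing each column. A minimal plain-Python sketch follows, under stated assumptions: the function name `estimate_correspondence` and the example data are hypothetical, and because (4) is undefined (0/0) for a desired-space class that receives no confident pseudo-label, the sketch fills such columns with the uniform value $1/J$, which is a choice made here and not one specified in the text.

```python
from typing import List, Optional, Sequence


def estimate_correspondence(scores: Sequence[Sequence[float]],
                            y_tilde: Sequence[int],
                            J: int, xi: float) -> Optional[List[List[float]]]:
    """Estimate the J x K correspondence matrix from confident pseudo-labels.

    scores[i] holds the K-class scores for sample i; y_tilde[i] is its observed
    J-class label. Returns None when no sample exceeds the threshold xi.
    """
    K = len(scores[0])
    counts = [[0] * K for _ in range(J)]
    n_confident = 0
    for f_x, j in zip(scores, y_tilde):
        k = max(range(K), key=f_x.__getitem__)  # pseudo-label: argmax of the scores
        if f_x[k] > xi:                         # keep high-confidence samples only
            counts[j][k] += 1
            n_confident += 1
    if n_confident == 0:
        return None  # empty high-confidence set: the client halts for this round
    col_totals = [sum(counts[j][k] for j in range(J)) for k in range(K)]
    # Normalize each column; unseen pseudo-label classes get a uniform column (assumption).
    return [[counts[j][k] / col_totals[k] if col_totals[k] > 0 else 1.0 / J
             for k in range(K)] for j in range(J)]


# Hypothetical client data with K = 3 desired classes and J = 2 observed classes.
scores = [[0.90, 0.05, 0.05],
          [0.85, 0.10, 0.05],
          [0.10, 0.85, 0.05],
          [0.05, 0.90, 0.05],
          [0.40, 0.30, 0.30]]   # below the threshold, so it is ignored
y_tilde = [0, 0, 0, 1, 1]
M_hat = estimate_correspondence(scores, y_tilde, J=2, xi=0.8)
```

In this example the first pseudo-label class maps entirely to observed class 0, while the second is split evenly between the two observed classes, which is the non-nested overlap the method is designed to handle.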
