
Follow-up Attention: An Empirical Study of Developer and Neural Model Code Exploration

Matteo Paltenghi, Rahul Pandita, Austin Z. Henley, Albert Ziegler

Abstract—Recent neural models of code, such as OpenAI Codex and AlphaCode, have demonstrated remarkable proficiency at code generation due to the underlying attention mechanism. However, it often remains unclear how the models actually process code, and to what extent their reasoning and the way their attention mechanism scans the code match the patterns of developers. A poor understanding of the model reasoning process limits the way in which current neural models are leveraged today, so far mostly for their raw prediction. To fill this gap, this work studies how the processed attention signal of three open large language models (CodeGen, InCoder, and GPT-J) agrees with how developers look at and explore code when each answers the same sensemaking questions about code. Furthermore, we contribute an open-source eye-tracking dataset comprising 92 manually labeled sessions from 25 developers engaged in sensemaking tasks. We empirically evaluate five heuristics that do not use attention and ten approaches that post-process the attention signal of CodeGen against our ground truth of developers exploring code, including the novel concept of follow-up attention, which exhibits the highest agreement between model and human attention. Our follow-up attention method can predict the next line a developer will look at with 47% accuracy. This outperforms the baseline prediction accuracy of 42.3%, which uses the session history of other developers to recommend the next line. These results demonstrate the potential of leveraging the attention signal of pre-trained models for effective code exploration.

I. INTRODUCTION

Large language models (LLMs) pre-trained on code such as Codex [1], CodeGen [2], and AlphaCode [3] have demonstrated remarkable proficiency at program synthesis and competitive programming tasks. Yet our understanding of why they produce a particular solution is limited. In large-scale practical applications, the models are often used for their prediction alone, i.e., as generative models, and the way they reason about code internally largely remains untapped.

These models are often based on the attention mechanism [4], a key component of the transformer architecture [5]. Besides providing substantial performance benefits, attention weights have been used to provide interpretability of neural models [6, 7, 8]. Additionally, existing work [9, 10, 11, 12] suggests that the attention mechanism reflects or encodes objective properties of the source code processed by the model.

Matteo Paltenghi is with the University of Stuttgart, Stuttgart, Germany. E-mail: mattepalte@live.it. Work done while at GitHub Next for a research internship. Rahul Pandita and Albert Ziegler are with GitHub Inc, San Francisco, CA, USA. E-mail: {rahulpandita, wunderalbert}@github.com. Austin Z. Henley is with Microsoft Research, Redmond, WA, USA. E-mail: azh321@gmail.com.

We argue that just as software developers consider different locations in the code individually and follow meaningful connections between them, the self-attention of transformers connects and creates information flow between similar and linked code locations. This raises a question:

Are human attention and model attention comparable? And if so, can the knowledge about source code conveyed by the attention weights of neural models be leveraged to support code exploration?

Although there are other observable signals that might capture the concept of relevance, such as gradient-based methods [13, 14] or layer-wise relevance propagation [15], this work focuses on approaches using only the attention signal. We make this choice for two reasons: (1) almost all state-of-the-art models of code are based on the transformer block [5], and the attention mechanism is ultimately its fundamental component, so we expect the corresponding attention weights to carry directly meaningful information about the models’ decision process; (2) attention weights can be extracted almost for free during generation, with little runtime overhead, since the attention is computed automatically during a single forward pass.
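To illustrate why the weights come almost for free, the following is a minimal sketch of single-head causal scaled dot-product attention in plain Python. It is a toy stand-in under stated assumptions and not the implementation of any of the studied models: the function name `causal_self_attention` and the toy vectors are hypothetical, and real models use many heads and layers with learned projections. The point it shows is that the weight matrix is an intermediate result of the forward pass and can simply be returned alongside the output.

```python
import math

def causal_self_attention(queries, keys, values):
    """Single-head causal scaled dot-product attention (illustrative only).

    Returns (outputs, weights), where weights[i][j] is how strongly
    position i attends to position j, with j <= i.
    """
    dim = len(queries[0])
    n = len(queries)
    outputs, weights = [], []
    for i, q in enumerate(queries):
        # Causal mask: position i only sees positions 0..i.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        # Numerically stable softmax over the visible positions.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps] + [0.0] * (n - i - 1)
        weights.append(row)
        # The output is the attention-weighted sum of the value vectors.
        outputs.append([
            sum(row[j] * values[j][d] for j in range(n))
            for d in range(len(values[0]))
        ])
    return outputs, weights

# Toy 3-token input with 2-dimensional vectors (made-up numbers).
toy = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(toy, toy, toy)
```

Each row of `weights` sums to one and is zero for future positions, so it can be read as a distribution over the earlier tokens that a given token attends to.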

Answering the main question of this study requires a dataset tracking developers’ attention. In this work, we use visual attention as a proxy for the elements to which developers are paying mental attention while looking at code. However, the existing datasets of visual attention are not suitable for our purposes. They either put developers in an unnatural, and thus possibly biasing, environment where most of the view is blurred [8] and participants must move the mouse over tokens to reveal them, or they contain few and very specific code comprehension tasks [16] on code snippets too short to exhibit any interesting code navigation pattern. The blurring method in particular forces unnatural interactions, which may affect how developers explore and understand code. To address these limitations and stimulate developers to not only glance at code but also reason deeply about it, we prepare an ad-hoc code understanding assignment called the sensemaking task. This involves questions on code, including mental code execution, side-effect detection, algorithmic complexity, and deadlock detection. Moreover, using eye-tracking, we collect and share a dataset of 92 valid sessions with developers.
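As a rough illustration of using visual attention as a proxy, the sketch below aggregates eye-tracking fixations into a per-line attention distribution. The record format, a list of `(line_number, duration_ms)` pairs, and the function name `line_attention` are assumptions made for this example; they are not the schema of the shared dataset.

```python
from collections import defaultdict

def line_attention(fixations):
    """Turn (line_number, duration_ms) fixations into a normalized
    per-line distribution of viewing time (hypothetical record format)."""
    totals = defaultdict(float)
    for line, duration_ms in fixations:
        totals[line] += duration_ms
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    # Share of total fixation time spent on each line, ordered by line number.
    return {line: t / grand_total for line, t in sorted(totals.items())}

# Made-up session: the developer looks at line 3 twice, then lines 4 and 10.
session = [(3, 200.0), (4, 100.0), (3, 300.0), (10, 400.0)]
attention = line_attention(session)
```

A distribution of this shape is one plausible form in which human attention could be compared against a model's line-level attention.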

On the neural model side, motivated by some recent successful applications of few-shot learning in code generation and code summarization [17, 18] and even zero-shot in program repair [19], the sensemaking task is designed to be a zero-shot task for the model with a specific prompt that triggers it to reason about the question at hand. Then we query three LLMs of code, namely CodeGen [2], InCoder [20]

arXiv:2210.05506v2 [cs.SE] 29 Aug 2024

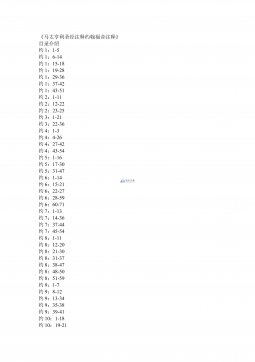
