Model and Data Transfer for Cross-Lingual Sequence Labelling in Zero-Resource Settings

Iker García-Ferrero, Rodrigo Agerri, German Rigau

HiTZ Basque Center for Language Technologies - Ixa NLP Group
University of the Basque Country UPV/EHU
{iker.garciaf,rodrigo.agerri,german.rigau}@ehu.eus

Abstract

Zero-resource cross-lingual transfer ap-p...

作者详情

相关内容

-

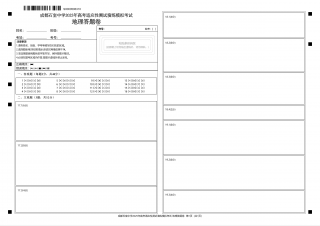

[16] 学位英语:2007年阅读理解分析

分类:高等教育

时间:2025-04-08

标签:无

格式:DOC

价格:5.9 玖币

-

[15] 学位英语:2006年阅读理解分析

分类:高等教育

时间:2025-04-08

标签:无

格式:DOC

价格:5.9 玖币

-

[14] 学位英语:2005年阅读理解分析

分类:高等教育

时间:2025-04-08

标签:无

格式:DOC

价格:5.9 玖币

-

[13] 学位英语:2004年阅读理解分析

分类:高等教育

时间:2025-04-08

标签:无

格式:DOC

价格:5.9 玖币

-

[10] 学位英语:长难句拆分(二)

分类:高等教育

时间:2025-04-08

标签:无

格式:DOC

价格:5.9 玖币
