also achieve great performance for graph-structured data. With the success of Manifold-Mixup, utilizing Mixup-based data augmentation in graph learning has emerged as a mainstream paradigm.

As indicated by existing studies [10, 11], Mixup-based graph learning is mainly influenced by two factors: 1) the hyperparameters of Mixup itself, such as the Mixup ratio that balances the proportion of the source data, and 2) the Mixup strategies that are associated with representation generation. Of these two factors, the hyperparameter issue is common to many fields in which Mixup is applied and has been extensively studied in fields such as image classification [12, 13]. The second issue, Mixup strategies, is closely tied to the context in which Mixup is applied, and for graph-structured data its influence has not been well studied.

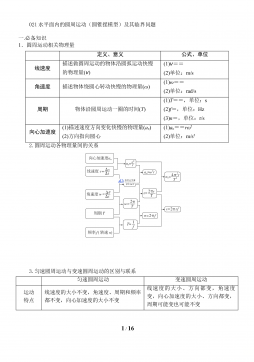

In the context of Mixup-based graph learning, as shown in Figure 1, Manifold-Mixup takes its inputs from the graph pooling layer, which uses graph pooling operators (e.g., Max-pooling) to produce coarsened representations of the given graph while preserving its semantic information. This layer is thus the key to representation generation in Manifold-Mixup, because Manifold-Mixup generates augmented training data by interpolating these representations. Therefore, the performance of Manifold-Mixup can be strongly affected by the choice of graph pooling operator. Recent works [14, 15, 16] have attempted to systematically analyze the importance of graph pooling in representation generation; however, the question of how different graph pooling operators affect the effectiveness of Mixup-based graph learning remains open.
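As a rough illustration of the pipeline described above, the following sketch pools two toy graphs with Max-pooling and then interpolates the pooled representations and their one-hot labels, which is the basic Mixup operation. The node embeddings, the labels, and the fixed ratio `lam` are hypothetical values chosen for the example, and the helper names are not taken from the paper's code.

```python
def max_pool(node_features):
    """Column-wise maximum over node feature vectors (a Max-pooling readout)."""
    return [max(column) for column in zip(*node_features)]


def mixup(rep_a, rep_b, lam):
    """Element-wise linear interpolation: lam * a + (1 - lam) * b."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(rep_a, rep_b)]


# Toy node embeddings (hypothetical): one row per node, as a GNN layer might output.
graph_a = [[0.2, 1.0], [0.8, 0.4], [0.5, 0.1]]
graph_b = [[1.0, 0.0], [0.0, 2.0]]

# One-hot labels for a two-class task (hypothetical).
label_a = [1.0, 0.0]
label_b = [0.0, 1.0]

# Pooling turns a variable number of nodes into one fixed-size graph vector.
rep_a = max_pool(graph_a)  # [0.8, 1.0]
rep_b = max_pool(graph_b)  # [1.0, 2.0]

# Interpolate the pooled representations and the labels with the same ratio.
lam = 0.75  # fixed here for readability; Mixup normally samples this ratio
mixed_rep = mixup(rep_a, rep_b, lam)
mixed_label = mixup(label_a, label_b, lam)

print(mixed_rep, mixed_label)
```

Because the interpolation acts on whatever vector the pooling layer emits, swapping `max_pool` for another readout changes the augmented samples directly, which is the dependency this paper studies.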

In this paper, we tackle this problem by empirically analyzing how Mixup behaves when it is applied to graph representations generated by different graph pooling operators. Specifically, we focus on two types of graph pooling methods: standard pooling methods and a unifying formulation of hybrid (mixture) pooling operators. For standard pooling, we consider Max-pooling, the most widely used operator [15], and the state-of-the-art graph multiset transformer pooling (GMT) [17], a global pooling layer based on multi-head attention that captures node interactions according to their structural dependencies. For hybrid pooling, we extend prior work [16, 18] and design 9 types of hybrid pooling strategies; details are given in Table 1. Here, GMT and the hybrid pooling operators are considered the more advanced strategies. We conduct empirical experiments to evaluate the effectiveness of graph learning using Manifold-Mixup [11] under different hybrid pooling operators. In total, our experiments cover diverse types of datasets, including two programming languages (Java and Python) and one natural language (English); two widely studied graph-level classification tasks (program classification and fake news detection); and six GNN model architectures. Based on these experiments, we answer the following research questions:

RQ1: How effective are hybrid pooling operators for enhancing the accuracy of Mixup-based graph learning? The results on NLP datasets (Gossipcop and Politifact, used for fake news detection) show that the hybrid pooling operator Type 1 ($M_{sum}(P_{att}, P_{max})$) outperforms GMT by up to 4.38% accuracy. On PL datasets (JAVA250 and Python800, used for program classification), the same Type 1 operator surpasses GMT by up to 2.36% accuracy.

RQ2: How effective are hybrid pooling operators for enhancing the robustness of Mixup-based graph learning? The results demonstrate that, in terms of robustness, the hybrid pooling operator Type 6 ($M_{concat}(P_{att}, P_{sum})$) surpasses GMT by up to 23.23% in fake news detection, while the hybrid pooling operator Type 1 ($M_{sum}(P_{att}, P_{max})$) outperforms GMT by up to 10.23% in program classification.

RQ3: How does the hyperparameter setting affect the effectiveness of Manifold-Mixup when hybrid pooling operators are applied? According to [8], the hyperparameter $\lambda$ denotes the interpolation ratio, and it is sampled from a Beta distribution with shape parameter $\alpha$, i.e., $\lambda \sim \mathrm{Beta}(\alpha, \alpha)$. Existing works [10, 11] show that the setting of $\lambda$ affects the performance of Mixup. Therefore, we study the effectiveness of Manifold-Mixup when using hybrid pooling operators under different hyperparameters of Mixup. Experimental results indicate that a smaller value of the hyperparameter leads to better robustness and accuracy.
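To make the notation in the research questions more concrete, the sketch below shows one way a sum-merge and a concat-merge of two pooled vectors could look, and how the interpolation ratio can be drawn from Beta(α, α). It is only an illustration under stated assumptions: `att_pool` is a simplified softmax-weighted readout with a hypothetical scoring vector `query`, not the attention pooling used in the paper, and all names and toy values are invented for the example.

```python
import math
import random


def max_pool(nodes):
    return [max(col) for col in zip(*nodes)]


def sum_pool(nodes):
    return [sum(col) for col in zip(*nodes)]


def att_pool(nodes, query):
    """Softmax-weighted sum of node vectors (simplified stand-in for attention pooling)."""
    scores = [sum(q * x for q, x in zip(query, node)) for node in nodes]
    peak = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(nodes[0])
    return [sum(w / total * node[i] for w, node in zip(weights, nodes)) for i in range(dim)]


def merge_sum(p, q):
    """Sum-merge: element-wise addition, output keeps the input width."""
    return [a + b for a, b in zip(p, q)]


def merge_concat(p, q):
    """Concat-merge: output width is the sum of both input widths."""
    return list(p) + list(q)


# Toy node embeddings and a hypothetical scoring vector for att_pool.
nodes = [[0.2, 1.0], [0.8, 0.4], [0.5, 0.1]]
query = [1.0, -1.0]

type1_like = merge_sum(att_pool(nodes, query), max_pool(nodes))     # width 2
type6_like = merge_concat(att_pool(nodes, query), sum_pool(nodes))  # width 4

# Sampling the interpolation ratio: lambda ~ Beta(alpha, alpha).
rng = random.Random(0)


def mean_distance_from_half(alpha, n=2000):
    """Average |lambda - 0.5| over n draws; larger means lambda sits nearer 0 or 1."""
    return sum(abs(rng.betavariate(alpha, alpha) - 0.5) for _ in range(n)) / n


spread_small_alpha = mean_distance_from_half(0.1)
spread_large_alpha = mean_distance_from_half(4.0)
print(len(type1_like), len(type6_like), spread_small_alpha > spread_large_alpha)
```

For α below 1 the Beta(α, α) density is U-shaped, so sampled ratios cluster near 0 or 1 and each mixed sample stays close to one of its two sources; for larger α the ratios concentrate around 0.5 and the two sources are blended more evenly.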

In summary, the contributions of this paper are as follows:

• This is the first work that explores the potential influence of graph pooling operators on Mixup-based graph-structured data augmentation. To facilitate reproducibility, our code and data are available online¹.

• We discuss and further extend the hybrid pooling operators from existing works.

¹ https://github.com/zemingd/HybridPool4Mixup
