Conformal Prediction for Hidden Markov Models with Exact Validity

plying that, for k= 1 ...,T + 1,

P(T+1

X

t=1

1(σ(Xt, Yt)≤σ(XT+1, YT+1)) ≤k)=k

T+ 1.

(7)

Then (7) and (6) yield the coverage guarantee in (5).

Therefore, exchangeability is the crucial assumption in the

classical conformal prediction framework. As such, the clas-

sical conformal prediction framework does not guarantee

the desired coverage

(5)

in non-exchangeable settings such

as the HMM setting that we are dealing with.

2.2. Relaxing the Assumption of Exchangeability in

Conformal Prediction

Several works in the literature aimed to generalize the classi-

cal conformal prediction framework summarized in Sec. 2.1

to non-exchangeable settings. We briefly discuss some of

those works that are most relevant to our work below.3

Our work is in particular motivated by the approach pre-

sented in (Chernozhukov et al.,2018) that proposed a

method to extend the conformal prediction framework to

time series data via a randomization method which accounts

for potential temporal dependencies in the data. The key

idea is to construct an algebraic group of block-wise per-

mutations (instead of the element-wise permutations used

in classical conformal prediction) such that each permuta-

tion in that group is likely to preserve the potential tempo-

ral dependencies. Extending the work in (Chernozhukov

et al.,2018) further, (Xu & Xie,2021) proposed to derive

prediction intervals using an ensemble of bootstrapped esti-

mators to avoid having to split data into blocks. However,

when exchangeability assumption fails, the approaches pre-

sented in (Chernozhukov et al.,2018;Xu & Xie,2021) are

only approximately valid (i.e., not guaranteed to satisfy the

bound

(2)

). In contrast, the aim of our work is to devise a

method that is guaranteed to satisfy the bound

(2)

in any

unknown HMM. In particular, the approach we present is

based on constructing a block-wise permutation (which ex-

ploits the de Finetti’s theorem for Markov chains reviewed in

Sec. 3.1) that adapts to the observed sequence

{(Xt, Yt)}T

t=1

in a manner that guarantees the exact exchangeability of the

blocks. As a consequence, the method that we propose is

exactly valid in the sense that it is guaranteed to achieve the

bound (2).

In another direction, (Cherubin & Nouretdinov,2016;

Stankeviciute et al.,2021) proposed to apply the classical

conformal prediction algorithm as a solution when multiple

independent calibration sequences are available. However,

3

We refer the reader to (Fontana et al.,2023) for a comprehen-

sive review of more versions of the classical conformal prediction

framework.

our problem (stated in Sec. 1) assumes that only one real-

ized sequence is available and since a Markov chain is not

exchangeable in general, the classical conformal prediction

framework is not applicable to our setting.

Making conformal prediction robust to changes in the un-

derlying distributions has also received significant attention

in the literature that focuses on generalizing conformal pre-

diction. When the distributions of calibration states and

test states are both exchangeable but different from each

other, (Tibshirani et al.,2019) proposed an approach based

on re-weighting the calibration data with a likelihood ratio

(of test and training distributions). (Cauchois et al.,2020;

Gibbs & Candes,2021;Barber et al.,2022) proposed meth-

ods for even more general settings such as arbitrary number

of changes in both the state and observation distributions,

etc. In contrast to (Tibshirani et al.,2019;Cauchois et al.,

2020;Gibbs & Candes,2021;Barber et al.,2022), our aim

is to extend the conformal prediction framework specifically

to HMMs with exact validity (instead of approximate va-

lidity) for any sequence length. Additionally, our approach

is based on finding exchangeable blocks in the Markovian

data whereas (Tibshirani et al.,2019;Cauchois et al.,2020;

Gibbs & Candes,2021;Barber et al.,2022) utilize meth-

ods such as online updates to reflect the difference between

empirically achieved confidence and target confidence, non-

uniformly weighting calibration data, etc.

3. Quantifying the Uncertainty in Hidden

Markov Models via Conformal Prediction

In this section, we first review the notions of mixtures of

Markov chains and partial exchangeability. We then exploit

a characterization of partial exchangeability in terms of mix-

tures of Markov chains presented in (Diaconis & Freedman,

1980) to extend the classical conformal prediction frame-

work to Hidden Markov Models in a manner that provably

preserves all its key theoretical guarantees.

3.1. Mixtures of Markov Chains and Partial

Exchangeability

This subsection provides a brief review of the main result of

(Diaconis & Freedman,1980) which characterizes a mixture

of Markov chains as a partially exchangeable process.

Formally, a process

X1, X2, . . .

is a mixture of Markov

chains, if there exists a probability measure

µ

on the space

of all

n×n

stochastic matrices

P

(for the state space

X={1,2, . . . , n}) such that,

P{Xt=xtfor 1≤t≤T}=ZP

T−1

Y

t=1

Pxtxt+1 µ(dP )

(8)

for any sequence of states

x1, x2, . . . , xT∈ X

. (Di-

3

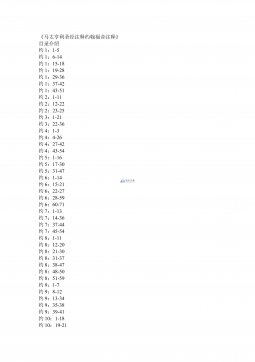

2024-12-10 56

2024-12-10 56

2024-12-10 84

2024-12-10 84

2024-12-26 163

2024-12-26 163

2024-12-26 421

2024-12-26 421

2024-12-26 282

2024-12-26 282

2024-12-26 386

2024-12-26 386

2025-08-09 237

2025-08-09 237

2025-08-09 61

2025-08-09 61

2025-08-18 12

2025-08-18 12

2025-11-20 47

2025-11-20 47

渝公网安备50010702506394

渝公网安备50010702506394