Causal Structure Learning with Recommendation System

Shuyuan Xu†, Da Xu‡, Evren Korpeoglu‡, Sushant Kumar‡

Stephen Guo§, Kannan Achan‡, Yongfeng Zhang†

†Rutgers University ‡Walmart Labs §Indeed

shuyuan.xu@rutgers.edu,{da.xu,EKorpeoglu,Sushant.Kumar}@walmart.com

sguo@indeed.com,kannan.achan@walmart.com,yongfeng.zhang@rutgers.edu

ABSTRACT

A fundamental challenge of recommendation systems (RS) is understanding the causal dynamics underlying users’ decision making. Most existing literature addresses this problem by using causal structures inferred from domain knowledge. However, there are numerous phenomena where domain knowledge is insufficient, and the causal mechanisms must be learnt from the feedback data. Discovering the causal mechanism from RS feedback data is both novel and challenging, since RS itself is a source of intervention that can influence both the users’ exposure and their willingness to interact. For the same reason, most existing solutions become inappropriate, since they require data collected free from any RS. In this paper, we first formulate the underlying causal mechanism as a causal structural model and describe a general causal structure learning framework grounded in the real-world working mechanism of RS. The essence of our approach is to acknowledge the unknown nature of RS intervention. We then derive the learning objective from our framework and propose an augmented Lagrangian solver for efficient optimization. We conduct both simulation and real-world experiments to demonstrate how our approach compares favorably to existing solutions, together with empirical analysis from sensitivity and ablation studies.

KEYWORDS

Recommender Systems, Causal Discovery, Explainability, Graphical Model, Structural Equation, Unknown Intervention

1 INTRODUCTION

In recent years, there has been growing interest in understanding how the actions taken by a recommendation system (RS) can induce changes to the subsequent feedback data. This type of causal reasoning is critical to the explainability, fairness, and transparency of RS. In contrast to machine learning, which primarily focuses on data-driven problem solving, causal discovery investigates the data-generating mechanism and tries to understand how the observations are formed. Therefore, depending on the question of interest, the fact that RS itself can interfere with the users’ feedback can be both troublesome and useful.

For example, most machine learning models assume that the collected data is generated by a static distribution. However, making a recommendation is likely to intervene in the user’s decision making, thus changing the potential feedback [49]. Also, notice that RS interventions are often systematic, meaning they are designed by developers in specific patterns rather than being purely random, which is very different from ordinary statistical fluctuations [5]. Therefore, when it comes to machine learning and evaluation, those interventions will cause various types of bias, and a great deal of literature has been devoted to addressing such issues [8]. On the other hand, discovering causal relationships can benefit significantly from systematic interventions. The reason is that when an intervention takes place during the data-generation process, the systematic changes it causes provide an opportunity for us to track down the underlying cause-effect mechanisms.

To our knowledge, discovering causal mechanisms with RS has rarely been studied previously due to various challenges. Most existing solutions cannot handle unknown interventions made by RS that are unrelated to causal discovery. Consequently, the RS community relies primarily on causal mechanisms inferred from domain knowledge [50, 52], but these often lack the coverage, versatility, and ability to explain many phenomena of interest. With causal inference emerging as a key instrument for many RS studies and applications, it becomes imperative to learn the desired causal mechanism from RS feedback data.

However, unlike other scientific fields (such as clinical trials) where interventions are purposefully made to elucidate the causal mechanisms of interest [27], the interventions made by RS are primarily designed to increase the users’ engagement and revenue. Worse yet, we may not even be able to tell whether a recommendation has indeed changed the users’ decision making, which means the intervention from RS is of an unknown nature. As a result, with the existing solutions, it is nearly impossible to identify the underlying causal mechanisms using RS feedback data alone.

An important observation that makes causal discovery possible, even with unknown intervention, is that an effect given its causes remains invariant to changes in the mechanism that generates the causes [30]. It implies that while the recommendation made by RS can interfere with what causes a user to give the feedback, the causal mechanism behind the user’s decision making is unaltered regardless of the interference. The converse does not hold: the occurrence of a cause given the effect is not invariant under outside interference. As we discuss later, this critical asymmetry can help us identify the cause-effect relationship in the RS feedback data.
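The asymmetry described above can be illustrated with a small simulation. The following is a minimal sketch under assumptions that are not from the paper: a single cause X with a linear effect Y = 2X + noise, Gaussian noise, and an outside intervention that only shifts and rescales the distribution of X. The helper names `simulate` and `fit_line` and all numeric settings are hypothetical, chosen for illustration only.

```python
import numpy as np

def simulate(mean_x, std_x, n, rng):
    """Draw the cause X, then generate the effect Y from a fixed mechanism.

    The mechanism Y = 2*X + noise is an illustrative assumption; only the
    distribution of X differs between the two regimes.
    """
    x = rng.normal(mean_x, std_x, size=n)
    y = 2.0 * x + rng.normal(0.0, 2.0, size=n)
    return x, y

def fit_line(inputs, targets):
    """Least-squares fit targets ~ slope * inputs + intercept."""
    slope, intercept = np.polyfit(inputs, targets, deg=1)
    return slope, intercept

rng = np.random.default_rng(0)
n = 20000

# Regime 1: no interference with how the cause is generated.
x_obs, y_obs = simulate(0.0, 1.0, n, rng)
# Regime 2: an outside intervention changes the mechanism generating X.
x_int, y_int = simulate(3.0, 2.0, n, rng)

# Causal direction (effect given cause): the fit should agree across regimes.
forward_obs = fit_line(x_obs, y_obs)
forward_int = fit_line(x_int, y_int)

# Anti-causal direction (cause given effect): the fit should shift.
backward_obs = fit_line(y_obs, x_obs)
backward_int = fit_line(y_int, x_int)

print("Y given X:", forward_obs, forward_int)
print("X given Y:", backward_obs, backward_int)
```

Under these assumptions, the regression of Y on X recovers a slope close to 2 in both regimes, whereas the population slope of X on Y is cov(X, Y) / var(Y), which moves from 2/8 = 0.25 to 8/20 = 0.4 when the intervention changes the variance of X. Comparing which conditional stays stable across regimes is the kind of signal that an invariance-based method can exploit, although this sketch is not the learning procedure proposed in the paper.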

Rather than making unrealistic assumptions to consolidate the unknown interventions of RS, we propose a novel modelling technique through a mixture of competing mechanisms. The high-level intuition is similar to that of the classical mixture of distributions [32], but we make two significant advances:

(1) the expectations are now taken with respect to an expert (a stochastic indicator function) that judges the winner of the competing mechanisms, namely, the recommendation mechanisms and the causal mechanism;

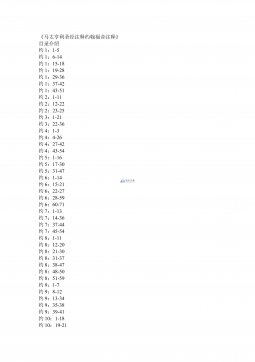

arXiv:2210.10256v1 [cs.IR] 19 Oct 2022
