
COFFEE: Counterfactual Fairness for Personalized Text Generation in Explainable Recommendation

Nan Wang1,3∗, Qifan Wang2, Yi-Chia Wang2, Maziar Sanjabi2, Jingzhou Liu2, Hamed Firooz2, Hongning Wang3, Shaoliang Nie2

1Netflix Inc., Los Gatos, California, USA

2Meta AI, Menlo Park, CA, USA

3University of Virginia, VA, USA

Abstract

As language models become increasingly integrated into our digital lives, Personalized Text Generation (PTG) has emerged as a pivotal component with a wide range of applications. However, the bias inherent in user-written text, often used for PTG model training, can inadvertently associate different levels of linguistic quality with users’ protected attributes. The model can inherit the bias and perpetuate inequality in generating text w.r.t. users’ protected attributes, leading to unfair treatment when serving users. In this work, we investigate fairness of PTG in the context of personalized explanation generation for recommendations. We first discuss the biases in generated explanations and their fairness implications. To promote fairness, we introduce a general framework to achieve measure-specific counterfactual fairness in explanation generation. Extensive experiments and human evaluations demonstrate the effectiveness of our method.

1 Introduction

Personalized text generation (PTG) has extensive applications, such as explainable recommendation (Zhang and Chen, 2020; Chen et al., 2021), post generation (Yuan and Huang, 2019; He et al., 2021), and conversational systems (Zhang et al., 2018, 2019; Lee et al., 2021). The auto-generated text, functioning at the frontier of human-machine interaction, influences users’ decisions and transforms their way of thinking and behaving. However, due to its immense power and wide reach, PTG can inadvertently give rise to fairness issues and lead to unintended consequences (Alim et al., 2016; Bordia and Bowman, 2019; Blodgett et al., 2020).

In this work, we investigate the fairness issues

in PTG, focusing on one of the mostly studied set-

tings: generating natural language explanations for

∗

The research was conducted when the author was an in-

tern at Meta and a PhD candidate at the University of Virginia.

Correspondence to nanw@netflix.com

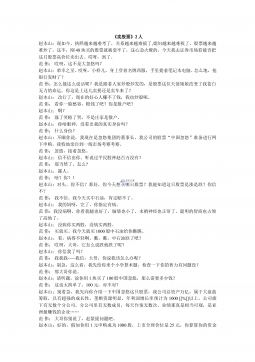

Figure 1: Left: average FeatCov of ground-truth ex-

planations in the train set. Right: average FeatCov of

explanations generated on the test set by PETER.

recommendations (Wang et al.,2018;Chen et al.,

2021;Yang et al.,2021;Li et al.,2021a;Yang et al.,

2022). Personalized explanation generation aims

to provide a user with descriptive paragraphs on

recommended items that align with his/her pref-

erences, enabling more informative and accurate

decision-making. The generators are typically lan-

guage models trained on user written reviews from

e-commerce platforms (Zhang et al.,2018;Ni et al.,

2019;Yang et al.,2021), where sentences related

to item descriptions are retained to construct the

ground-truth explanations. However, due to histori-

cal, social, or behavioral reasons, inherent bias may

exist within the review text, associating specific

linguistic characteristics with the users’ protected

attributes such as gender or race (Newman et al.,

2008;Alim et al.,2016;Volz et al.,2020). While

certain linguistic features that capture the diversity

of language use (Newman et al.,2008;Groenwold

et al.,2020) are suitable for personalization, others

pertaining to the linguistic quality of explanations,

such as informativeness or detailedness, (Louis,

2013), should be excluded. Failure to do so can

result in unfair treatment when serving users.

As an example, we investigate the explanation

generation on Amazon Movies

1

with the personal-

ized transformer model PETER (Li et al.,2021a).

We adopt feature coverage (FeatCov, the number

of unique features mentioned about a movie) as

an automatically measurable metric of explanation

1The details about experiment setup is in Section 6.

arXiv:2210.15500v2 [cs.CL] 22 Oct 2023

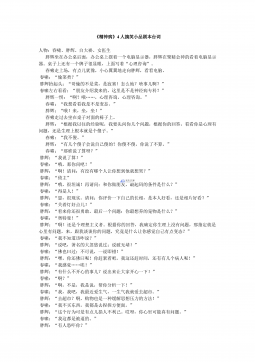

Figure 2: FeatCov vs. human evaluated quality mea-

sures. Each measure is rated in a five-category scale.

p

-valus < 0.05 in Kruskal-Wallis H test for all three

criteria (see Appendix Dfor detailed test results).

quality. As shown in Figure 1(left), reviews from

male users generally have higher FeatCov on the

target movies than those from female users (Fan-

Osuala,2023)

2

. A model trained on such data can

inherit the bias and generate explanations discrim-

inately when serving users—higher FeatCov for

males than females, as indicated in Figure 1(right).

To further substantiate the bias issue, we conducted

a user study and found strong positive correlation,

shown in Figure 2, between FeatCov and human

evaluated quality criteria including informativeness,

detailedness and helpfulness. The observations re-

main consistent when results from male and female

human evaluators are analyzed separately, affirm-

ing that both genders consider FeatCov a signifi-

cant indicator of quality. This study demonstrates

the bias in FeatCov as a concerning issue for PTG.

Details of the user study are in Section 7.

In particular, the bias observed in training data originates from various factors, such as different demographic behavior patterns, that are beyond our control. But the system should not inherit the bias and act in a discriminatory manner by generating explanations of different quality for different users: the lack of informative reviews from users of a demographic group should not prevent them from receiving informative explanations. Without proper intervention, the system can exhibit such bias, thus adversely affecting the users’ experience, reliance and trust in the system (Tintarev and Masthoff, 2015). From a broader perspective, the bias can reinforce itself by influencing how users write online, and jeopardize fairness in the long run (Schwartz et al., 2016; Alim et al., 2016; Bordia and Bowman, 2019).

To mitigate the issue, we take a causal perspective to dissect the bias and enforce counterfactual fairness (CF) (Kusner et al., 2017) in personalized explanation generation: the quality of generated explanations should not differentiate a user in the real world vs. in the counterfactual world where only the user’s protected attribute (e.g., gender) is changed. This problem is essential and unique compared to fairness problems in the literature (Section 2), and imposes specific technical challenges (Section 4). To achieve the goal, we develop a general framework, COFFEE, for COunterFactual FairnEss in Explanation generation. COFFEE treats a user’s protected attribute value as a separate token input to the model, and disentangles its representation from the user’s representation (Ma et al., 2019; Locatello et al., 2019b; Zheng et al., 2021). By controlling the input attribute values for counterfactual inference (CI), we impose a measure-specific CF constraint (Russell et al., 2017) on generated explanations. Then a novel fair policy learning scheme is developed to optimize CF with carefully designed rewards, which generalizes to any single quality measure or combination of quality measures. We use user-side fairness by default in discussions, but COFFEE is general enough to ensure fairness on either the user or the item side in the two-sided market (Wang and Joachims, 2021), and can be adapted to different models. Extensive experiments and rigorous user studies demonstrate COFFEE’s superiority in achieving fairness while maintaining high generation performance compared to baselines.

² We use binary gender for the case study, but our work generalizes to any binary or non-binary attributes.

2 Background

Uniqueness and Importance: Fairness in machine learning (ML) was originally studied in automatic decision-making systems that directly impose "significant" or "legal" effects on individuals (Voigt and Bussche, 2017). Such fairness considerations often revolve around resource allocation by ML models, exemplified in contexts like loan assessments (Lee and Floridi, 2021) or job applications (Singh and Joachims, 2018), where model predictions can unfavorably affect a protected group (Du et al., 2021; Mehrabi et al., 2021).

In contrast to resource allocation fairness, NLP researchers primarily examine representational fairness (Blodgett et al., 2020; Liang et al., 2021; Sheng et al., 2021) regarding how language models shape social biases and stereotypes through natural language understanding (NLU) or generation (NLG). In the realm of NLG, fairness particularly concerns how generated text may contain biased information about a specific demographic group. For instance, Huang et al. (2020) analyze sentence completion by a GPT-2 model, and find different sentiment distributions of completed sentences when the occupation word is counterfactually changed in the prompts. Bordia and Bowman (2019) reveal a more frequent co-occurrence of 'doctor' with male pronouns and 'nurse' with female pronouns in generated text. However, these biases, directly encapsulated within the text, can be more easily analyzed. To the best of our knowledge, our work is pioneering in exploring how personalization, bridging NLP and recommendation, associates the bias in NLG with protected attributes of users.

Fairness Notions: Various fairness notions exist in the literature, with group-wise fairness notions being the first to be studied (Zafar et al., 2015; Hardt et al., 2016; Zafar et al., 2017). Yet, group-wise fairness has different quantitative definitions that are generally incompatible (Kleinberg et al., 2017; Berk et al., 2021). Some definitions can even exacerbate discrimination (Kusner et al., 2017). Individual fairness (Zemel et al., 2013; Joseph et al., 2016) requires similar users to receive similar predictions, but it relies on carefully chosen domain-specific similarity metrics (Dwork et al., 2012). In contrast, counterfactual fairness (CF) (Kusner et al., 2017), considering fairness from a causal perspective, has gained prominence recently as a more robust fairness notion (Russell et al., 2017; Wu et al., 2019; Makhlouf et al., 2020), which can also enhance group-wise fairness in certain scenarios (Zhang and Bareinboim, 2018; Khademi et al., 2019).

Though CF has been studied in some non-personalized NLP tasks (Huang et al., 2020; Garg et al., 2019), most existing works study the dependency of model outputs on attribute-specific words within the input text (Blodgett et al., 2020; Liang et al., 2021; Sheng et al., 2021). In such cases, CI can be easily performed on the input text itself, such as changing male pronouns to female pronouns (Huang et al., 2020; Garg et al., 2019). However, CF in PTG necessitates CI on the protected attributes of the users being served, an area yet to be thoroughly explored.

3 Problem Formulation

In the following discussions, we consider a single protected attribute on the user side for simplicity, but our proposed framework is versatile enough to accommodate multiple attributes on either the user or the item side. The value of a user’s protected attribute is denoted by a variable $A \in \mathcal{A}$, where $\mathcal{A}$ is the set of possible attribute values, e.g., $\mathcal{A} = \{\textit{male}, \textit{female}, \textit{other}\}$ for gender. Each dataset entry is a tuple $(u, i, a, e)$, corresponding to user ID, item ID, observed attribute value, and ground-truth explanation. The explanation generator $G_\theta$ is a language model parameterized by $\theta$. Given a user $u$, an item $i$, and observed attribute value $a$, an explanation can be sampled from the generator as $Y \sim G_\theta(u, i \mid A = a)$. The linguistic quality of any explanation $y$ is measured by a function $Q(y)$. Notably, we treat $Q$ as a black-box oracle: a quality measure that can only be queried. This is essential in practice and offers the flexibility to arbitrarily tailor $Q$ based on the fairness requirements of the application. An explanation can be gauged in various ways by customizing $Q$, such as using an explicit function, human evaluation, or a tool provided by authorities. We assume, without loss of generality, that higher $Q$ values represent superior quality. CF on any measure $Q$ of explanations is achieved when, given a user $u$ and an item $i$,

$$
P(Q(Y_{A \leftarrow a}) \mid u, i, a) = P(Q(Y_{A \leftarrow a'}) \mid u, i, a), \tag{1}
$$

where $Y_{A \leftarrow a'} \sim G_\theta(u, i \mid A = a')$ is the explanation generated when we counterfactually assign the value of the user’s protected attribute by $A \leftarrow a'$, $a' \neq a$. The right side of Eq. (1) evaluates the quality distribution of explanations generated had the user’s protected attribute value been $a'$, given that the observed attribute value is $a$ (Kusner et al., 2017; Li et al., 2021b).
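As one concrete instance of the black-box measure $Q$, the feature coverage (FeatCov) metric from Section 1 can be sketched as a function that is only ever queried on a finished explanation. The sketch below is illustrative only: the per-item feature vocabulary, the tokenization, and the name `feat_cov` are assumptions, since the text does not specify how features are extracted or matched.

```python
import re
from typing import Iterable, Set


def feat_cov(explanation: str, item_features: Iterable[str]) -> int:
    """Count the unique item features mentioned in an explanation.

    `item_features` is a hypothetical per-item feature vocabulary.
    """
    # Lowercase word tokens so that matching is case-insensitive.
    tokens: Set[str] = set(re.findall(r"[a-z0-9']+", explanation.lower()))
    vocabulary = {feature.lower() for feature in item_features}
    # Only single-word features are matched in this simplified sketch.
    return len(tokens & vocabulary)


features = ["plot", "acting", "soundtrack", "pacing"]
score = feat_cov("Great acting and a tight plot; the acting really shines.", features)
print(score)
```

Because the framework only queries $Q$, any other scorer with the same call shape (a learned model or a human rating lookup, for example) could be substituted without changing the rest of the pipeline.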

Denote the loss of the generator for a given user $u$ and item $i$ by $\mathcal{L}_{gen}(G_\theta(u, i \mid A = a), e)$, which is typically the negative log-likelihood (NLL) loss or a combination of several losses (Li et al., 2017; Yang et al., 2021; Li et al., 2021a). We consider training the generator for fair explanation generation as a constrained optimization problem:

$$
\begin{aligned}
\min \quad & \mathcal{L}_{gen}(G_\theta(u, i \mid A = a), e) \\
\text{s.t.} \quad & \mathbb{E}_{Y_{A \leftarrow a}}[Q(Y_{A \leftarrow a}) \mid u, i, a] = \mathbb{E}_{Y_{A \leftarrow a'}}[Q(Y_{A \leftarrow a'}) \mid u, i, a]
\end{aligned}
\tag{2}
$$

For ease of presentation, we consider a single user-item pair, and the total loss on a dataset is simply summed over all user-item pairs with the constraint applied to every pair. In this work, we apply the first-order moment of the quality of generated explanations to construct the constraint, and leave the extension to other moment-matching constraints for future work. We further simplify the expression of the constraint as $\mathbb{E}[Q(Y_{A \leftarrow a})] = \mathbb{E}[Q(Y_{A \leftarrow a'})]$.
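The first-moment constraint can be checked empirically by sampling explanations under the observed and the counterfactual attribute value and comparing average quality. The following is a minimal sketch under stated assumptions: `make_toy_generator` is a hypothetical stand-in for $G_\theta$ that simply emits more feature words for one attribute value, and `unique_words` is a toy $Q$; neither comes from the paper.

```python
import random
from statistics import mean
from typing import Callable

# (user, item, attribute value) -> sampled explanation; a stand-in for G_theta.
Generator = Callable[[str, str, str], str]
# Black-box quality oracle Q: explanation -> score.
Quality = Callable[[str], float]


def cf_gap(generate: Generator, quality: Quality, user: str, item: str,
           observed: str, counterfactual: str, n_samples: int = 200) -> float:
    """Monte Carlo estimate of E[Q(Y_{A<-a})] - E[Q(Y_{A<-a'})]."""
    real = [quality(generate(user, item, observed)) for _ in range(n_samples)]
    counter = [quality(generate(user, item, counterfactual)) for _ in range(n_samples)]
    return mean(real) - mean(counter)


def make_toy_generator(bias: int, seed: int = 0) -> Generator:
    """Hypothetical generator that mentions `bias` extra features for 'male'."""
    rng = random.Random(seed)
    pool = ["plot", "acting", "music", "pacing", "visuals"]

    def generate(user: str, item: str, attribute: str) -> str:
        k = rng.randint(1, 3) + (bias if attribute == "male" else 0)
        return " ".join(rng.sample(pool, k))

    return generate


def unique_words(explanation: str) -> float:
    """Toy quality measure: number of distinct words."""
    return float(len(set(explanation.split())))


biased_gap = cf_gap(make_toy_generator(bias=2), unique_words, "u1", "i1", "male", "female")
fair_gap = cf_gap(make_toy_generator(bias=0), unique_words, "u1", "i1", "male", "female")
print(f"biased generator gap: {biased_gap:.2f}")
print(f"fair generator gap: {fair_gap:.2f}")
```

A gap near zero is what the constraint in Eq. (2) asks for; a consistently positive or negative gap indicates that the quality measure depends on the attribute value alone.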

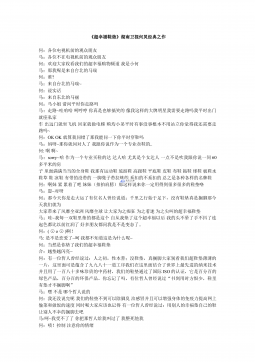

Figure 3: Given a user with attribute value $a$ and a recommended item, COFFEE performs CI by switching the attribute value to $a'$ to get the counterfactual user, which is achieved by disentangled attribute embeddings. Explanations $Y$ for both the real user and the counterfactual user are sampled from the generator and evaluated by $Q$. COFFEE then updates the generator’s parameters $\theta$ by policy learning from the fairness constraint.

4 COFFEE

User and item IDs are conventionally input to the generator for explanation generation, represented as learned embedding vectors (Li et al., 2017; Wang et al., 2018; Li et al., 2021a). However, the user’s protected attribute, entangled with their preference, is implicitly encoded in the representations (Locatello et al., 2019a), hindering its direct manipulation for CI. One way explored for CF in personalization involves removing all information about the protected attribute in representations via discriminators (Zemel et al., 2013; Li et al., 2021b; Wang et al., 2022). Although this improves fairness, it detrimentally affects personalization and eliminates desired characteristics linked to the protected attribute. In contrast, we aim to enforce the independence of the protected attribute from any specified quality measure $Q$ on generated explanations, while preserving explanation content-dependence to sustain high personalization performance.

To enable CI, COFFEE considers a user’s protected attribute value as a separate token input to the model, along with user and item IDs, and learns attribute representations disentangled from the user representation to encapsulate the effect of each attribute value in explanation generation (Locatello et al., 2019a,b; Ma et al., 2019; Zheng et al., 2021). This mirrors methods in controllable text generation (Oraby et al., 2018; Shu et al., 2020; Dathathri et al., 2020), where disentangled attribute tokens are utilized as inputs to modulate the topic, sentiment, or style of generated text. CI is then achieved by altering the attribute token input to the model. Subsequently, we enforce a fairness constraint based on the explanations generated before and after CI, and establish a policy learning method for optimizing this constraint. An illustration of the COFFEE framework is shown in Figure 3.

4.1 Disentangled Attribute Representation

For a given tuple $(u, i, a)$, we denote the representation for the attribute value $a$ as $r_a$, the user’s preference representation (independent from the protected attribute) as $r_u$, and the item representation as $r_i$. The complete user representation is $r_u^a = [r_a, r_u]$. Correspondingly, when performing $A \leftarrow a'$ on user $u$, we change the input attribute token from $a$ to $a'$, and the new user representation becomes $r_u^{A \leftarrow a'} = [r_{a'}, r_u]$. Note that each attribute value has its own representation, which is shared across all users having that same attribute value. For instance, all male users’ attribute representation is the same vector $r_{male}$. We can do the same for item-side attributes as $r_i^a = [r_a, r_i]$.³
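The concatenation $[r_a, r_u]$ and the counterfactual swap of the attribute token can be illustrated with plain lists. This is a toy sketch: the embedding tables, their dimensions, and their values are made up for illustration, whereas in the actual model they would be learned.

```python
from typing import Dict, List

Vector = List[float]

# Hypothetical embedding tables; in a trained model these are learned parameters.
attribute_emb: Dict[str, Vector] = {"male": [1.0, 0.0], "female": [0.0, 1.0]}
user_pref_emb: Dict[str, Vector] = {"u1": [0.3, -0.2, 0.5]}


def user_representation(user: str, attribute: str) -> Vector:
    """Return [r_a, r_u]: the shared attribute vector followed by the preference vector."""
    return attribute_emb[attribute] + user_pref_emb[user]


# Real user (observed attribute) and counterfactual user (A <- a').
real = user_representation("u1", "male")
counterfactual = user_representation("u1", "female")

attr_dim = len(attribute_emb["male"])
print(real[:attr_dim], counterfactual[:attr_dim])  # attribute part differs
print(real[attr_dim:] == counterfactual[attr_dim:])  # preference part is shared
```

Only the attribute slice changes under the intervention, which is what allows the generator to be queried for the same user preferences under a different attribute value.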

Simply separating the user’s protected attribute and preference representations does not guarantee that $r_u$ will not contain any information about the protected attribute, inhibiting the accuracy of CI. To further enforce the disentanglement, we introduce a discriminator $D(r_u)$, and add an adversarial loss on $r_u$ to Eq. (2) as

$$
\begin{aligned}
\min \quad & \mathcal{L}_{gen}(G_\theta(u, i \mid A = a), e) + \lambda_D \log(D(r_u, a)) \\
\text{s.t.} \quad & \mathbb{E}[Q(Y_{A \leftarrow a})] = \mathbb{E}[Q(Y_{A \leftarrow a'})], \ \forall a' \in \mathcal{A}, \ a' \neq a,
\end{aligned}
\tag{3}
$$

where $D(r_u, a)$ is the probability of predicting the correct attribute value $a$. In this way, we adversarially remove the protected attribute information from $r_u$, and enforce $r_a$ to capture all the attribute information. During mini-batch optimization, we alternate between the parameter updates of the model and the discriminator as follows: (1) $X$ batches of updates minimizing the loss in Eq. (3) with $D$ fixed, and (2) $Z$ batches of updates maximizing the loss in Eq. (3) with the generator $G_\theta$ fixed.
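The adversarial term and the alternating schedule can be sketched without any training framework. The code below is an illustration under assumptions: the discriminator is taken to be a linear-softmax classifier over $r_u$ (the text does not state its architecture), and the function names, weights, and the values of $\lambda_D$, $X$ and $Z$ are hypothetical. It computes the objective value only and performs no gradient updates.

```python
import math
from typing import Iterator, Sequence


def log_prob_correct(r_u: Sequence[float], weights: Sequence[Sequence[float]],
                     label: int) -> float:
    """log D(r_u, a) for an assumed linear-softmax discriminator."""
    logits = [sum(w * x for w, x in zip(row, r_u)) for row in weights]
    # Log-sum-exp with max subtraction for numerical stability.
    top = max(logits)
    log_z = top + math.log(sum(math.exp(logit - top) for logit in logits))
    return logits[label] - log_z


def objective(gen_loss: float, r_u: Sequence[float],
              weights: Sequence[Sequence[float]], label: int, lambda_d: float) -> float:
    """Unconstrained part of Eq. (3): L_gen + lambda_D * log D(r_u, a)."""
    return gen_loss + lambda_d * log_prob_correct(r_u, weights, label)


def alternating_schedule(num_batches: int, x: int, z: int) -> Iterator[str]:
    """Yield 'generator' for X batches, then 'discriminator' for Z batches, repeating."""
    for step in range(num_batches):
        yield "generator" if step % (x + z) < x else "discriminator"


r_u = [0.3, -0.2, 0.5]
uninformed = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # discriminator at chance level
print(objective(1.0, r_u, uninformed, label=0, lambda_d=2.0))
print(list(alternating_schedule(5, x=2, z=1)))
```

In the generator phase the objective is minimized, which pushes $\log D(r_u, a)$ down so that $r_u$ carries less attribute information; in the discriminator phase the same quantity is maximized with the generator held fixed.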

³ We can introduce $K \geq 2$ attribute tokens, each mapped to its disentangled representation. Sum instead of concatenation of embeddings can be used when $K$ is large.
