zero everywhere w.l.o.g.,

µ(v) = 0,∀v∈V

, and a kernel function,

k

, that captures the covariance

between different locations. If we have access to

T

measurements, at

VT={vt}T

t=1

perturbed by

i.i.d. Gaussian noise,

yT={q(vt) + ηt}T

t=1

with

ηt∼ N (0, σ2)

, we can compute the posterior mean

and covariance over the constraint at unseen locations

v

,

v0

as

µT(v) = k>

T(v)(KT+σ2I)−1yT

and

kt(v, v0) = k(v, v0)−k>

T(v)(KT+σ2I)−1kT(v0)

, where

kT(v) = [k(v1, v), ..., k(vT, v)]>, KT

is the positive definite kernel matrix [k(v, v0)]v,v0∈VTand I∈RT×Tdenotes the identity matrix.

In this work, we make the same assumptions about the safe seed and the regularity of qand ρ.

Approximations of the feasible set

. Based on the GP posterior above, GOOSE builds monotonic con-

fidence intervals for the constraint at each iteration

t

as

lq

t(v):= max{lq

t−1(v), µq

t−1(v)−βq

tσq

t−1(v)}

and

uq

t(v):= min{uq

t−1(v), µq

t−1(v) + βq

tσq

t−1(v)}

, which contain the true constraint function for

every

v∈V

and

t≥1

, with high probability if

βq

t

is selected as in [

18

] or Section 5. GOOSE uses

these confidence intervals within a set

S⊆V

together with the

Lq

-Lipschitz continuity of

q

, to

define operators that determine which locations are safe in plausible worst- and best-case scenarios,

pt(S) = {v∈V, |∃z∈S:lq

t(z)−Lqd(v, z)≥0},(3)

oq

t(S) = {v∈V, |∃z∈S:uq

t(z)−q−Lqd(v, z)≥0}.(4)

Notice that the pessimistic operator relies on the lower bound,

lq

, while the optimistic one on the

upper bound,

uq

. Moreover, the optimistic one uses a margin

q

to exclude "barely" safe locations

as the agent might get stuck learning about them. Finally, to disregard locations the agent could

not safely reach or from where it could not safely return, GOOSE introduces the

Rergodic(·,·)

operator.

Rergodic(pt(S), S)

indicates locations in

S

or locations in

pt(S)

reachable from

S

and from where the

agent can return to

S

along a path contained in

pt(S)

. Combining

pt(S)

and

Rergodic(·,·)

,GOOSE

defines the pessimistic and ergodic operator

˜

Pt(·)

, which it uses to update the pessimistic safe set.

Similarly, it defines ˜

Ot(·)using oq

t(·)to compute the optimistic safe set.

4MACOPT and SAFEMAC

This section presents MACOPT and SAFEMAC, our algorithms for unconstrained and safety-constrained

multi-agent coverage control, which we then formally analyze in Section 5.

4.1 MACOPT: unconstrained multi-agent coverage control

Greedy sensing regions

. In sequential optimization, it is crucial to balance exploration and exploita-

tion. GP-UCB [

19

] is a theoretically sound strategy to strike such a trade-off that works well in

practice. Agents evaluate the objective at locations that maximize an upper confidence bound over the

objective given by the GP model such that locations with either a high posterior mean (exploitation) or

standard deviation (exploration) are visited. We construct a valid upper confidence bound for the cov-

erage

F(X)

starting from our confidence intervals on

ρ

, by replacing the true density

ρ

with its upper

bound

uρ

t

in Eq. (1). Next, we apply the greedy algorithm to this upper bound (Line 3 of Algorithm 1)

to select

N

candidate locations for evaluating the density. However, this simple exploration strategy

may perform poorly, due to the fact that in order to reduce the uncertainty over the coverage

F

at

X

,

we must learn the density

ρ

at all locations inside the sensing region,

SN

i=1 Di

, rather than simply at

X

.

It is a form of partial monitoring [

20

], where the objective

F

differs from the quantity we measure, i.e.,

the density

ρ

. Next, we explain how to choose locations where to observe the density for a given

X

.

Uncertainty sampling

. Given location assignments

X

for the agents, we measure the density to

efficiently learn the function

F(X)

. Intuitively, agent

i

observes the density where it’s most uncertain

within the area it covers that is not covered by agents

{1,...i−1}

, i.e.,

Di−

t

(Line 4 of Alg. 2, Fig. 2a).

Stopping criterion

. The algorithm terminates when a near-optimal solution is achieved. Intuitively,

this occurs when the uncertainty about the coverage value of the greedy recommendation is low. For-

mally, we require the sum of the uncertainties over the sampling targets to be below a threshold, i.e. ,

wt=PN

i=1 uρ

t−1(xg,i

t)−lρ

t−1(xg,i

t)≤ρ

(Line 3 of Algorithm 2). Importantly, this stopping criterion

requires the confidence intervals to shrink only at regions that potentially maximize the coverage.

MACOPT

. Now, we introduce MACOPT in Algorithm 2. At round

t

, we select the sensing locations

for the agents,

Xt

, by greedily optimizing the upper confidence bound of the coverage. Then, each

4

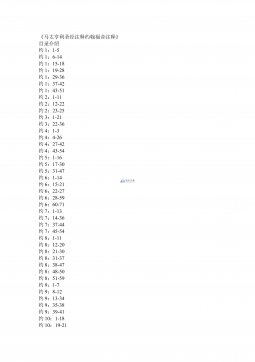

2024-12-10 56

2024-12-10 56

2024-12-10 84

2024-12-10 84

2024-12-26 163

2024-12-26 163

2024-12-26 422

2024-12-26 422

2024-12-26 283

2024-12-26 283

2024-12-26 388

2024-12-26 388

2025-08-09 237

2025-08-09 237

2025-08-09 61

2025-08-09 61

2025-08-18 12

2025-08-18 12

2025-11-20 47

2025-11-20 47

渝公网安备50010702506394

渝公网安备50010702506394