Tag 1 Tag 2 Tag 3 Tag 4 Tag 1 TAG2 TAG 3 TAG4 Tag 1 Tag 2 Tag 3 Tag 4

(a) (b) (c)

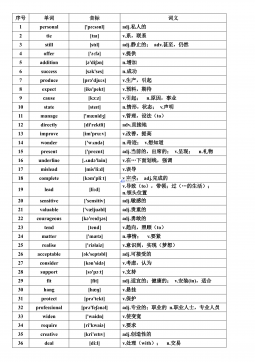

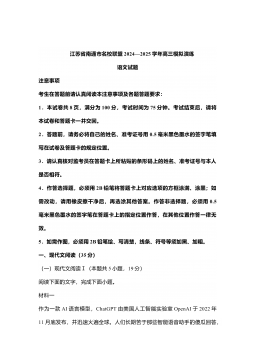

Fig. 1. (a) A toy example with 6 objects {si}6

i=1, and their partition into 2 clusters C1and C2. Tag set Tcontains 4 tags. Each object siis associated a subset

ti⊂T. In this example, t1={TAG 1,TAG 2},t2={TAG 1},t3={TAG 1},t4={TAG 2,TAG 3,TAG 4},t5={TAG 3},t6={TAG 3}. One

solution for DTDM is T1={TAG 1}, T2={TAG 3}. (b) Optimal solution for DTDM and MinConCD is T1={TAG 1}, T2={TAG 3}, however both

tags are generic and do not reveal specific information for each cluster, reducing the usefulness of the descriptors. (c) If select T1={TAG 1,TAG 2}, T2=

{TAG 3,TAG 4}as descriptors, they explain each cluster better albeit using more tags.

covered can sometimes be too strict, making even finding

a feasible solution very challenging. To address this issue,

Davidson et al. also discussed several variants of DTDM.

One such variant is referred to as the “cover-or-forget” ver-

sion, which relaxes the constraint that all objects must be

covered and allows ignoring some of the objects. Recently,

Sambaturu et al. [2], have pointed out that the “cover-or-

forget” variant does resolve the feasibility issue but might

have highly unbalanced coverage, i.e., for some clusters, the

descriptors can cover most of the objects, but for some cluster,

the descriptor might cover very few objects. The authors

proposed to address this issue by adding constraints on the

number of objects needed to be covered for each cluster, i.e.,

introduce additional parameter M`for each cluster C`, such

that the number of objects that are covered must be at least

M`. The extended cluster description formulation with more

balanced coverage guarantees for all clusters is referred to

as the minimum constrained cluster description (MinConCD)

problem. The DTDM is therefore a special case of MinConCD

when M`=|C`|for 1≤`≤k. However, we argue that this

model has another issue. Consider the following scenario: in

many datasets, we might have several tags that cover most

of the objects. For example, it has been noticed multiple

times that depending on the partially extracted information,

the Twitter hashtags may follow Zipf or approximate power

laws [3], [4]. If we only focus on minimizing the total number

of tags while satisfying the coverage constraints, the most

frequently used tags will be selected preferably. Figure 1(b)

shows such scenario, while the optimal solution uses only 2

tags. These tags might generally describe the entire dataset,

they do not reveal specific information about the cluster they

are assigned to. The descriptors of the clusters will therefore be

similar to each other, reducing the usefulness of the results. In

contrast, we wish to select the tags that reveal the information

of each cluster albeit potentially using more tags, as in the

scenario displayed in Figure 1(c).

To address this issue, we propose to further reinforce the

explainability formulations of MinConCD by adding an addi-

tional metric to the objective function which will fundamen-

tally change the outcomes. The novel metric, which we call

tag modularity (TM), is inspired by the notion of modularity

[5] in network science. Modularity is a measure of the graph

structure which quantifies the strengths of the division of a

graph into clusters. A clustering with high modularity tends to

have dense connections between the nodes within clusters but

sparse connections between nodes in different clusters. Quanti-

tatively, the modularity on graphs measures a deviation of node

clusters from a random model. Maximizing the modularity

naturally punishes trivial clusterings which are practically

useless. Intuitively, by considering both the total number of

tags used for the descriptors and the connections between the

tags and the objects, we can better identify the cluster-specific

tags and filter out the general tags, making the descriptor

more interpretable and thus improving the explainability of

the descriptors.

Both DTDM and MinConCD are challenging combinatorial

optimization problems. Davidson et al. [1] showed that even

for two clusters, finding only a feasible solution of DTDM

is intractable not to mention the optimality. Sambaturu et

al. [2] showed that if the coverage constraints in MinConCD

for each cluster must be met, then unless P=NP , for

any ρ≥1, there is no polynomial-time algorithm that can

approximate the objective function value with a factor of ρ.

Typically, in order to tackle such challenging optimization

models, the problems are formulated in terms of integer linear

programming (ILP), as proposed in the works of Davidson

et al. and Sambaturu et al. Then, to tackle the computational

intractability of such problems, relaxations on the constraints

or approximation algorithms are applied.

We explore the way to deal with the hardness of such

problems from the optimization accelerator systems’ angle

whose recent advent opens new opportunities for the data

mining community. As we are approaching the end of Moore’s

law [6] due to the physical limitations, major efforts from the

2024-12-06 5

2024-12-06 5

2024-12-06 16

2024-12-06 16

2024-12-06 30

2024-12-06 30

2024-12-06 27

2024-12-06 27

2024-12-06 33

2024-12-06 33

2024-12-06 14

2024-12-06 14

2024-12-06 38

2024-12-06 38

2024-12-06 20

2024-12-06 20

2024-12-06 53

2024-12-06 53

2024-12-06 33

2024-12-06 33

渝公网安备50010702506394

渝公网安备50010702506394