Enrichment Score: a better quantitative metric for evaluating the enrichment capacity of molecular docking models

Ian Scott Knight (ian.knight@ucsf.edu), Slava Naprienko (naprienko@stanford.edu), John J. Irwin (john.irwin@ucsf.edu)

October 2022

Abstract

The standard quantitative metric for evaluating enrichment capacity, known as LogAUC, depends on...

相关推荐

-

网络营销技巧分享VIP免费

2025-02-28 8

2025-02-28 8 -

最系统销售培训资料VIP免费

2025-02-28 6

2025-02-28 6 -

最系统的房地产销售培训资料VIP免费

2025-02-28 6

2025-02-28 6 -

资深业务人员的谈判技巧VIP免费

2025-02-28 5

2025-02-28 5 -

珠宝终端店销售培训VIP免费

2025-02-28 6

2025-02-28 6 -

中国移动客服亲和力电话营销培训VIP免费

2025-02-28 5

2025-02-28 5 -

医药代表专业销售技巧培训VIP免费

2025-02-28 4

2025-02-28 4 -

医药代表销售技巧高级培训VIP免费

2025-02-28 7

2025-02-28 7 -

医药代表培训宝典(最新)VIP免费

2025-02-28 7

2025-02-28 7 -

新入职大学生培训方案全套VIP免费

2025-02-28 6

2025-02-28 6

作者详情

相关内容

-

淘宝直播红人经纪合同-9页

分类:人力资源/企业管理

时间:2025-06-11

标签:无

格式:DOC

价格:10 玖币

-

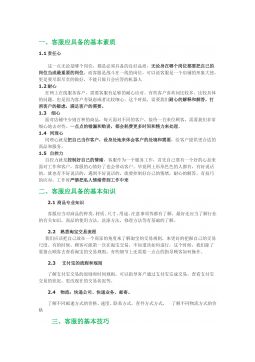

淘宝在线客服培训资料【精华整理版】-10页

分类:人力资源/企业管理

时间:2025-06-11

标签:无

格式:DOC

价格:10 玖币

-

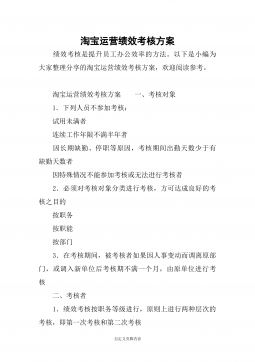

淘宝运营绩效考核方案-8页

分类:人力资源/企业管理

时间:2025-06-11

标签:无

格式:DOCX

价格:10 玖币

-

淘宝运营方案-11页

分类:人力资源/企业管理

时间:2025-06-11

标签:无

格式:DOCX

价格:10 玖币

-

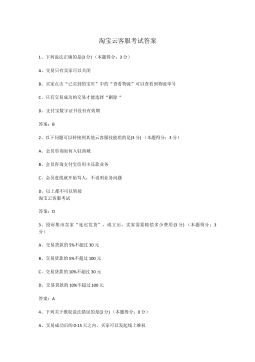

淘宝云客服考试答案-7页

分类:人力资源/企业管理

时间:2025-06-11

标签:无

格式:DOCX

价格:10 玖币

渝公网安备50010702506394

渝公网安备50010702506394