Other work studies learning from diverse demonstrations. Several approaches [7, 8, 9, 22, 23] model the multimodality of diverse demonstrations directly, usually by explicitly handling each mode of the data. Unfortunately, these approaches fail in the sequential, interactive imitation learning setting that we study, where we gradually introduce new modes and retrain after each collection. A separate line of work tackles learning with suboptimal data through the use of offline reinforcement learning [12, 13, 14], filtered imitation [11, 15, 16, 17], or inverse reinforcement learning [24, 25, 26]. These methods use extra reward annotations for each demonstration, and generally try to reweight or selectively remove suboptimal data. Unlike these approaches, this work tackles the root cause of the problem: how to collect compatible demonstrations from humans in the first place, removing the need to filter out tens to hundreds of painstakingly collected demonstrations.

Interactive Imitation Learning. Most of the work in interactive imitation learning attempts to address the problem of covariate shift endemic to policies trained via behavioral cloning. Ross et al. [1] introduce DAgger, a method for iteratively collecting demonstrations by interactively relabeling the states visited by a policy, teaching the policy to recover. Later work builds on DAgger, primarily focusing on reducing the number of expert labels requested during training. These variants identify different measures, such as safety and uncertainty, for deciding when to query the expert, limiting the amount of supervision a user needs to provide [2, 20, 27, 28, 29, 30]. The focus of these works is on learning from a single user rather than from multiple users demonstrating heterogeneous behaviors. Our proposed approach presents a novel interactive imitation learning method that learns to actively elicit demonstrations from a pool of multiple users during the course of data collection, with the express goal of guiding users towards compatible, optimal demonstrations.
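For readers unfamiliar with the relabeling loop that DAgger-style methods share, the following is a minimal sketch on a toy one-dimensional problem. The dynamics `s' = s + a`, the helper names (`fit_linear`, `rollout`, `dagger`), and the linear policy are all assumptions made for illustration. The sketch also omits the expert/learner mixing used in the original DAgger formulation, so it should be read as a simplified variant rather than the published algorithm.

```python
import random
from typing import Callable, List, Tuple

Pair = Tuple[float, float]  # (state, expert action)


def fit_linear(data: List[Pair]) -> Callable[[float], float]:
    """Least-squares fit of a = k * s; a stand-in for behavioral cloning."""
    num = sum(s * a for s, a in data)
    den = sum(s * s for s, _ in data)
    k = num / den if den > 0 else 0.0
    return lambda s: k * s


def rollout(policy: Callable[[float], float], start: float, horizon: int) -> List[float]:
    """Run the learner's own policy and record the states it visits."""
    states, s = [], start
    for _ in range(horizon):
        states.append(s)
        s = s + policy(s)  # toy dynamics (assumption): s' = s + a
    return states


def dagger(expert: Callable[[float], float], initial_data: List[Pair],
           iterations: int = 5, horizon: int = 10, seed: int = 0):
    rng = random.Random(seed)
    data = list(initial_data)
    policy = fit_linear(data)
    for _ in range(iterations):
        # 1) Visit states under the current learner policy.
        visited = rollout(policy, rng.uniform(-1.0, 1.0), horizon)
        # 2) Ask the expert to relabel those states with the correct action.
        data.extend((s, expert(s)) for s in visited)
        # 3) Retrain on the aggregated dataset.
        policy = fit_linear(data)
    return policy, data
```

The key property is that labels are collected on states the learner itself reaches, which is how this family of methods addresses covariate shift.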

3 Problem Setting

We consider sequential decision making tasks, modeled as a Markov Decision Process (MDP) with the following components: the state space $\mathcal{S}$, action space $\mathcal{A}$, transition function $\mathcal{T}$, reward function $R$, and discount factor $\gamma$. In this work, we assume sparse rewards that are provided only on task completion.

A state $s = (o_{\text{grounded}}, o_{\text{proprio}}) \in \mathcal{S}$ consists of a grounded observation (either coordinates/poses of objects in the environment, or an RGB visual observation) and the robot's proprioceptive state. An action $a \in \mathcal{A}$ is a continuous vector of robot joint actions. We assume access to a small dataset of trajectories $\mathcal{D}_{\text{base}} = \{\tau_1, \tau_2, \ldots, \tau_N\}$, where each trajectory $\tau_i$ is a sequence of states and actions, $\tau_i = \{(s_1, a_1), \ldots, (s_T, a_T)\}$. We train an initial policy $\pi_{\text{base}}$ on this dataset via behavioral cloning.
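To make the data layout concrete, the sketch below represents a state as a pair of grounded and proprioceptive observations, a trajectory as a list of state-action pairs, and behavioral cloning as supervised regression from states to actions. The `State` and `LinearPolicy` classes, the squared-error loss, and the plain SGD loop are illustrative assumptions; the text does not specify the policy architecture or training objective.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class State:
    grounded: Tuple[float, ...]  # o_grounded: object coordinates/poses (or image features)
    proprio: Tuple[float, ...]   # o_proprio: robot proprioceptive state

    def features(self) -> List[float]:
        return list(self.grounded) + list(self.proprio)


Action = Tuple[float, ...]
Trajectory = List[Tuple[State, Action]]  # [(s_1, a_1), ..., (s_T, a_T)]


class LinearPolicy:
    """Hypothetical deterministic policy a = W x + b, for illustration only."""

    def __init__(self, state_dim: int, action_dim: int):
        self.W = [[0.0] * state_dim for _ in range(action_dim)]
        self.b = [0.0] * action_dim

    def act(self, state: State) -> List[float]:
        x = state.features()
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(self.W, self.b)]


def behavioral_cloning(dataset: List[Trajectory], state_dim: int, action_dim: int,
                       lr: float = 0.05, epochs: int = 500) -> LinearPolicy:
    """Fit the policy to demonstrated actions by SGD on squared error."""
    policy = LinearPolicy(state_dim, action_dim)
    pairs = [pair for traj in dataset for pair in traj]  # flatten D_base
    for _ in range(epochs):
        for state, action in pairs:
            x = state.features()
            pred = policy.act(state)
            for k in range(action_dim):
                err = pred[k] - action[k]
                for j in range(state_dim):
                    policy.W[k][j] -= lr * err * x[j]
                policy.b[k] -= lr * err
    return policy
```

Flattening the trajectories into independent state-action pairs is what makes behavioral cloning a standard supervised learning problem.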

Our approach has two components: 1) developing a measure of the compatibility of a new demonstration with an existing base policy, and 2) building a method for actively eliciting compatible demonstrations from users. For the first component, we learn a measure $M(\mathcal{D}_{\text{base}}, (s_{\text{new}}, a_{\text{new}})) \in [0, 1]$ that defines compatibility at the granularity of a state-action pair. For the second component, we use our fine-grained measure $M$ to provide rich feedback to users about their demonstrations, in addition to deciding whether to accept or reject a new demonstration $\tau_{\text{new}}$. The new demonstrations the user collects make up $\mathcal{D}_{\text{new}}$; after each collection step, we train a new policy $\pi_{\text{new}}$ on $\mathcal{D}_{\text{base}} \cup \mathcal{D}_{\text{new}}$.
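As a rough illustration of how a per-pair score in $[0, 1]$ can drive both user feedback and an accept/reject decision, consider the sketch below. The scoring rule here is a placeholder, not the measure derived in this paper: it assumes the base policy predicts a mean action and scores a demonstrated action by an unnormalized Gaussian likelihood around that prediction. The names `compatibility` and `review_demonstration`, the bandwidth `sigma`, and the mean-score acceptance `threshold` are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Step = Tuple[Sequence[float], Sequence[float]]  # (state features, action)
Policy = Callable[[Sequence[float]], Sequence[float]]


def compatibility(base_policy: Policy, state: Sequence[float],
                  action: Sequence[float], sigma: float = 0.5) -> float:
    """Placeholder score in [0, 1]: 1 when the action matches the base policy."""
    predicted = base_policy(state)
    sq_dist = sum((p - a) ** 2 for p, a in zip(predicted, action))
    return math.exp(-sq_dist / (2 * sigma ** 2))


def review_demonstration(base_policy: Policy, trajectory: List[Step],
                         threshold: float = 0.6, sigma: float = 0.5):
    """Return (accept, per-step scores, indices of low-scoring steps)."""
    if not trajectory:
        raise ValueError("trajectory must contain at least one step")
    scores = [compatibility(base_policy, s, a, sigma) for s, a in trajectory]
    accept = sum(scores) / len(scores) >= threshold  # trajectory-level decision
    flagged = [t for t, m in enumerate(scores) if m < threshold]  # user feedback
    return accept, scores, flagged
```

Because the score is computed per state-action pair, the flagged indices can point a user to the specific part of a demonstration that deviates, even when the trajectory as a whole is accepted.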

Under our definition of compatibility (and the measure $M$ we derive), we hope to guide users to provide new datasets $\mathcal{D}_{\text{new}}$ such that $\pi_{\text{new}}$ will have as high an expected return as possible and, at a minimum, a higher expected return than $\pi_{\text{base}}$. In other words, our definition of compatibility asks that new data should only help, not hurt, performance relative to the initial set.
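One way to state this requirement explicitly, using the MDP components from the problem setting, is through the standard discounted expected return. The symbol $J$ and the expectation over trajectories generated by rolling out a policy are notational assumptions introduced here for illustration; $T$ denotes the trajectory length.

```latex
J(\pi) = \mathbb{E}_{\tau \sim \pi}\left[\sum_{t=1}^{T} \gamma^{t-1} R(s_t, a_t)\right],
\qquad
J(\pi_{\text{new}}) \;\geq\; J(\pi_{\text{base}})
```

Under the sparse-reward assumption, the sum has a single nonzero term, received when the task is completed. Reading "higher" literally would make the inequality strict.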

4 Learning to Measure Compatibility in Multi-Human Demonstrations

We first derive a general compatibility measure $M$ given a base set of demonstrations, then evaluate our measure through a series of case studies grounded in real, user-provided demonstration data.

4.1 Estimating Compatibility and Identifying Good Demonstrations

An idealized compatibility measure $M$ has one role: estimating the performance of the policy obtained when $\pi_{\text{base}}$ is retrained on the union of a known base dataset $\mathcal{D}_{\text{base}}$ and a new dataset $\mathcal{D}_{\text{new}}$. Crucially, $M$ needs to operate at a granular level (ideally at the level of individual state-action pairs), without incurring the cost of retraining and evaluating $\pi_{\text{base}}$ on the new dataset. Phrased this way, there is a clear connection to pool-based active learning [31], informing the choice of plausible metrics that could help predict downstream success. While many metrics could work, we choose two easy-to-compute metrics that lend themselves well to interpretability: the likelihood of actions $a_{\text{new}}$ in $\mathcal{D}_{\text{new}}$ under $\pi_{\text{base}}$, measured


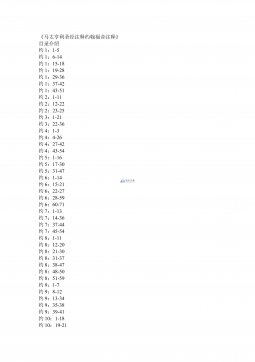
