

On Robust Incremental Learning over Many Multilingual Steps

Karan Praharaj and Irina Matveeva

Reveal

Chicago, IL

{kpraharaj,imatveeva}@revealdata.com

Abstract

Recent work in incremental learning has introduced diverse approaches to tackle catastrophic forgetting, ranging from data augmentation to optimized training regimes. However, most of them focus on very few training steps. We propose a method for robust incremental learning over dozens of fine-tuning steps using data from a variety of languages. We show that a combination of data augmentation and an optimized training regime allows us to continue improving the model even for as many as fifty training steps. Crucially, our augmentation strategy does not require retaining access to previous training data and is suitable in scenarios with privacy constraints.

1 Introduction

Incremental learning is a common scenario for practical applications of deep language models. In such applications, training data is expected to arrive in batches rather than all at once, so incremental updates to the model are preferred over retraining it from scratch every time new training data becomes available, because they save time and computational resources. When multilingual models are deployed in applications, they are expected to deliver good performance on data across multiple languages and domains. It is therefore desirable that the model keep acquiring new knowledge from incoming training data in different languages while preserving its ability on languages it was trained on in the past. Ideally, the model should keep improving over time or, at the very least, its performance on any of its languages should not deteriorate over the incremental learning lifecycle.

It is known that incremental fine-tuning with

data in different languages leads to catastrophic

forgetting (French,1999;Mccloskey and Cohen,

1989) of languages that were fine-tuned in the past

(Liu et al.,2021b;Vu et al.,2022). This means that

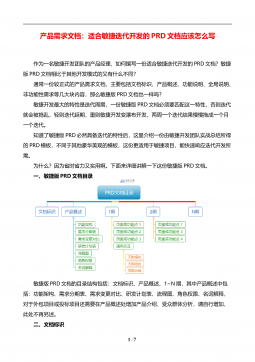

Figure 1: Translation augmented sequential fine-tuning

approach with LLRD-enabled training. We begin with

a pre-trained multilingual model M0and fine-tune it

over multiple stages to obtain (Miwhere i= 0...N).

At each fine-tuning stage, we train the model Miover

examples from the training set that is available at that

stage (Di) which is in language Lj. At each step, we

sample a small random subset from Diand translate

that sample into languages (L \ Lj) to create a set of

translated examples Ti. Each step of training includes

LLRD as a hyperparameter.

the performance on previously fine-tuned tasks or

languages decreases after training on a new task or

language. Multiple strategies have been proposed

to mitigate catastrophic forgetting. Data-focused

strategies such as augmentation and episodic mem-

ories (Hayes et al.,2019;Chaudhry et al.,2019b;

Lopez-Paz and Ranzato,2017), entail maintaining

a cache of a subset of examples from previous train-

ing data, which are mixed with new examples from

the current training data. The network is subse-

quently fine-tuned over this mixture as a whole, in

order to help the model "refresh" its "memory" of

prior information so that it can leverage previous

experience to transfer knowledge to future tasks.
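For contrast with the approach in this paper, the cache-based strategy described above can be sketched as follows. This is a minimal illustration, not the method of any of the cited works: the class `EpisodicMemory` and its methods are hypothetical, and reservoir sampling is assumed as the rule for deciding which past examples stay in the fixed-size cache. This retained cache is exactly the kind of stored training data that the privacy constraint considered in this paper rules out.

```python
import random
from typing import Generic, List, TypeVar

T = TypeVar("T")


class EpisodicMemory(Generic[T]):
    """Fixed-size cache of past training examples (hypothetical helper).

    Uses reservoir sampling (an assumption): once more than `capacity`
    examples have been offered, each of the n examples seen so far is in
    the cache with probability capacity / n.
    """

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.capacity = capacity
        self.items: List[T] = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, example: T) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Keep the new example with probability capacity / seen,
            # overwriting a uniformly chosen slot.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = example

    def mix(self, current: List[T], k: int) -> List[T]:
        """Current-step examples followed by up to k replayed past examples."""
        replay = self.rng.sample(self.items, min(k, len(self.items)))
        return list(current) + replay


# Cache examples from an earlier step, then replay them in the next one.
memory: EpisodicMemory[str] = EpisodicMemory(capacity=3)
for ex in ["en-1", "en-2", "en-3", "en-4", "en-5"]:
    memory.add(ex)
batch = memory.mix(["de-1", "de-2"], k=2)
print(len(memory.items), len(batch))  # 3 4
```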

Closely related to our current work is the work by M’hamdi et al. (2022) and Ozler et al. (2020) on understanding the effect of incrementally fine-tuning models with multilingual data. They suggest that joint fine-tuning is the best way to mitigate the tendency of cross-lingual language models to erase previously acquired knowledge. In other words, their results show that joint fine-tuning should be used instead of incremental fine-tuning whenever possible.

arXiv:2210.14307v1 [cs.CL] 25 Oct 2022

Optimization-focused strategies (Mirzadeh et al., 2020; Kirkpatrick et al., 2017) target the training regime and show that techniques such as dropout, large learning rates with decay, and shrinking the batch size can create training regimes that result in more stable models.

Translation augmentation has also been shown to be an effective technique for improving performance. Wang et al. (2018), Fadaee et al. (2017), Liu et al. (2021a), and Xia et al. (2019) use various translation augmentation strategies and show substantial improvements in performance. Encouraged by these gains, we adopt translation as our data augmentation strategy.

In our analysis, we consider an additional constraint that affects our choice of data augmentation strategies: data that has already been used for training cannot be accessed again at a future time step. Privacy is an important consideration for continuously deployed models in corporate applications and similar scenarios, and privacy protocols often limit access to each tranche of additional fine-tuning data to the current training time step. Under such constraints, joint fine-tuning or maintaining a cache as in Chaudhry et al. (2019a) and Lopez-Paz and Ranzato (2017) is infeasible. We therefore use translation augmentation as a way to improve cross-lingual generalization over a large number of fine-tuning steps without storing previous data.

In this paper we present a novel translation-augmented sequential fine-tuning approach that mixes in translated data at each step of sequential fine-tuning and makes use of a special training regime. Our approach minimizes the effects of catastrophic forgetting and of interference between languages. The results show that for incremental learning over dozens of training steps, the baseline approaches suffer from catastrophic forgetting: it may take multiple steps to reach this point, but their performance eventually collapses.
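The procedure summarized in Figure 1 can be sketched as a simple loop. This is a minimal sketch under stated assumptions, not the authors' implementation: `translate` and `train_step` are hypothetical callables standing in for a machine translation system and for one fine-tuning stage of M_i, examples are assumed to be (text, label) pairs whose labels carry over unchanged through translation, and LLRD and other training-regime details are assumed to live inside `train_step`.

```python
import random
from typing import Callable, Iterable, List, Sequence, Tuple

Example = Tuple[str, int]  # (text, label)


def translation_augmented_fine_tuning(
    stream: Iterable[Tuple[str, List[Example]]],
    languages: Sequence[str],
    translate: Callable[[str, str, str], str],
    train_step: Callable[[List[Example]], None],
    sample_size: int,
    seed: int = 0,
) -> int:
    """Run one fine-tuning step per (language L_j, tranche D_i) in `stream`.

    `translate(text, src, tgt)` and `train_step(batch)` are hypothetical
    hooks for an MT system and for fine-tuning the current model on a batch.
    Returns the number of steps run.
    """
    rng = random.Random(seed)
    steps = 0
    for lang, d_i in stream:
        # Small random subset of the current tranche D_i.
        subset = rng.sample(d_i, min(sample_size, len(d_i)))
        # T_i: the subset translated into every other language (L minus L_j);
        # labels are carried over unchanged (an assumption).
        t_i = [
            (translate(text, lang, tgt), label)
            for text, label in subset
            for tgt in languages
            if tgt != lang
        ]
        train_step(d_i + t_i)
        steps += 1
        # No cache: D_i is never stored for use in a later step.
    return steps


# Toy run with a fake "translator" that just tags the target language.
sizes: List[int] = []
n = translation_augmented_fine_tuning(
    stream=[("en", [("a", 0), ("b", 1), ("c", 0)]), ("de", [("x", 1), ("y", 0)])],
    languages=["en", "de", "fr"],
    translate=lambda text, src, tgt: f"[{tgt}] {text}",
    train_step=lambda batch: sizes.append(len(batch)),
    sample_size=2,
)
print(n, sizes)  # 2 [7, 6]
```

The design point the sketch is meant to show is that each tranche D_i is visible only inside its own iteration: the translated subset T_i spreads some of the current tranche's content into the other languages at training time, instead of storing D_i for replay later.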

The main contribution of our work is combining data augmentation with adjustments to the training regime and evaluating this approach over a sequence of 50 incremental fine-tuning steps. The training regime ensures that incremental fine-tuning with translation augmentation is robust without access to previous data. We show that our model performs well, surpassing the baseline across multiple evaluation metrics. To the best of our knowledge, this is the first work to provide a multi-stage cross-lingual analysis of incremental learning over a large number of fine-tuning steps in which languages recur.
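Figure 1 lists LLRD, commonly expanded as layer-wise learning rate decay, as part of each training step. The exact schedule used by the authors is not given in this section, so the sketch below assumes the common geometric form: the top layer gets a base learning rate and each lower layer gets that rate multiplied by a further decay factor. The function name and all numeric values are hypothetical.

```python
from typing import List


def llrd_learning_rates(num_layers: int, base_lr: float, decay: float) -> List[float]:
    """Learning rate for each layer, index 0 = lowest (closest to the input).

    The top layer (index num_layers - 1) gets base_lr, and every step down
    multiplies the rate by `decay`, so with decay < 1 lower layers are
    updated more conservatively than upper ones.
    """
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay should be in (0, 1]")
    return [base_lr * decay ** (num_layers - 1 - layer) for layer in range(num_layers)]


# Hypothetical values: a 4-layer encoder, top-layer LR 2e-5, decay 0.9.
rates = llrd_learning_rates(num_layers=4, base_lr=2e-5, decay=0.9)
for layer, lr in enumerate(rates):
    print(f"layer {layer}: lr={lr:.3e}")
```

In PyTorch, each of these rates would typically be attached to one layer's parameters as a separate optimizer parameter group (a dict with `params` and `lr` keys).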

2 Related Work

Our work fits into the area of incremental learning in cross-lingual settings. M’hamdi et al. (2022) is the closest work to our research. The authors compare several cross-lingual incremental learning methods and provide evaluation measures for model quality after each sequential fine-tuning step. They show that combining the data from all languages and fine-tuning the model jointly is more beneficial than sequential fine-tuning on each language individually. We use some of their evaluation protocols, but our constraints differ: we do not keep the data from previous sequential fine-tuning steps, and we do not control the sequence of languages. In addition, they consider only six hops of incremental fine-tuning, whereas we are interested in dozens of steps. Ozler et al. (2020) do not perform a cross-lingual analysis but study a scenario closely related to ours. Their findings are in line with those of M’hamdi et al. (2022): combining data from different domains into one training set for fine-tuning performs better than fine-tuning on each domain separately. However, this type of joint fine-tuning is ruled out in our scenario, where we assume that previous training data is not accessible, so we focus exclusively on sequential fine-tuning.
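M’hamdi et al. (2022) define their own evaluation measures, which this section does not spell out. As an assumption, the sketch below uses a forgetting measure that is common in the continual-learning literature, to make concrete what "performance on previously fine-tuned languages decreases" means when scores are recorded after every step. It assumes every language is scored after every step; the function name and the numbers are hypothetical.

```python
from typing import List, Sequence


def average_forgetting(scores: Sequence[Sequence[float]]) -> float:
    """Average forgetting after the final step.

    scores[t][l] is the score on language l measured after fine-tuning
    step t. For each language, forgetting is its best score at any earlier
    step minus its score after the last step; the result is the mean over
    languages. Positive values mean performance was lost.
    """
    if len(scores) < 2:
        raise ValueError("need scores from at least two steps")
    final = scores[-1]
    drops: List[float] = []
    for lang in range(len(final)):
        best_before = max(row[lang] for row in scores[:-1])
        drops.append(best_before - final[lang])
    return sum(drops) / len(drops)


# Hypothetical scores for two languages over three steps.
history = [
    [0.80, 0.50],
    [0.70, 0.75],
    [0.60, 0.70],
]
print(round(average_forgetting(history), 3))  # 0.125
```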

Mirzadeh et al. (2020) study the impact of various training regimes on forgetting mitigation. Their study focuses on learning rates, batch size, and regularization methods. Like ours, this work shows that applying learning rate decay plays a significant role in reducing catastrophic forgetting. However, it is important to point out that our type of decay differs from theirs. Mirzadeh et al. (2020) start with a high initial learning rate for the first task to obtain wide and stable minima. Then, for each
