NAS-based Recursive Stage Partial Network (RSPNet) for Light-Weight Semantic Segmentation

Anonymous Authors

Abstract

Current NAS-based semantic segmentation methods focus on accuracy improvements rather than light-weight design. In this paper, we propose a two-stage framework to design our NAS-based RSPNet model for light-weight semantic segmentation. The first architecture search determines the inner cell structure, and the second architecture search considers exponentially growing paths to finalize the outer structure of the network. It has been shown in the literature that the fusion of high- and low-resolution feature maps produces stronger representations. To find the expected macro structure without manual design, we adopt a new path-attention mechanism to efficiently search for suitable paths to fuse useful information for better segmentation. Our search for repeatable micro-structures from cells leads to a superior network architecture in semantic segmentation. In addition, we propose an RSP (Recursive Stage Partial) architecture to search for a light-weight design for NAS-based semantic segmentation. The proposed architecture is so efficient, simple, and effective that both the macro- and micro-structure searches can be completed in five days of computation on two V100 GPUs. The light-weight NAS architecture, with only 1/4 of the parameter size of SoTA architectures, achieves SoTA performance on semantic segmentation on the Cityscapes dataset without using any backbones.

Introduction

Neural Architecture Search (NAS) (Elsken, Metzen, and Hutter 2019b) is a computational approach for automating the optimization of neural architecture design. Although deep learning has been widely used for medical image segmentation, the most common deep networks used in practice are still designed manually. In this work, we focus on applying NAS for medical image segmentation as the targeted application. To optimize the NAS that looks for the best architecture for image segmentation, the search task can be decomposed into three parts: (i) a supernet to generate all possible architecture candidates, (ii) a global search of neural architecture paths from the supernet, and (iii) a local search of the cell architectures, namely operations including the conv/deconv kernels and the pooling parameters. The NAS space to explore is exponentially large w.r.t. the number of generated candidates, the paths between nodes, the network depth, and the available cell operations to choose from. The computational burden of NAS for image segmentation is much higher than that of other tasks such as image classification, so each architecture verification step takes longer to complete. As a result, fewer NAS methods work successfully for image segmentation. In addition, none is designed for light-weight semantic segmentation, which is very important for AV (Automobile Vehicle)-related applications.

Copyright © 2022, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

The main challenge of NAS is how to deal with the exponentially large search space when exploring and evaluating neural architectures. We tackle this problem with a formulation of what needs to be considered first and how to effectively reduce search complexity. Most segmentation network designs (Ronneberger, Fischer, and Brox 2015; Fourure et al. 2017; Weng et al. 2019; Liu et al. 2019) use U-Nets to achieve better accuracies in image segmentation. For example, Auto-DeepLab (Liu et al. 2019) designs a level-based U-Net as the supernet whose search space grows exponentially with its level number L and depth parameter D. Jointly searching for network-level and cell-level architectures creates huge challenges and inefficiency in determining the best architecture. To avoid exponential growth in the cell search space, only one path in Auto-DeepLab is selected and sent to the next node. This limit is unreasonable since more inputs to the next node can generate richer features for image segmentation.
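To make the exponential growth concrete, the following is a minimal counting sketch. It rests on simplifying assumptions that are not taken from the paper: every cell edge independently picks one of a fixed set of operations, and a network-level path starts at the highest-resolution scale and, at each layer, may keep its scale or move one scale up or down. The function names `count_cell_configs` and `count_paths` are hypothetical and only serve this illustration.

```python
def count_cell_configs(num_edges: int, num_ops: int) -> int:
    """Number of cell structures if each edge picks one operation independently."""
    return num_ops ** num_edges


def count_paths(levels: int, depth: int) -> int:
    """Number of single paths through a trellis of `levels` scales and `depth` layers.

    Assumption: the path starts at scale index 0 and each step either keeps
    the scale or moves to a neighbouring scale, staying inside the trellis.
    """
    # counts[s] = number of distinct paths that currently end at scale index s
    counts = [1] + [0] * (levels - 1)
    for _ in range(depth - 1):
        nxt = [0] * levels
        for scale, ways in enumerate(counts):
            for move in (-1, 0, 1):  # up, keep, down
                target = scale + move
                if 0 <= target < levels:
                    nxt[target] += ways
        counts = nxt
    return sum(counts)


if __name__ == "__main__":
    for depth in (4, 8, 12):
        print(depth, count_paths(levels=4, depth=depth))
    print(count_cell_configs(num_edges=14, num_ops=8))
```

Even in this toy model, the number of paths multiplies with every added layer, and the joint space is the product of the path count and the cell count, which is why searching both levels at once is costly.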

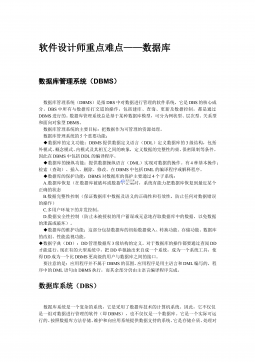

Figure 1: Top: Our fully connected model with the Path-Attention Module (PAM) to search the macro structure. Bottom Left: Before entering the next layer, the feature maps generated by the previous layer pass through the Path-Attention Module (PAM), which chooses the best one to enter the cell. Bottom Right: For efficiency and to limit parameter size, we use only one cell at each layer when we search the cell using DARTS. During training, we stack four of the cells found in the search process. [Diagram labels: Layer 1 to 4; Scale 1/4, 1/8, 1/16, 1/32; Input, Output, Cell, Intermediate Node, Keep, Down, Up, Cat, Upsample, Element-wise Add, Ops1, Ops2, Module, PAM.]

arXiv:2210.00698v1 [cs.CV] 3 Oct 2022

A “repeatable” concept is adopted in this paper to construct our model. Similar to repeatable cell architecture design, our model contains repeated units that share the same structure. The proposed model architecture for image segmentation is shown in Fig. 1. It is based on differentiable learning and is as efficient as the DARTS (Differentiable ARchiTecture Search) method (Liu, Simonyan, and Yang 2019), in contrast to other NAS methods based on reinforcement learning (RL) and evolution (EV). In addition, we modify the concept of CSPNet (Wang et al. 2020) to recursively use only half of the channels to pass through the cell we searched for. The resulting RSPNet (Recursive Stage Partial Network) makes our search procedure much more efficient and results in a light-weight architecture for semantic segmentation. The proposed architecture is simple, efficient, and effective in image segmentation. Both the macro- and micro-structure searches can be completed in two days of computation on two V100 GPUs. The light-weight NAS network, with only 1/4 of the parameter size of SoTA architectures, achieves state-of-the-art performance on the Cityscapes dataset without any backbones. The main contributions of this paper are summarized as follows:

• We propose a two-stage search method to decrease memory usage and speed up search time.

• We design a cell-based architecture that can construct a complex model by stacking the cell we searched for.

• RSPNet makes our search procedure much more efficient and results in our light-weight architecture for semantic segmentation.

• The proposed PAM selects the paths and fuses more inputs than Auto-DeepLab (Liu et al. 2019) and HiNAS (Cho et al. 2020) to generate richer features for better image segmentation.

• Without using any backbone, our architecture outperforms SoTA methods with improved accuracy and efficiency on the Cityscapes (Cordts et al. 2016) dataset.
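The idea of recursively passing only half of the channels through the searched cell can be sketched as follows. This is one plausible reading of the description, not the authors' implementation: the function `rsp_block`, the `recursions` parameter, the list-of-lists channel representation, and the stand-in `double_cell` are all assumptions made for illustration.

```python
from typing import Callable, List

Channel = List[float]                    # one flattened feature channel
Cell = Callable[[List[Channel]], List[Channel]]


def rsp_block(channels: List[Channel], cell: Cell, recursions: int) -> List[Channel]:
    """Bypass half of the channels and recurse on the other half.

    Assumption: at every recursion level the first half of the channels is
    kept unchanged (CSPNet-style bypass), and only the innermost remaining
    half is processed by the cell. Outputs are concatenated along channels.
    """
    if recursions == 0 or len(channels) < 2:
        return cell(channels)
    half = len(channels) // 2
    bypass, active = channels[:half], channels[half:]
    return bypass + rsp_block(active, cell, recursions - 1)


def double_cell(channels: List[Channel]) -> List[Channel]:
    """Hypothetical stand-in for a searched cell: doubles every value."""
    return [[2.0 * v for v in ch] for ch in channels]


if __name__ == "__main__":
    feats = [[1.0], [2.0], [3.0], [4.0]]
    print(rsp_block(feats, double_cell, recursions=1))
    print(rsp_block(feats, double_cell, recursions=2))
```

Under this reading, the cell only ever sees a fraction of the channels, which is where the savings in parameters and computation would come from, while the bypassed channels preserve the original features.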

Related Works

Mainstream NAS algorithms generally consist of three basic steps: supernet generation, architecture search, and network cell parameter optimization. Approaches for architecture search can be organized into three categories (Elsken, Metzen, and Hutter 2019b): reinforcement learning (RL) based, evolution (EV) based, and gradient based. In what follows, the details of each category are discussed.

RL-based methods (Baker et al. 2017; Zoph and Le 2017) use a controller to sample neural network architectures (NNAs) and learn a reward function to generate better architectures through exploration and exploitation. Although an RL-based NAS approach can construct a stable architecture for evolution, it needs a huge number of trials to obtain a positive reward for updating architectures and is thus very computationally expensive. For example, in (Zhong et al. 2018), a cell-based Q-learning approach is evaluated on ImageNet, and the search takes up to 9 GPU-days to run.

Evolution-based methods (Real et al. 2017; Real et al. 2019; Liu et al. 2018; Elsken, Metzen, and Hutter 2019a) apply evolution operators (e.g., crossover and mutation) based on the genetic algorithm (GA) to continuously adjust NNAs and improve their quality across generations. These methods suffer from high computational cost in optimizing the model generator when validating the accuracy of each candidate architecture. Compared to the above methods, which rely on optimization over discrete search spaces, gradient-based methods can optimize much faster by searching in continuous spaces.
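The continuous search used by gradient-based methods can be illustrated with a DARTS-style mixed operation (Liu, Simonyan, and Yang 2019), in which an edge outputs a softmax-weighted sum of all candidate operations and the discrete choice is read off afterwards from the largest architecture weight. The sketch below is a scalar toy version under stated assumptions: the names `mixed_op` and `derive_op` and the three candidate operations are hypothetical, and no gradient step is shown.

```python
import math
from typing import Callable, List, Sequence

Op = Callable[[float], float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over architecture parameters."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x: float, ops: Sequence[Op], alphas: Sequence[float]) -> float:
    """Convex combination of all candidate operations applied to x."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, ops))


def derive_op(alphas: Sequence[float]) -> int:
    """Index of the operation kept after the search (largest alpha)."""
    return max(range(len(alphas)), key=lambda i: alphas[i])


if __name__ == "__main__":
    # Hypothetical candidates: identity, zero (no connection), scale by 2.
    candidates: List[Op] = [lambda v: v, lambda v: 0.0, lambda v: 2.0 * v]
    print(mixed_op(3.0, candidates, alphas=[0.0, 0.0, 0.0]))
    print(derive_op([0.1, 2.0, -1.0]))
```

Because the output is a smooth function of `alphas`, the architecture weights can be updated by gradient descent together with the network weights, which is what removes the need to train each discrete candidate separately.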

Gradient-based methods: The Differentiable ARchiTecture Search (DARTS) (Liu, Simonyan, and Yang 2019) significantly improves search efficiency by computing a convex combination of a set of operations where the best architecture can be optimized by gradient descent algorithms. In DARTS, a supernet is constructed by placing a mixture of candidate operations on each edge rather than applying a single operation to a node. An attention mechanism on the connections is adopted to remove weak connections, such that all supernet weights can be efficiently optimized jointly with a continuous relaxation of the search space via gradient descent. The best architecture is found efficiently by restricting the search space to the subgraphs of the supernet. However, this acceleration comes with a penalty when limit-
