Learning "O" Helps for Learning More: Handling the Unlabeled Entity Problem for Class-incremental NER

Ruotian Ma1∗, Xuanting Chen1∗, Lin Zhang1, Xin Zhou1, Junzhe Wang1, Tao Gui2†, Qi Zhang1†, Xiang Gao3, Yunwen Chen3

1 School of Computer Science, Fudan University, Shanghai, China
2 Institute of Modern Languages and Linguistics, Fudan University, S...

相关推荐

-

公司日常考勤制度VIP免费

2024-11-29 17

2024-11-29 17 -

公司人事考勤制度VIP免费

2024-11-29 19

2024-11-29 19 -

公司规章制度汇编VIP免费

2024-11-29 20

2024-11-29 20 -

岗位绩效工资制度VIP免费

2024-11-29 20

2024-11-29 20 -

保密制度汇编VIP免费

2024-11-29 21

2024-11-29 21 -

《绩效管理制度》VIP免费

2024-11-29 24

2024-11-29 24 -

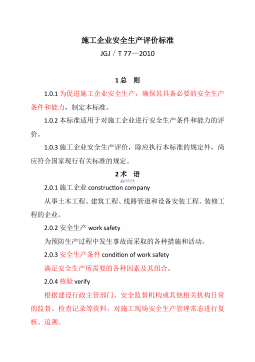

《施工企业安全生产评价标准》JGJ/T-77—2010VIP免费

2024-12-14 232

2024-12-14 232 -

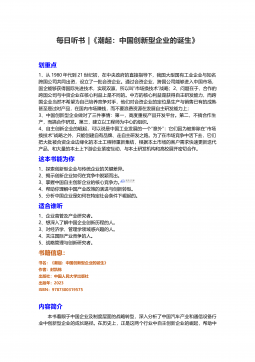

《潮起:中国创新型企业的诞生》导读VIP免费

2024-12-14 71

2024-12-14 71 -

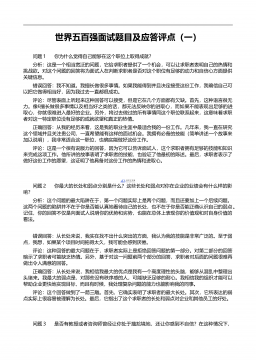

岗位面试题库合集-通用面试题库-世界五百强面试题目及应答评点(全套50题)VIP免费

2024-12-15 80

2024-12-15 80 -

(试行)建设项目工程总承包合同示范文本GF-2011-0216VIP免费

2025-01-13 148

2025-01-13 148

作者详情

相关内容

-

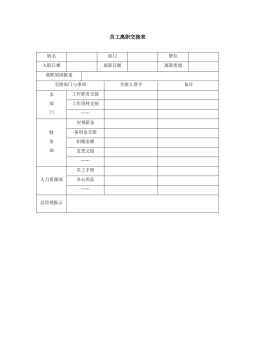

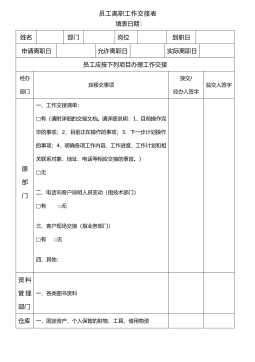

47_员工离职交接表-模板

分类:人力资源/企业管理

时间:2025-08-23

标签:无

格式:DOC

价格:10 玖币

-

46_员工离职工作交接表

分类:人力资源/企业管理

时间:2025-08-23

标签:无

格式:DOC

价格:10 玖币

-

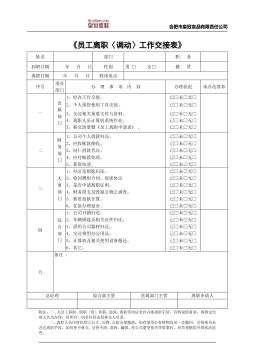

45_员工离职〈调动〉工作交接表

分类:人力资源/企业管理

时间:2025-08-23

标签:无

格式:DOC

价格:10 玖币

-

2025年行政事业性国有资产报告软件操作讲解20251216

分类:人力资源/企业管理

时间:2026-01-05

标签:无

格式:PPTX

价格:10 玖币

-

2025年度行政事业性国有资产报告 - 资产报告及公共基础设施等20251217

分类:人力资源/企业管理

时间:2026-01-05

标签:无

格式:PPTX

价格:10 玖币
