An Ensemble Teacher-Student Learning Approach with Poisson Sub-sampling to Differential Privacy Preserving Speech Recognition

Chao-Han Huck Yang1, Jun Qi1, Sabato Marco Siniscalchi1,2,3, Chin-Hui Lee1

1Georgia Institute of Technology, USA and 2Kore University of Enna, Italy

3Department of Electronic Systems, NTNU, Trondheim, Norway

{huckiyang,chl}@gatech.edu

Abstract

We propose an ensemble learning framework with Poisson sub-sampling to effectively train a collection of teacher models that provides a differential privacy (DP) guarantee for the training data. Through boosting under DP, a student model derived from the training data suffers little degradation relative to models trained with no privacy protection. Our proposed solution leverages two mechanisms: (i) privacy budget amplification via Poisson sub-sampling, so that a target prediction model requires less noise to achieve the same level of privacy budget, and (ii) a combination of the sub-sampling technique and an ensemble teacher-student learning framework that introduces DP-preserving noise at the output of the teacher models and transfers DP-preserving properties via noisy labels. Privacy-preserving student models are then trained with the noisy labels to learn the DP-protected knowledge of the teacher model ensemble. Experimental evidence on spoken command recognition and continuous speech recognition of Mandarin speech shows that our proposed framework greatly outperforms existing benchmark DP-preserving algorithms in both speech processing tasks.

Index Terms: Speech recognition, privacy-preserving learning,

Mandarin speech recognition

1. Introduction

Speech data privacy has attracted increasing public attention [1] as personal speech data are used to deploy widespread speech applications. In fact, failures of privacy protection have been reported and have led to large financial penalties, including Google’s 57 million euro fine in 2019 and Amazon’s 746 million euro fine in 2021, both related to insufficient data protection and privacy measures under GDPR in their deployed advertisement models and speech processing systems.

Differential privacy [2] (DP) is one solution that provides both rigorous mathematical guarantees for measuring the privacy budget and effective system results against privacy-based attacks [3]. The foundation of DP lies in theoretical cryptography and point-wise perturbation introduced by the database research community. By applying random perturbation (e.g., additive noise or shuffling) under DP, personal information can be measured by statistical divergences [4] in terms of privacy budgets¹ (e.g., the power of anonymization [5]). Therefore, how to connect DP-preserving mechanisms and DNN-based systems has become an important research topic due to its potential to provide formal measurement [6] of privacy budgets for end-users.

¹ Apple has deployed differential privacy measurement with a privacy budget (ε=8) on its iOS and macOS systems. The results are reported in an official document at https://www.apple.com/privacy/docs/Differential_Privacy_Overview.pdf.
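For reference, the privacy budget mentioned above is usually formalized through the standard (ε, δ)-DP definition from the DP literature. The notation below (mechanism M, neighboring datasets D and D′ that differ in one record, output set S) is an assumption introduced here for illustration and does not appear in the text above.

```latex
\Pr\big[\mathcal{M}(D) \in S\big] \;\le\; e^{\varepsilon}\, \Pr\big[\mathcal{M}(D') \in S\big] + \delta
\qquad \text{for all neighboring } D, D' \text{ and all measurable } S .
```

A smaller ε (and δ) means the output distribution changes less when one record is added or removed, i.e., a tighter privacy budget.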

There are two main streams of DP-related ML research: (1) reducing the cost in privacy budget (e.g., fewer additive distortions) at the same system performance and (2) advancing DP-preserving algorithms for deep learning-based systems (e.g., improving system performance under a fixed privacy budget). Previous works demonstrate that training ML models with DP-preserving intervention yields a severe performance degradation. Meanwhile, teacher-student learning (TSL) [7] serves as an advanced privacy solution that transfers knowledge with DP-preserving properties from the teacher models and their outputs to a private student model. TSL-based DP-aware training, including private aggregation of teacher ensembles [7] (PATE) and PATE with generative models [8], applies DP-aware noisy perturbation and avoids interfering with the data directly, which would degrade model performance.
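The noisy aggregation step at the heart of PATE-style training can be sketched as follows. This is a minimal illustration, assuming Laplace noise with scale 1/gamma added to per-class vote counts as in the original PATE formulation; the function names, the number of teachers, and the value of `gamma` are hypothetical and are not taken from this paper.

```python
import random
from collections import Counter


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponential variables with mean `scale`
    # follows a Laplace distribution with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_vote(teacher_preds, num_classes, gamma, rng):
    """Return the class whose noise-perturbed vote count is largest."""
    counts = Counter(teacher_preds)
    noisy = [counts.get(c, 0) + laplace_noise(1.0 / gamma, rng)
             for c in range(num_classes)]
    return max(range(num_classes), key=lambda c: noisy[c])


rng = random.Random(0)
# Hypothetical predictions of 50 teachers on one unlabeled utterance (3 classes).
teacher_preds = [2] * 40 + [0] * 6 + [1] * 4
label = noisy_vote(teacher_preds, num_classes=3, gamma=0.5, rng=rng)
```

The student only ever sees `label`, never the sensitive training data or the individual teacher outputs, which is how the DP property is transferred through noisy labels.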

In particular, ASR-related tasks are even more sensitive to the scale of training data when advanced deep techniques, such as self-attention networks [9] and recurrent neural network transducers [10], are used to achieve good recognition results. We are thus motivated to propose a solution that efficiently allocates training data for deploying DP-preserving ASR systems. In this work, we introduce a new private teacher training framework that uses DP-preserving Poisson sub-sampling [11, 12] from a sensitive dataset, where the sub-sampling mechanism aims to avoid the splitting into subsets [7, 13] used in PATE to calculate the privacy budget.
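The contrast between Poisson sub-sampling and disjoint splitting can be sketched as below. This is an illustrative sketch only: the dataset size, sampling rate `q`, and function names are hypothetical, and `amplified_epsilon` uses the commonly cited amplification bound ε′ = log(1 + q(e^ε − 1)) from the sub-sampling literature, which is an assumption here and may differ from the exact privacy accounting used in this work.

```python
import math
import random


def poisson_subsample(n_items, q, rng):
    """Include each index independently with probability q."""
    return [i for i in range(n_items) if rng.random() < q]


def disjoint_partition(n_items, n_teachers):
    """Split indices into non-overlapping, equally sized subsets."""
    return [list(range(k, n_items, n_teachers)) for k in range(n_teachers)]


def amplified_epsilon(eps, q):
    """Assumed amplification bound for an eps-DP mechanism run on a
    Poisson sub-sample drawn with rate q."""
    return math.log(1.0 + q * (math.exp(eps) - 1.0))


rng = random.Random(0)
n_items, n_teachers, q = 1000, 10, 0.3  # hypothetical settings

# Each teacher draws its own sub-sample from the full sensitive set.
poisson_sets = [poisson_subsample(n_items, q, rng) for _ in range(n_teachers)]
# Disjoint splitting: each teacher sees only n_items / n_teachers examples.
partition_sets = disjoint_partition(n_items, n_teachers)
```

With these hypothetical settings each Poisson-sampled teacher sees roughly 300 examples instead of 100, while `amplified_epsilon(1.0, 0.3)` is smaller than 1.0, reflecting the reduced per-teacher privacy cost under the assumed bound.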

We provide an experimental study on Mandarin speech recognition, where we conduct our experiments on two different tasks: isolated word recognition and continuous speech recognition.

2. Related Work

2.1. Privacy Preservation & Applications to Speech Tasks

Recent research on ensuring data privacy in an ASR system can be classified into two groups: (i) systemic, such as federated computing [14] and feature isolation [15], and (ii) algorithmic, mainly machine learning with differential privacy [16], such as differentially private stochastic gradient descent [17] (DPSGD) and PATE [7, 18]. Federated architectures [15] have been studied in the speech processing community to increase privacy protection. For example, the average gradient method [19] was used to update the learning model for decentralized training. However, those system-level approaches usually make some assumptions about the limited accessibility of malicious attackers and provide less universal measures of privacy guarantees. Meanwhile, algorithmic efforts focus on “system-agnostic” studies with more flexibility.
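As a rough illustration of the algorithmic group, a single DPSGD update can be sketched as per-example gradient clipping followed by Gaussian noise. This is a generic, simplified sketch in plain Python; the function name, the parameters (`clip_norm`, `noise_multiplier`), and the toy gradients are hypothetical and do not reproduce the configuration of any system cited here.

```python
import math
import random


def dp_sgd_step(weights, per_example_grads, lr, clip_norm, noise_multiplier, rng):
    """One DPSGD-style update: clip each example's gradient, sum, add noise."""
    dim = len(weights)
    summed = [0.0] * dim
    for g in per_example_grads:
        norm = math.sqrt(sum(x * x for x in g))
        # Scale the gradient down so its L2 norm is at most clip_norm.
        scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for j in range(dim):
            summed[j] += g[j] * scale
    # Gaussian noise calibrated to the clipping bound.
    sigma = noise_multiplier * clip_norm
    batch = len(per_example_grads)
    noisy_mean = [(summed[j] + rng.gauss(0.0, sigma)) / batch for j in range(dim)]
    return [w - lr * g for w, g in zip(weights, noisy_mean)]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]  # toy per-example gradients
new_weights = dp_sgd_step([0.0, 0.0], grads, lr=1.0, clip_norm=1.0,
                          noise_multiplier=1.1, rng=rng)
```

Because the noise is injected into every gradient update, the perturbation acts directly on training, in contrast to PATE-style methods that perturb only the aggregated teacher outputs.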

arXiv:2210.06382v1 [eess.AS] 12 Oct 2022

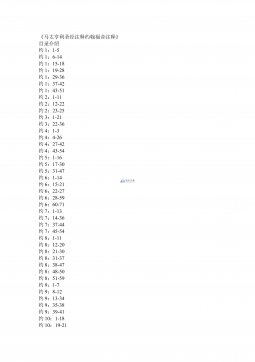
