
SilverAlign: MT-Based Silver Data Algorithm For Evaluating Word Alignment

Abdullatif Köksal1,2, Silvia Severini1, Hinrich Schütze1

1Center for Information and Language Processing (CIS), LMU Munich, Germany

2Munich Center for Machine Learning (MCML), Germany

{akoksal, silvia}@cis.lmu.de

Abstract

Word alignments are essential for a variety of NLP tasks. Therefore, choosing the best approaches for their creation is crucial. However, the scarce availability of gold evaluation data makes the choice difficult. We propose SilverAlign, a new method to automatically create silver data for the evaluation of word aligners by exploiting machine translation and minimal pairs. We show that performance on our silver data correlates well with gold benchmarks for 9 language pairs, making our approach a valid resource for evaluation in different domains and languages when gold data are not available. This addresses the important scenario of missing gold alignment data for low-resource languages.

1 Introduction

Word alignments (WA) are crucial for statistical machine translation (SMT), where they constitute the basis for creating probabilistic translation dictionaries. They are also relevant to tasks such as neural machine translation (NMT) (Alkhouli et al., 2018), typological analysis (Östling, 2015), annotation projection (Huck et al., 2019), bilingual lexicon extraction (McKeown et al., 1996; Ribeiro et al., 2001), and the creation of multilingual embeddings (Dufter et al., 2018). Different approaches have been investigated, using statistical models such as the IBM models (Brown, 1993) and Giza++ (Och and Ney, 2003). More recently, Östling and Tiedemann (2016) introduced Eflomal, a high-quality word aligner that is widely used today for its ability to align many languages effectively. Other methods create alignments from the attention matrices of NMT models (Zenkel et al., 2019), solve multitask problems (Garg et al., 2019), or leverage multilingual word embeddings (Sabet et al., 2020).

Given the variety of approaches available for

aligning words, the choice of the best alignment

methods for a certain parallel corpus has gained

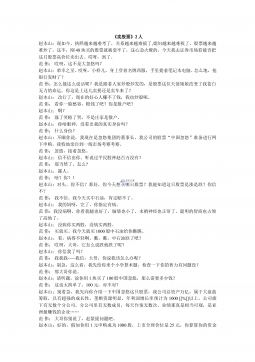

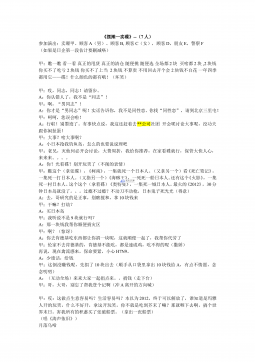

Figure 1: An example of our technique with minimal

pairs for a source sentence in English and the target lan-

guage, Blissymbols.1For a word (dog) in the source sen-

tence, we create minimal pairs (orchestra, cat, child),

and then we can align the word dog to the correct sym-

bol in Blissymbols with the help of translations.

attention. Such decision requires evaluation data

for the pair of languages and specific domain ad-

dressed. Collecting gold data or high-quality word

alignment benchmarks requires the work of various

annotators as for the Blinker project of Melamed

(1998) and WA shared tasks (Mihalcea and Peder-

sen,2003;Martin et al.,2005) which can be a time-

consuming or impractical job for lesser spoken

languages. Melamed (1998) reports that annotat-

ing word alignments for 100 sentences in English-

French would take 9 to 22 hours. Additionally, the

annotation process often leads to conflicts among

annotators (Macken,2010). Hence, gold data is

scarce or completely unavailable for many low-

resource languages and, when dealing with domain-

specific data such as medical or legal text, such

availability is even less. Therefore analyzing exist-

ing word alignment models with a varying number

of language pairs in different domains is a challeng-

ing task.

¹ Blissymbols is a constructed language established in 1949 to help people with communication difficulties. The blissonline.se dictionary is used for the examples.

arXiv:2210.06207v2 [cs.CL] 27 Mar 2023

We propose SilverAlign, a novel algorithm to create silver evaluation data that guides the choice of appropriate word alignment methods. Our approach is based on a machine translation model and exploits minimal sentence pairs to create parallel corpora with alignment links. Figure 1 illustrates our core idea with minimal pairs in English and Blissymbols. We create alternative sentences that form minimal pairs with the source sentence, rely on a machine translation model to track which target words change for each alternative, and then align those target words with the changed word in the source sentence.
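The core idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' implementation: translations are assumed to be whitespace-tokenized, Python's `difflib.SequenceMatcher` stands in for the change tracking, and a source word is assumed to align to the target positions that every one of its alternatives changes. The function names and the German example sentences are hypothetical.

```python
from difflib import SequenceMatcher


def changed_target_positions(original: list[str], alternative: list[str]) -> set[int]:
    """Indices of tokens in `original` that are replaced or deleted in `alternative`.

    Inserted tokens are ignored because they have no position in the original translation.
    """
    matcher = SequenceMatcher(a=original, b=alternative, autojunk=False)
    changed: set[int] = set()
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            changed.update(range(i1, i2))
    return changed


def align_source_word(original: list[str], alternatives: list[list[str]]) -> set[int]:
    """Target positions changed by *every* alternative translation (assumed rule)."""
    if not alternatives:
        return set()
    common = changed_target_positions(original, alternatives[0])
    for alt in alternatives[1:]:
        common &= changed_target_positions(original, alt)
    return common


# Hypothetical English-German example for the source word "dog" in "the dog sleeps".
t_i = "der Hund schläft".split()
alt_translations = [
    "die Katze schläft".split(),  # dog -> cat: the article changes too
    "das Pferd schläft".split(),  # dog -> horse: the article changes too
    "der Vogel schläft".split(),  # dog -> bird: only the noun changes
]
print(align_source_word(t_i, alt_translations))  # {1}, i.e. "Hund"
```

Requiring agreement across several alternatives filters out incidental changes, such as the German article switching from "der" to "die" when "Hund" becomes "Katze".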

In summary, our contributions are:

1. We find that our silver benchmarks rank methods with high consistency compared to rankings based on gold data. This means that we can identify the best methods based on silver data when no gold data are available, which is frequently the case for word alignment in low-resource scenarios.

2. We conduct an extensive analysis of our silver resource with respect to gold data for 9 language pairs from different language families and with different levels of resource availability. We perform various experiments for word alignment models on sub-word tokenization, tokenizer vocabulary size, performance across Part-of-Speech tags, and word frequencies.

3. SilverAlign supports a more accurate evaluation and a more in-depth analysis than small gold sets (e.g., English-Hindi has only 90 sentences) because we can automatically create larger evaluation benchmarks. SilverAlign is also robust to domain changes, as it shows a high correlation between gold benchmarks and both in- and out-of-domain silver benchmarks.

4. It has been shown that machine translation performance (including NMT performance) can be improved by choosing a tokenization that optimizes compatibility between source and target languages (Deguchi et al., 2020). We show that SilverAlign can be used to find such a compatible tokenization for each language pair.

5. We make our silver data and code available as a resource for future work that takes advantage of our silver evaluation datasets.² Our code can be used to create silver benchmarks for multiple languages, and our silver benchmark can be used out of the box.

² https://github.com/akoksal/SilverAlign
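Contribution 1 concerns how consistently silver and gold benchmarks rank the same aligners. A standard way to quantify this, assumed here because the metric is not named at this point of the paper, is Spearman's rank correlation between the scores each aligner obtains on silver data and on gold data. The stdlib-only sketch below computes it with averaged ranks for ties; the function names and the input numbers are illustrative placeholders, not results from the paper.

```python
import math


def average_ranks(values: list[float]) -> list[float]:
    """Ascending 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the first value of the run.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least two items")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(sxx * syy)


# Placeholder scores for three aligners on silver and gold data (not real results).
print(spearman([1.0, 2.0, 3.0], [10.0, 30.0, 20.0]))  # 0.5
```

A value of 1.0 would mean that silver and gold data order the aligners identically, which is the property that matters when silver data are used to pick a method.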

The rest of the paper is organized as follows. Section 2 describes related work. The details of the SilverAlign method are explained in Section 3. Section 4 describes the experimental setup, evaluation metrics, and datasets. We compare the results on our silver benchmarks to gold data in Section 5. Finally, we draw conclusions and discuss future work in Section 6.

2 Related Work

2.1 Word alignment analysis

Many works have analyzed word alignment performance with respect to different factors. Ho and Yvon (2019) compare discrete and neural word aligners and show the superiority of the latter. They also compare them with respect to unaligned words, rare words, Part-of-Speech (PoS) tags, and distortion errors. Asgari et al. (2020) study word alignment results when using subword-level tokenization and show improved performance compared to the word level. Sabet et al. (2020) analyze the performance of word aligners across PoS tags for English/German and show that Eflomal performs poorly on links with high distortion. They also analyze the alignments based on word frequency and show that, for rare words, performance is lower when aligning at the word level than at the subword level.
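Breakdowns such as the per-PoS analysis above amount to scoring alignment links separately for each category. The sketch below computes precision, recall, and F1 over sets of (source, target) links and buckets each link by a tag of its source word. It is a simplified, hypothetical helper: it ignores the sure/possible distinction that some gold alignment sets make, and the cited works may assign links to categories differently.

```python
from collections import defaultdict

Link = tuple[int, int]  # (source position, target position)


def prf(pred: set[Link], gold: set[Link]) -> tuple[float, float, float]:
    """Precision, recall and F1 of predicted links against gold links."""
    hits = len(pred & gold)
    precision = hits / len(pred) if pred else 0.0
    recall = hits / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def prf_by_category(
    pred: set[Link], gold: set[Link], src_tags: list[str]
) -> dict[str, tuple[float, float, float]]:
    """Score links per category, where a link's category is the tag of its source word."""
    pred_by: dict[str, set[Link]] = defaultdict(set)
    gold_by: dict[str, set[Link]] = defaultdict(set)
    for link in pred:
        pred_by[src_tags[link[0]]].add(link)
    for link in gold:
        gold_by[src_tags[link[0]]].add(link)
    tags = sorted(set(pred_by) | set(gold_by))
    return {tag: prf(pred_by[tag], gold_by[tag]) for tag in tags}


# Hypothetical example: "I love pizza" -> "Ich liebe Pizza", with a wrong link for "love".
tags = ["PRON", "VERB", "NOUN"]
gold = {(0, 0), (1, 1), (2, 2)}
pred = {(0, 0), (1, 2), (2, 2)}
by_tag = prf_by_category(pred, gold, tags)
print(by_tag["VERB"])  # (0.0, 0.0, 0.0)
print(by_tag["NOUN"])  # (1.0, 1.0, 1.0)
```

The same helper works for frequency bins: replace the PoS tags with a bucket label (e.g., "rare" vs. "frequent") per source word.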

Ho and Yvon (2021) analyze the interaction between alignment methods and subword tokenization (Unigram and Byte Pair Encoding (BPE)). They observe that tokenizing into smaller units helps to align rare and unknown words. They also investigate the effect of different vocabulary sizes and conclude that word-based segmentation is suboptimal. We also conduct an experiment in this direction in Section 5.3.
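Comparing subword-level and word-level alignment requires mapping subword links back to words. A common convention, assumed here rather than taken from the cited works, is that two words are aligned if any of their subwords are aligned. The hypothetical helpers below implement that mapping, given the subword pieces of each word.

```python
def word_ids_from_pieces(words_as_pieces: list[list[str]]) -> list[int]:
    """Map each subword to the index of the word it came from."""
    return [w for w, pieces in enumerate(words_as_pieces) for _ in pieces]


def subword_to_word_links(
    sub_links: set[tuple[int, int]], src_word_ids: list[int], tgt_word_ids: list[int]
) -> set[tuple[int, int]]:
    """Two words are aligned if any of their subwords are aligned (assumed convention)."""
    return {(src_word_ids[i], tgt_word_ids[j]) for i, j in sub_links}


# Hypothetical segmentation of "unbelievable results" / "unglaubliche Ergebnisse".
src_ids = word_ids_from_pieces([["un", "believ", "able"], ["results"]])
tgt_ids = word_ids_from_pieces([["un", "glaub", "liche"], ["Ergebnisse"]])
sub_links = {(0, 0), (1, 1), (2, 2), (3, 3)}
print(sorted(subword_to_word_links(sub_links, src_ids, tgt_ids)))  # [(0, 0), (1, 1)]
```

Because the mapping is a set comprehension, several subword links between the same two words collapse into a single word-level link.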

2.2 Silver data creation in NLP

Collecting gold data for evaluating or training sys-

tems can be impractical due to its cost and the

need for human annotators. To solve these issues,

silver data - data generated automatically - has

been widely exploited for different tasks and do-

mains. For the Named Entity Recognition (NER)

task, Rebholz-Schuhmann et al. (2010) introduce

He buys football shoes

Mike buys football shoes

Anna buys football shoes

He sells football shoes

He wants football shoes

He buys tennis shoes

He buys running shoes

He buys football shorts

He buys football jersey

[MASK] buys football shoes He [MASK] football shoes He buys [MASK] shoes He buys football [MASK]

Er kauft Fußballschuhe

Er kauft Tennisschuhe

Er kauft Laufschuhe

MT

BERT

Er kauft Fußballschuhe

Er kauft Fußballshorts

Er kauft Fußballsocken

MT

He buys football shoes

Er kauft Fußballschuhe

He buys football shoes

Er kauft Fußballschuhe

He buys football shoes

Er kauft Fußballschuhe

BERT BERT BERT

MT Er kauft Fußballschuhe 1)

2)

3)

4)

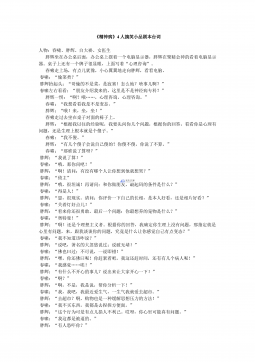

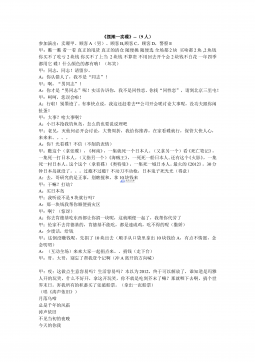

Figure 2: One-to-one and many-to-one examples of English-German alignments according to SilverAlign. The

first step translates a given source sentence (si) to a sentence (ti) in the target language via machine translation.

The second step finds alternatives for each word in sivia a foundation model trained with the masked language

modeling (MLM) objective. The third step translates all alternative sentences of a given word and tracks which

words are changed in ti. If alternative translations change the same word in ti, we label the corresponding align-

ments and merge the affected words in the fourth step. (Details for the first two columns omitted for clarity).

CALBC, a silver standard corpus generated by the

harmonization of multiple annotations, Wu et al.

(2021) create training data for their NER model

through word-to-word machine translation and an-

notation projection, and Severini et al. (2022) cre-

ate named entities pairs from co-occurrence statis-

tics and transliteration models. For the medical

domain, there exist multiple silver sets due to the

difficulty of finding qualified annotators. Examples

are the silver corpus of Rashed et al. (2020) for

training and evaluating COVID-19-related NLP

tools, and DisTEMIST from Miranda-Escalada

et al. (2022), a multilingual dataset for 6 languages

created through annotation transfer and MT for

automatic detection and normalization of disease

mentions from clinical case documents. Paulheim

(2013) introduced DBpedia-NYD for evaluating

the semantic relatedness of resources in DBpedia

and exploiting web search engines. Baig et al.

(2021) propose a silver-standard dependency tree-

bank of Urdu tweets using self-training and co-

training to automatically parse big amounts of data.

Wang et al. (2022) synthesize labeled data using

lexicons to adapt pretrained multilingual models to

low-resource languages.
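Steps 3 and 4 of the Figure 2 caption can be sketched as follows, assuming step 3 has already produced, for each source position, the set of target positions that its alternatives changed. Linking and merging follow an assumed reading of the caption: every such (source, target) pair becomes a link, and source words linked to the same target word are grouped into one many-to-one alignment. All names and the step-3 output are hypothetical.

```python
from collections import defaultdict


def label_links(changed_by_source: dict[int, set[int]]) -> set[tuple[int, int]]:
    """Link source word j to every target word that its alternatives changed."""
    return {(j, k) for j, targets in changed_by_source.items() for k in targets}


def merge_many_to_one(links: set[tuple[int, int]]) -> dict[int, list[int]]:
    """Group source words linked to the same target word (assumed reading of step 4)."""
    groups: dict[int, list[int]] = defaultdict(list)
    for j, k in sorted(links):
        groups[k].append(j)
    return {k: js for k, js in groups.items() if len(js) > 1}


src = "He buys football shoes".split()
tgt = "Er kauft Fußballschuhe".split()
# Hypothetical step-3 output for the Figure 2 example.
changed = {0: {0}, 1: {1}, 2: {2}, 3: {2}}
links = label_links(changed)
for k, js in merge_many_to_one(links).items():
    print(" ".join(src[j] for j in js), "->", tgt[k])  # football shoes -> Fußballschuhe
```

One-to-one links such as He -> Er pass through unchanged; only the compound case produces a merged group.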

3 Method

The pipeline of our silver data creation algorithm is illustrated in Figure 2. Given a source language $S$ and a target language $T$, we now describe the steps to create our word alignment silver data for $S$-$T$:

1. We first collect monolingual data from the source language, $D_S$. Given a sentence $s_i = w^s_1, w^s_2, \ldots, w^s_N \in D_S$ of length $N$, we use a machine translation system to generate the target sentence $t_i = w^t_1, w^t_2, \ldots, w^t_M$, and therefore target data $D_T$.

2. Then, we create minimal pairs for $s_i$ by finding alternative words for each $w^s_j$ in the sentence ($j \in [1, N]$). We use a pretrained Masked Language Model (i.e., English BERT-Large) to find alternative words that fit the context well. For each $s_i$, we create five alternatives per word by masking one word at a time. Examples of minimal pairs for the sentence "I love pizza" are "You love pizza", "I hate pizza", and "I love apples".

3. In the third step, we use a machine translation system to translate all alternative sentences to the target language.³ Based on the changed

³ As recent machine translation models (NLLB Team et al.,
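Step 2 of the method can be sketched with the masked language model hidden behind a plain callable, so that the example runs without downloading a model. The `FillMask` interface, the `toy_fill_mask` stand-in, and the filtering rule (dropping candidates identical to the original word, case-insensitively) are assumptions for illustration; real BERT predictions operate on WordPiece tokens, which this word-level sketch glosses over. The default of five alternatives per word follows the text.

```python
from typing import Callable

# Hypothetical interface: takes a token list containing exactly one "[MASK]" and returns
# candidate fillers, most likely first. In practice a pretrained MLM would back this.
FillMask = Callable[[list[str]], list[str]]


def minimal_pairs(tokens: list[str], fill_mask: FillMask, k: int = 5) -> dict[int, list[list[str]]]:
    """Mask one word at a time and collect up to k alternative sentences per position."""
    pairs: dict[int, list[list[str]]] = {}
    for j, original in enumerate(tokens):
        masked = tokens[:j] + ["[MASK]"] + tokens[j + 1:]
        seen = {original.lower()}  # never "replace" a word with itself
        alternatives: list[list[str]] = []
        for candidate in fill_mask(masked):
            if candidate.lower() in seen:
                continue
            seen.add(candidate.lower())
            alternatives.append(tokens[:j] + [candidate] + tokens[j + 1:])
            if len(alternatives) == k:
                break
        pairs[j] = alternatives
    return pairs


def toy_fill_mask(masked: list[str]) -> list[str]:
    """Toy stand-in for an MLM: fixed candidates per masked position."""
    candidates = {0: ["You", "I", "We"], 1: ["hate", "love", "like"], 2: ["apples", "pasta", "pizza"]}
    return candidates[masked.index("[MASK]")]


for j, alts in minimal_pairs("I love pizza".split(), toy_fill_mask, k=2).items():
    print(j, [" ".join(a) for a in alts])
# 0 ['You love pizza', 'We love pizza']
# 1 ['I hate pizza', 'I like pizza']
# 2 ['I love apples', 'I love pasta']
```

Each alternative differs from the source sentence in exactly one position, which is what makes it possible to attribute any change in the translation to that position.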
