
BioNLI: Generating a Biomedical NLI Dataset Using Lexico-semantic Constraints for Adversarial Examples

Mohaddeseh Bastan

Stony Brook University

mbastan@cs.stonybrook.edu

Mihai Surdeanu

University of Arizona

msurdeanu@email.arizona.edu

Niranjan Balasubramanian

Stony Brook University

niranjan@cs.stonybrook.edu

Abstract

Natural language inference (NLI) is critical for complex decision-making in the biomedical domain. One key question, for example, is whether a given biomedical mechanism is supported by experimental evidence. This can be seen as an NLI problem, but there are no directly usable datasets to address it. The main challenge is that manually creating informative negative examples for this task is difficult and expensive. We introduce a novel semi-supervised procedure that bootstraps an NLI dataset from an existing biomedical dataset that pairs mechanisms with experimental evidence in abstracts. We generate a range of negative examples using nine strategies that manipulate the structure of the underlying mechanisms, both with rules (e.g., flipping the roles of the entities in the interaction) and, more importantly, as perturbations via logical constraints in a neuro-logic decoding system (Lu et al., 2021b).

We use this procedure to create a novel dataset for NLI in the biomedical domain, called BioNLI, and benchmark two state-of-the-art biomedical classifiers. The best result we obtain is around the mid-70s in F1, suggesting the difficulty of the task. Critically, the performance on the different classes of negative examples varies widely, from 97% F1 on the simple role-change negative examples to barely better than chance on the negative examples generated using neuro-logic decoding.¹

1 Introduction

Biomedical research has progressed at a tremen-

dous pace, to the point where PubMed

2

has indexed

well over 1M publications per year in the past

eight years. Many of these publications include

high-level mechanistic knowledge, e.g., protein-

signaling pathways, which is critical for the under-

1

Code and data is available at

https://github.com/

StonyBrookNLP/BioNLI

2https://pubmed.ncbi.nlm.nih.gov

Premise:

The outflow of uracil from the yeast Saccha-

romyces cerevisiae is known to be relatively fast in certain

circumstances, to be retarded by proton conductors and

to occur in strains lacking a uracil proton symport. In the

present work, it was shown that uracil exit from washed

yeast cells is an active process, creating a uracil gradient

of the order of -80 mV relative to the surrounding medium.

Glucose accelerated uracil exit, while retarding its entry.

DNP or sodium azide each lowered the gradient to about

-30 mV, simultaneously increasing the rate of uracil entry.

They also lowered cellular ATP content. Manipulation of

the external ionic conditions governing delta mu H+ at

the plasma membrane had no detectable effect on uracil

transport in yeast preparations thoroughly depleted of ATP.

Consistent Hypothesis:

It was concluded that <re> uracil

<er> exit is probably not driven by the s <el> proton <le>

gradient but may utilize ATP directly.

Adversarial Hypothesis:

It is concluded that <el> uracil

<le> exit from S. cerevisiae is an active process facilitated

bya<re> proton <er> gradient and ATP.

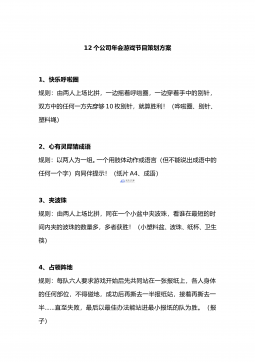

Table 1: Example of a premise/hypothesis pair in the

BioNLI dataset, as well as of an adversarial hypoth-

esis that was automatically generated by an encoder-

decoder network that manipulated the lexico-semantic

constraints in the original hypothesis. Here the regula-

tor entity is marked as <re> entity <er>, and the regu-

lated entity is marked as <el> entity <le>.

standing of many diseases (Valenzuela-Escarcega

et al.,2018), but which must be supported by lower-

level experimental evidence to be trustworthy. De-

veloping models that can understand and reason

about such mechanisms is crucial for support-

ing effective access to the rich biomedical knowl-

edge (Bastan et al.,2022). In particular, the current

information deluge motivates the need for develop-

ing tools that can answer the question: “Is a given

mechanism supported by experimental evidence?”.

This can be seen as a biomedical natural language

inference (NLI) problem. Despite the prevalence of

many biomedical NLP datasets (Demner-Fushman

et al.,2020;Bastan et al.,2022;Krallinger et al.,

2017), there are no datasets that can be directly

used to address this task.

arXiv:2210.14814v1 [cs.CL] 26 Oct 2022

However, manually creating a biomedical NLI dataset that focuses on mechanistic information is challenging. Table 1, which contains an actual example from our proposed dataset, highlights several difficulties. First, understanding biomedical mechanisms and the necessary experimental evidence that supports (or does not support) them requires tremendous expertise and effort (Kaushik et al., 2019). For example, the premise shown is considerably longer than the average premise in open-domain NLI datasets such as SNLI (Bowman et al., 2015), and it is packed with domain-specific information. Second, negative examples are seldom explicit in publications, and creating them manually risks introducing biases, simplistic information, and systematic omissions (Wu et al., 2021).

In this work, we introduce a novel semi-supervised procedure for the creation of biomedical NLI datasets that include mechanistic information. Our key contribution is automating the creation of negative examples that are informative without being simplistic. Intuitively, we achieve this by defining lexico-semantic constraints based on the mechanism structures in biomedical literature abstracts. Our dataset creation proceeds as follows:

(1) We extract positive entailment examples, each consisting of a premise and a hypothesis, from abstracts of PubMed publications. We focus on abstracts that contain an explicit conclusion sentence describing a biomedical interaction between two entities (a regulator and a regulated protein or chemical). This yields premises that are considerably longer than premises in other open-domain NLI datasets: between 3 and 15 sentences.

(2) We generate a wide range of negative examples by manipulating the structure of the underlying mechanisms, both with rules (e.g., flipping the roles of the entities in the interaction) and, more importantly, by imposing the perturbed conditions as logical constraints in a neuro-logic decoding system (Lu et al., 2021b). This battery of strategies produces negative examples that range in difficulty and thus provide an important framework for the evaluation of NLI methods.
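Neuro-logic decoding (Lu et al., 2021b) expresses lexical requirements as a formula in conjunctive normal form: each clause is a disjunction of literals that require a phrase to appear, or not to appear, in the output, and a generation satisfies the formula when every clause has at least one satisfied literal. The minimal sketch below only checks a finished sentence against such a formula; it does not implement the decoding search. The example constraints (keep both entities, forbid the negation "not") and all names are hypothetical illustrations, not the constraint sets used to build BioNLI.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Literal:
    """D(phrase) when positive is True, otherwise NOT D(phrase)."""
    phrase: str
    positive: bool = True

    def holds(self, text: str) -> bool:
        # Case-insensitive whole-phrase match; real decoders track token sequences instead.
        pattern = rf"(?<!\w){re.escape(self.phrase)}(?!\w)"
        present = re.search(pattern, text, flags=re.IGNORECASE) is not None
        return present if self.positive else not present


# A CNF formula: a list of clauses, each clause a list of literals joined by OR.
Clause = list[Literal]


def satisfies(text: str, cnf: list[Clause]) -> bool:
    """True when every clause contains at least one literal that holds."""
    return all(any(lit.holds(text) for lit in clause) for clause in cnf)


# Hypothetical constraints: keep both entities, forbid the negation "not".
cnf = [
    [Literal("uracil")],
    [Literal("proton")],
    [Literal("not", positive=False)],
]

original = ("It was concluded that uracil exit is probably not driven by the "
            "proton gradient but may utilize ATP directly.")
perturbed = ("It is concluded that uracil exit from S. cerevisiae is an active "
             "process facilitated by a proton gradient and ATP.")

print(satisfies(original, cnf))   # False: "not" is present
print(satisfies(perturbed, cnf))  # True
```

In an actual constrained decoder, the same clause semantics guide the beam search token by token rather than filtering complete outputs afterwards.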

We employ this procedure to create a new dataset for natural language inference (NLI) in the biomedical domain, called BioNLI. Table 1 shows an actual example from BioNLI. The dataset contains 13,489 positive entailment examples and 26,907 adversarial negative examples generated using nine different strategies. An evaluation of a sample of these negative examples by human biomedical experts indicated that 86% of them are indeed true negatives. We trained two state-of-the-art neural NLI classifiers on this dataset and show that the overall F1 score remains relatively low, in the mid-70s, which indicates that this NLI task remains to be solved. Critically, we observe that the performance on the different classes of negative examples varies widely, from 97% accuracy on the simple negative examples that change the role of the entities in the hypothesis, to 55% (i.e., barely better than chance) on the negative examples generated using neuro-logic decoding. Further, given how the dataset is constructed, we can also test whether models produce consistent decisions on all adversarial negatives associated with a mechanism, giving deeper insight into model behavior. Thus, in addition to its importance in the biomedical field, we hope that this dataset will serve as a benchmark to test models’ language understanding abilities.
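The per-class breakdown and the mechanism-level consistency test mentioned above can both be computed from per-example predictions. This is a sketch under stated assumptions: the `Prediction` record, its field names, the strategy labels `role_flip` and `neuro_logic`, and the reading of "consistent" as "every adversarial negative of a mechanism is rejected" are illustrative choices, not definitions from the paper. The toy predictions are invented and are not BioNLI results.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    mechanism_id: str  # hypothetical id of the source abstract / mechanism
    strategy: str      # "original" for positives, otherwise the perturbation name
    gold: int          # 1 = entailed, 0 = not entailed
    predicted: int


def accuracy_by_strategy(preds):
    """Accuracy computed separately for each generation strategy."""
    hits, totals = defaultdict(int), defaultdict(int)
    for p in preds:
        totals[p.strategy] += 1
        hits[p.strategy] += int(p.gold == p.predicted)
    return {s: hits[s] / totals[s] for s in totals}


def mechanism_consistency(preds):
    """Fraction of mechanisms whose adversarial negatives are ALL predicted as not entailed."""
    rejected = defaultdict(list)
    for p in preds:
        if p.gold == 0:
            rejected[p.mechanism_id].append(p.predicted == 0)
    if not rejected:
        return 0.0
    return sum(all(flags) for flags in rejected.values()) / len(rejected)


preds = [
    Prediction("m1", "original", 1, 1),
    Prediction("m1", "role_flip", 0, 0),
    Prediction("m1", "neuro_logic", 0, 1),
    Prediction("m2", "original", 1, 1),
    Prediction("m2", "role_flip", 0, 0),
    Prediction("m2", "neuro_logic", 0, 0),
]
print(accuracy_by_strategy(preds))   # {'original': 1.0, 'role_flip': 1.0, 'neuro_logic': 0.5}
print(mechanism_consistency(preds))  # 0.5
```

Mechanism-level consistency is stricter than per-example accuracy: mechanism `m1` counts as a failure even though most of its examples are classified correctly.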

2 Related Work

Previous work on NLI in scientific domains includes medical question answering (Abacha and Demner-Fushman, 2016), entailment-based text exploration in health care (Adler et al., 2012), entailment recognition in medical texts (Abacha et al., 2015), textual inference in clinical trials (Shivade et al., 2015), NLI on medical history (Romanov and Shivade, 2018), and SciTail (Khot et al., 2018), which is created from multiple-choice science exams and web sentences. These datasets have modest sizes (Abacha et al., 2015), target specific NLP problems such as coreference resolution or named entity extraction (Shivade et al., 2015), or rely on domain experts to generate inconsistent data, which is costly and labor-intensive. Additionally, they focus on sentence-to-sentence entailment tasks, where neither the premise nor the hypothesis is longer than one sentence. Most importantly, none of these are directly aimed at inference on mechanisms in biomedical literature.

Our work is also related to NLI tasks that go beyond sentence-level entailment. For example, Yin et al. (2021) include premises longer than a sentence, but use only three simple rule-based methods to create negative samples. Yan et al. (2021) and Nie et al. (2019) use larger contexts as premises for the NLI task, but only in general-purpose domains such as news, fiction, and Wikipedia. In contrast, BioNLI is an inference problem with large contexts as premises in the biomedical domain, which often requires handling more complex texts and domain knowledge.

There is also a growing body of research exploring factual inconsistency in text generation models (Maynez et al., 2020; Zhu et al., 2021; Utama et al., 2022). We take advantage of the known tendency of generation models to hallucinate, and we also employ constraint-based neuro-logic decoding from recently introduced decoding methods (Lu et al., 2021b,a; Kumar et al., 2021) to generate adversarial examples for the BioNLI dataset.

3 BioNLI Creation

We model the task of understanding whether a high-level mechanistic statement is supported by lower-level experimental evidence as natural language inference (NLI). The goal of NLI is to determine whether the given hypothesis can be entailed from the premise (Dagan et al., 2005). This is typically modeled with three labels (entailed or not, plus a neutral class if the two texts are unrelated). In our case, the premise contains the experimental evidence, while the hypothesis summarizes the higher-level mechanistic information. Both texts are extracted from abstracts of biomedical publications, where the initial sentences (the supporting set) describe experimental evidence, and a conclusion sentence summarizes the mechanistic information that is entailed by these experiments.
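Under this framing, each abstract contributes one entailed pair (supporting set, original conclusion) plus one non-entailed pair per perturbed conclusion, all sharing the same premise. The sketch below assumes a binary label encoding, an abstract already split into sentences with the conclusion last, and a premise built by simple concatenation; the names `NLIExample` and `make_examples` are hypothetical. The sentences in the usage example are abridged from Table 1.

```python
from dataclasses import dataclass

ENTAILED, NOT_ENTAILED = 1, 0


@dataclass(frozen=True)
class NLIExample:
    premise: str     # supporting set: sentences describing experimental evidence
    hypothesis: str  # conclusion sentence (original or perturbed)
    label: int


def make_examples(sentences, negative_hypotheses):
    """Pair one abstract's supporting set with its conclusion and with perturbed conclusions.

    `sentences` is the abstract split into sentences, with the conclusion last.
    """
    if len(sentences) < 2:
        raise ValueError("need at least one supporting sentence and a conclusion")
    *supporting, conclusion = sentences
    premise = " ".join(supporting)
    examples = [NLIExample(premise, conclusion, ENTAILED)]
    examples += [NLIExample(premise, h, NOT_ENTAILED) for h in negative_hypotheses]
    return examples


abstract = [
    "Glucose accelerated uracil exit, while retarding its entry.",
    "It was concluded that uracil exit is probably not driven by the proton gradient.",
]
negatives = ["It is concluded that uracil exit is facilitated by a proton gradient."]
for ex in make_examples(abstract, negatives):
    print(ex.label, ex.hypothesis)
```

Sharing one premise across the positive and all its negatives is what later makes per-mechanism consistency checks possible.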

In this work, we introduce the BioNLI dataset, an NLI dataset automatically created from a set of abstracts of PubMed open-access publications. We collected all abstracts that contain a conclusion sentence with mechanistic information at the end of the abstract and filtered out the rest. Following previous work in mechanism generation (Bastan et al., 2022), we focus on conclusion sentences that discuss binary biochemical interactions between a regulator and a regulated entity (both of which are proteins or chemicals). We then generate negative examples by manipulating the structure of the conclusion sentences.

In the following subsections, we describe in detail the generation of both positive and negative examples in BioNLI.

3.1 Identifying Abstracts with Mechanistic Information

To identify abstracts that contain conclusion sentences with such binary biochemical interactions, we followed the same procedure and used the same dataset³ as Bastan et al. (2022). That is, we used a series of patterns (e.g., finding words that start with “conclud”; all patterns are described in Appendix A) to identify conclusion sentences at the end of abstracts, and we consider the preceding sentences as the supporting set. We analyzed the SuMe dataset and found that 91% of its abstracts end with conclusion sentences, which indicates that the filtering heuristic is robust.
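A minimal sketch of this filtering step, assuming the abstract has already been split into sentences. Only the “conclud” pattern comes from the text; the function name `split_abstract` is hypothetical, and the optional length bounds are an assumption that mirrors the 3 to 15 sentence premise range reported in the introduction rather than a documented filter.

```python
import re

# Only the pattern named in the text (words starting with "conclud");
# the remaining patterns of Appendix A are not reproduced here.
CONCLUSION_PATTERNS = [re.compile(r"\bconclud\w*", re.IGNORECASE)]


def split_abstract(sentences, min_support=None, max_support=None):
    """Return (supporting_set, conclusion), or None if the abstract is filtered out.

    The last sentence must match a conclusion pattern; the optional bounds
    (an assumption) restrict the number of supporting sentences.
    """
    if not sentences:
        return None
    *supporting, last = sentences
    if not any(p.search(last) for p in CONCLUSION_PATTERNS):
        return None
    if min_support is not None and len(supporting) < min_support:
        return None
    if max_support is not None and len(supporting) > max_support:
        return None
    return supporting, last


abstract = [
    "Uracil exit from washed yeast cells is an active process.",
    "Glucose accelerated uracil exit.",
    "DNP lowered the gradient.",
    "We conclude that uracil exit may utilize ATP directly.",
]
print(split_abstract(abstract, min_support=3, max_support=15))
print(split_abstract(abstract[:-1]))  # None: last sentence is not a conclusion
```

Anchoring the check on the last sentence matches the procedure above, which looks for conclusion sentences at the end of abstracts.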

Further, we take advantage of the structured text in the biomedical domain by focusing on abstracts that describe a mechanism between two biochemical entities. One of the main entities is called the regulator entity and is marked with <re> entity <er> inside the text; the other main entity is called the regulated entity and is marked with <el> entity <le> inside the text. We use this structure to generate negative examples by modifying it.

3.2 Positive Instances

For positive examples, we simply use the original conclusion sentence from the abstract as the hypothesis and the supporting set as the premise. These sentences are likely to be accurate, as they are written by domain experts and peer-reviewed by other scientists.

3.3 Adversarial Instances

The key contribution of this paper is the automatic creation of meaningful yet difficult negative examples without the use of experts. We introduce multiple strategies for creating negative examples, which we divide into two groups, rule-based and neural-based counterfactuals, both detailed below. We show examples of these strategies in Table 4.

3.3.1 Rule-Based Counterfactuals

This category consists of rule-based methods that convert a correct conclusion sentence (i.e., the hypothesis) into an instance that is not entailed by the given supporting set, by perturbing parts of its semantic structure. Most of them are used in general-domain factual consistency evaluation systems (Kryściński et al., 2019; Zhu et al., 2020):

³ This paper works at a higher level of abstraction, which contains causal semantic relations (or “activations”), which are always directed and not necessarily asymmetric.
